The COVID-19 response demonstrated how computational biology could enhance public health research. Though the pandemic has waned, public health researchers remain vigilant about catching dangerous disease strains early and speeding vaccine development. The combination of today’s artificial intelligence tools with genomic data could improve preparedness.

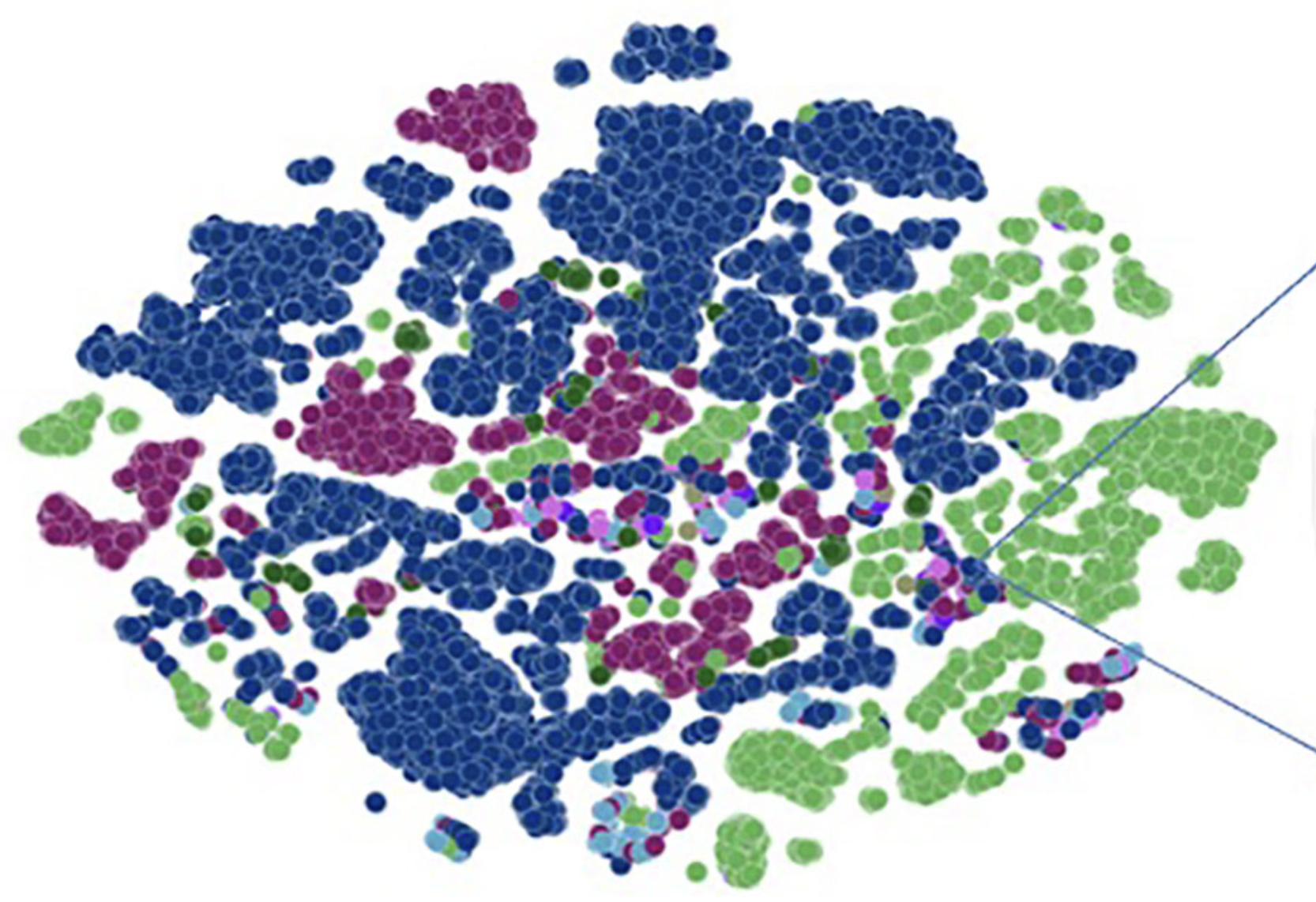

The large language models (LLMs) used in chat-based tools have showcased AI’s potential. Arvind Ramanathan of Argonne National Laboratory and his team have already demonstrated that LLMs can predict viral evolution in SARS-CoV-2. In 2022, they developed an open-source genome-scale language model (GenSLM) that predicted the evolution of important SARS-CoV-2 variants. That research won the 2022 Gordon Bell Special Prize for High Performance Computing-Based COVID-19 Research.

With current threats such as mpox and bird flu circulating globally and the resurgence of deadly Marburg virus in Africa, Ramanathan wondered if they could expand their modeling to other infectious agents. “It’s not just beta-coronaviruses. We actually want to have a list of these models available across families of viruses, so that the next big pandemic that shows up you’re prepared to address any concern that arises.”

Instead of focusing on a single virus family, the team has trained a foundational GenSLM on all available gene data. The model can analyze individual genes and recognize how they could evolve. This broader approach can apply to infectious bacteria, too.

LLM-based AI such as GenSLM belongs to a larger category known as foundation models. Ramanathan and colleagues want to build on these tools and techniques, he says, so “that we can understand viral evolution or bacterial evolution, or any other forms of evolution we might be interested in.” To accomplish this task, the team is supported by a Department of Energy Innovative and Novel Computational Impact on Theory and Experiment (INCITE) allocation.

GenSLMs take advantage of LLMs’ language-processing abilities and turn them toward sorting and then predicting the patterns of letter abbreviations in the genetic code. Genes produce proteins that carry out cells’ work and make pathogens infectious. Training LLMs to solve biology problems presents challenges, Ramanathan notes, and each problem “comes with its own ingredient for failure.”

Genes have a grammatical structure, Ramanathan says, which makes defining them relatively easy. Specific letter combinations mark the heart of the gene, the region that carries the information for protein production.
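
The “grammar” Ramanathan describes can be illustrated with a toy scan for open reading frames, the stretches that run from a start signal to a stop signal. This is only a minimal sketch and not how GenSLMs work: it assumes the standard start codon `ATG` and stop codons `TAA`, `TAG` and `TGA`, looks at the forward strand only, and uses a hypothetical function name, `find_orfs`.

```python
# Toy illustration only: assumes the standard genetic code's start/stop codons
# and scans just the forward strand. `find_orfs` is a hypothetical helper name.
START_CODON = "ATG"
STOP_CODONS = {"TAA", "TAG", "TGA"}


def find_orfs(seq, min_codons=2):
    """Return sorted (start, end) index pairs of open reading frames.

    `end` is exclusive and includes the stop codon.
    """
    seq = seq.upper()
    orfs = []
    for frame in range(3):  # three possible reading frames
        start = None
        for i in range(frame, len(seq) - 2, 3):
            codon = seq[i:i + 3]
            if start is None and codon == START_CODON:
                start = i  # first start codon opens a candidate gene
            elif start is not None and codon in STOP_CODONS:
                if (i + 3 - start) // 3 >= min_codons:
                    orfs.append((start, i + 3))
                start = None  # look for the next start codon
    return sorted(orfs)


if __name__ == "__main__":
    demo = "CCATGAAATAGGG"
    for begin, end in find_orfs(demo):
        print(begin, end, demo[begin:end])
```

Real gene finders also handle the reverse strand, alternative start codons and overlapping frames, which is part of why placing genes in context is harder than spotting them.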

The work becomes tricky, though, when placing those genes and the regulatory information that surrounds them in context. Viruses and bacteria employ many strategies to read the same DNA sequence in multiple ways to produce more than one protein. And some viruses bundle different parts of their genome in separate particles.

The sheer volume of genomic information also pushes the limits of LLMs. Today’s largest language models might sift for connections among 32,000 words, a fraction of the typical size of even a small genome at 6 million characters. The work is highly risky, Ramanathan admits. “But it’s also innovative, because the language models actually do possess that ability to fill in the gaps of things that we might not know.”
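
The gap between those two figures can be made concrete with some quick arithmetic. The sketch below takes the 32,000 and 6 million numbers from the text; how many genome characters fit in one model “word” is an assumption here (one character per token, or three if each token were a codon), and `windows_needed` is a hypothetical helper.

```python
import math

CONTEXT_TOKENS = 32_000    # figure quoted in the article
GENOME_CHARS = 6_000_000   # "small genome" figure quoted in the article


def windows_needed(genome_chars, context_tokens, chars_per_token=1):
    """Count non-overlapping context windows needed to cover a genome.

    `chars_per_token` is an assumption: 1 for single-letter tokens,
    3 if each token were a three-letter codon.
    """
    tokens = math.ceil(genome_chars / chars_per_token)
    return math.ceil(tokens / context_tokens)


if __name__ == "__main__":
    print(windows_needed(GENOME_CHARS, CONTEXT_TOKENS))      # one letter per token
    print(windows_needed(GENOME_CHARS, CONTEXT_TOKENS, 3))   # one codon per token
```

Under either assumption, a single window sees only a small slice of the genome, so long-range relationships between distant genes fall outside what the model can examine at once.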

The pretraining’s scope means that Ramanathan and his colleagues burned through their initial allocation of 50,000 node-hours on Argonne’s Polaris in the first three months of 2024. They continued the work through a Director’s Discretionary award on the Oak Ridge Leadership Computing Facility’s Frontier. With Aurora now available at the Argonne Leadership Computing Facility, they’ll use a much larger allocation of 270,000 node-hours on that exascale system.

They’ve already started training the initial model on individual virus families. Validating the model’s predictions involves a variety of tests to see whether the computational results are biologically valid. When training, they use many but not all of the genomes from a virus family. Then they ask the model to predict data for the viruses they left out and compare the model’s results with the known sequences. They also use a technique called preference optimization, which incorporates feedback into the training to guide the model toward biologically relevant results.
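
The hold-out idea described above can be sketched in a few lines. This is an illustrative outline rather than the team’s pipeline: the function names, the 20 percent hold-out fraction and the simple position-by-position identity score are all assumptions.

```python
import random


def split_genomes(genome_ids, holdout_fraction=0.2, seed=0):
    """Split genome IDs into (train, held_out) lists, reproducibly.

    The 20% default is an assumption for illustration.
    """
    ids = sorted(genome_ids)
    random.Random(seed).shuffle(ids)
    n_holdout = max(1, round(len(ids) * holdout_fraction))
    return ids[n_holdout:], ids[:n_holdout]


def sequence_identity(predicted, known):
    """Fraction of matching positions, relative to the longer sequence.

    A deliberately crude stand-in for a real alignment-based comparison.
    """
    if not predicted and not known:
        return 1.0
    matches = sum(a == b for a, b in zip(predicted, known))
    return matches / max(len(predicted), len(known))


if __name__ == "__main__":
    train, held_out = split_genomes([f"genome_{i}" for i in range(10)])
    print(len(train), "for training;", len(held_out), "held out")
    print(sequence_identity("ATGC", "ATGA"))
```

In practice the comparison would rely on sequence alignment and biological checks rather than raw position matching, but the structure is the same: the model never sees the held-out genomes until it is asked to predict them.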

An international partnership, the Coalition for Epidemic Preparedness Innovations (CEPI), is also supporting Ramanathan’s efforts to apply viral GenSLMs to vaccine design. After viruses, the team will use a similar strategy with infectious bacteria, where these tools could help develop new antimicrobial drugs for multidrug-resistant infections.

Ramanathan’s group also is examining protein design by stringing together multiple models. The team has combined a GenSLM model with a text-based one to allow researchers to incorporate both DNA sequences and descriptive information, such as the function that the protein should have within an organism. That work was recognized as a finalist for the 2024 Gordon Bell Prize.

Other researchers around the world have contacted Ramanathan about using GenSLMs, something he never imagined when he started this research. As a result, his team is also grappling with how to make the models available in useful forms. “We are happy that people are willing to use these models and trying to build on them.”