Powering today’s petaflops computers – capable of quadrillions of calculations per second – costs $5 million to $10 million a year. Using current technology and linking a million teraflops (trillions of calculations per second) processors, an exascale system a thousand times more powerful would draw more than a gigawatt of electricity and cost at least $1 billion a year, says William Harrod, Research Division Director of the Department of Energy’s Office of Science Advanced Scientific Computing Research (ASCR) program.

Those costs are a nonstarter, says Thuc Hoang, manager of high-performance computing (HPC) development and operations for the National Nuclear Security Administration’s (NNSA) Advanced Simulation and Computing program. “There’s no way we can increase our electricity payment by 50 times or 100 times.”

Even an optimistic outcome of current R&D activities projects a factor-of-five gap between expected power efficiency and what’s necessary for an exascale system to run on around 20 megawatts, DOE’s target. It will take improvements in nearly every part of the computing stack – hardware, system software, communications, storage, algorithms, equipment cooling and more – to even come close.
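
To put the dollar figures in proportion, here is a rough back-of-the-envelope sketch in Python. It treats Harrod's "more than a gigawatt" and "at least $1 billion a year" as round numbers, and it assumes the machine runs at full power all year and that the whole bill is electricity. Those assumptions, and the function names, are illustrative and not from DOE.

```python
# Back-of-the-envelope sketch; all simplifications are assumptions, not DOE figures.
HOURS_PER_YEAR = 24 * 365  # assumes the machine runs at full power all year


def implied_rate_usd_per_kwh(yearly_cost_usd, power_watts):
    """Electricity price implied by a yearly bill and a constant power draw."""
    kwh_per_year = power_watts / 1000 * HOURS_PER_YEAR
    return yearly_cost_usd / kwh_per_year


def annual_cost_usd(power_watts, rate_usd_per_kwh):
    """Yearly electricity bill for a constant power draw at a given price."""
    return power_watts / 1000 * HOURS_PER_YEAR * rate_usd_per_kwh


# Round figures from the article: about 1 gigawatt costing about $1 billion a year.
rate = implied_rate_usd_per_kwh(1e9, 1e9)

# DOE's target of around 20 megawatts, priced at the same implied rate.
target_cost = annual_cost_usd(20e6, rate)

print(f"Implied rate: ${rate:.3f} per kWh")
print(f"20 MW system: ${target_cost / 1e6:.0f} million per year")
```

Under those assumptions the implied price is about 11 cents per kilowatt-hour, and a 20-megawatt machine would cost about $20 million a year to power, two to four times today's $5 million to $10 million.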

“Computing is now at a critical crossroads,” according to Harrod, who is delivering a keynote talk on the topic this week in Salt Lake City at SC12, the annual supercomputing conference. “We can no longer proceed down the path of steady but incremental progress to which we have become accustomed. Thus, exascale computing is not simply an effort to provide the next level of computational power by creative scaling up of current petascale computing systems. New architectures will be required.”

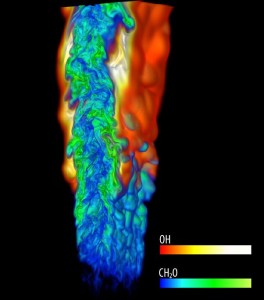

Combustion simulations like this one from Sandia National Laboratories will be more precise with exaflops-capable computing, helping create more fuel-efficient engines and other devices. This shows a lifted ethylene/air jet flame, with the hydroxyl radical (red/white) denoting the lifted flame and formaldehyde (blue/green) denoting ignition intermediates upstream of the lifted flame. The simulation consisted of 1.28 billion grid points tracking 22 chemical species. Image courtesy of Hongfeng Yu, Sandia National Laboratories.

FastForward, a program supported by the Office of Science and NNSA to advance computing along the path to exascale, will tackle power consumption and other necessities of extreme-scale computing such as memory, processors and more. As a first step, this summer the program awarded $62 million in contracts over two years to chipmakers AMD, Intel (including its recently acquired Whamcloud data storage company) and NVIDIA and to computer-builder IBM for improvements in the energy efficiency of processors and memory and in data storage and movement.

These investments are critical, ASCR and NNSA officials say, because exascale computing will give researchers access to a new level of scientific and engineering simulations. Among Office of Science priorities, these advances could spur new energy-storage materials, feasible ways to convert plants into fuels and ever more precise models to gauge human impact on climate.

NNSA is counting on exascale computing to help maintain the country’s nuclear deterrent, to ensure that it remains safe and reliable without underground testing. Soon, it won’t be enough to rely on decades-old test data; researchers will need greatly refined science-based simulations to predict how systems will perform and how best to refurbish them to ensure reliability. These simulations must address fundamental physics questions, such as how aging materials perform.

But reducing power demand also has ramifications for industry, says Terri Quinn, principal deputy head of Integrated Computing and Communications at Lawrence Livermore National Laboratory. If smartphones and tablet computers “could operate at a lower power consumption either with the same or greater performance, that would be a huge win.” Trimming power use also is important for huge data centers, like those Google, IBM and other firms operate. Worldwide, enterprise data centers consume approximately 30 gigawatts of power, The New York Times recently reported.

Still, computing technology companies’ plans to address power consumption aren’t aggressive enough to achieve the agencies’ goal of exascale within the decade, says Quinn, who coordinates FastForward on behalf of Livermore and six other DOE labs: Argonne, Lawrence Berkeley, Los Alamos, Oak Ridge, Pacific Northwest and Sandia. “There’s a lot of attention on it, but we need greater attention.”

FastForward targets memory because powering it and moving data in and out account for the largest electricity demands in HPC. Simply reducing the amount of memory available is not an option for science and engineering simulations that are built upon large experimental datasets and complex theoretical models. With current technology, Quinn says, operating just the memory an exascale machine needs would exceed the power goal for the entire system. Getting past that means reducing the number of bytes moved per calculation and improving memory capacity, bandwidth and energy efficiency.

Memory is a big target, Quinn says, but processors need attention, too, so FastForward also supports work to boost their efficiency by cutting the number of watts expended per operation. IBM Blue Gene/Q systems, like the ones installed at Livermore and Argonne, currently hold the record on the Green500 list of the world’s most energy-efficient supercomputers at around 2.1 gigaflops (billion operations per second) per watt of power consumed. FastForward aims to improve that by around 25 times, to 50 gigaflops per watt.
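
The 50-gigaflops-per-watt goal lines up with DOE's power target of around 20 megawatts, as a short Python sketch shows. It assumes an exascale machine delivers exactly 10^18 operations per second and that the quoted efficiency holds for the whole system. The function name is illustrative.

```python
# Illustrative arithmetic only; assumes efficiency is constant across the whole system.
EXAFLOPS = 1e18  # operations per second for an exascale machine


def power_megawatts(flops, gflops_per_watt):
    """Power draw in megawatts for a given speed and energy efficiency."""
    watts = flops / (gflops_per_watt * 1e9)
    return watts / 1e6


for label, efficiency in [("Blue Gene/Q record", 2.1), ("FastForward goal", 50.0)]:
    megawatts = power_megawatts(EXAFLOPS, efficiency)
    print(f"{label}: {efficiency} gigaflops/watt -> {megawatts:.0f} MW")
```

At 2.1 gigaflops per watt, an exaflops machine would need roughly 476 megawatts. At 50 gigaflops per watt, it would need 20 megawatts.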

Hardware is only part of the solution, NNSA’s Hoang notes. Application software must now be written to minimize data movement – an objective most DOE and NNSA application codes have not primarily emphasized over the past two decades.

Most codes also will need revision to cope with heterogeneous architectures, which mix regular processors with power-efficient accelerators such as the graphics processing units (GPUs) first used in computer gaming and now part of the world’s fastest computer, Titan, at Oak Ridge. “It takes people with new or different programming ideas to rethink how codes should be rewritten or tweaked” so they’re not totally tied to the old programming model and can cope with heterogeneous processors, Hoang says.

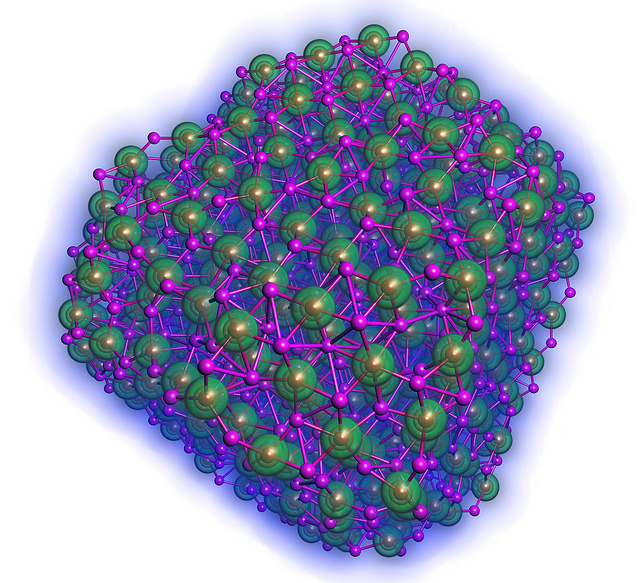

That’s one reason technical experts from the seven DOE labs will work with FastForward vendors on co-design, she adds. “We need to understand the new architecture’s requirements or the new architecture design points that would demand different things from our algorithms.” ASCR supports three exascale co-design centers, uniting HPC users from many fields with builders, programmers and applied mathematicians to develop machines that can address a spectrum of scientific problems such as combustion, materials design and nuclear engineering.

Of course, energy-efficient memory and processors are just two exascale challenges, Quinn notes. There’s the cost of cooling a huge machine. Also, if processors run at reduced power, computers will need more of them, complicating concurrency – keeping millions of cores busy at about the same level all the time. And the more parts, the more difficult it is to keep an exascale machine operating for more than a few hours at a stretch. Each failure and restart consumes energy.

“Power is a very important (challenge) and would kill us, but these other things are, I would say, equally important,” Quinn says. FastForward isn’t designed to address those other questions and, with only two years of research, is unlikely to produce marketable products. Companies will carry on development if the commercial prospects are good or will seek new government investments.

“FastForward was really trying to start R&D thinking,” Quinn says. “It’s just trying to take the vendors’ roadmaps, which already are tackling the power problem, and see if we could help them do something even more aggressive.”

Harrod says the agencies “anticipate funding a companion program to FastForward that will investigate the design and integration of exascale computers. We will not overcome the exascale challenges by minimizing the power consumption of the components. We need to redesign the system, both hardware and software, to achieve our goals.”