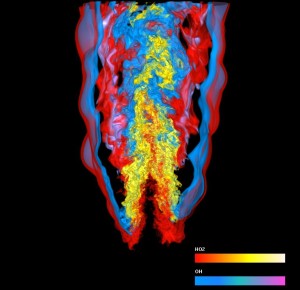

Simultaneous visualization of two variables of a turbulent combustion simulation performed by Jacqueline Chen, Sandia National Laboratories. Credit: Hongfeng Yu, University of California at Davis.

It’ll take a team effort if the next generation of supercomputers is to maximize dividends for science.

To that end, the Department of Energy’s Office of Advanced Scientific Computing Research (ASCR) is launching the first three in a series of Exascale Co-Design Centers. DOE laboratories, working with academic and industrial collaborators, will investigate the challenges of creating exascale-capable applications in specific disciplines. The centers will perform research in computer science and applied mathematics to inform hardware design, understand systems software requirements and devise new algorithms for the intended applications.

“We really see these as the point of the spear going toward exascale,” says Daniel Hitchcock, acting associate director of the DOE Office of Science for ASCR. The centers were chosen from among 21 proposals because their scientific domains are different enough to yield a range of solutions others can follow. They also were chosen because each demonstrated strong ability to manage a complex collaboration and because they’ll focus on science considered vital to DOE and the country, Hitchcock says.

The three centers are:

The Combustion Exascale Co-Design Center, directed by Jacqueline Chen, a distinguished member of the technical staff at Sandia National Laboratories. Collaborators include researchers from Los Alamos, Lawrence Berkeley, Lawrence Livermore and Oak Ridge national laboratories; the National Renewable Energy Laboratory; the University of Texas at Austin and University of Utah; Stanford and Rutgers universities; and the Georgia Institute of Technology.

With exascale computing power, Chen says, combustion simulations will portray more detailed chemical reactions under extreme turbulence and pressure conditions. “We need a bigger computer to tackle those more difficult regimes, but more realistic regimes, where automobiles, trucks and gas turbines for power and (aerospace) operate.”

CESAR, the Center for Exascale Simulation of Advanced Reactors, directed by Robert Rosner, a senior fellow in the Computation Institute, a joint venture between Argonne National Laboratory and the University of Chicago, where Rosner is also a professor of physics and of astronomy and astrophysics. Collaborators include other scientists at Argonne and Chicago, and researchers at the Massachusetts Institute of Technology; IBM; Texas A&M and Rice universities; and Lawrence Livermore, Los Alamos, Pacific Northwest and Oak Ridge national laboratories.

CESAR will combine complex, multiphysics nuclear reactor simulation codes with hardware capable of running them. Modeling is key to understanding what happens in the extreme environments of reactor cores and generating data for designing the next generation of safer, more efficient and more economical nuclear energy plants.

Exascale computing can help model in detail events that can’t be modeled today, such as loss-of-coolant accidents, Rosner says. “That really is one of the ambitious targets,” because such a simulation couples codes portraying thermal hydraulics, neutron transport and structures inside the core.

The Exascale Co-Design Center for Materials in Extreme Environments (ExMatEx), directed by Timothy Germann, a scientist in the Physics and Chemistry of Materials Group, Theoretical Division, at Los Alamos National Laboratory. Collaborators include researchers from Los Alamos, Lawrence Livermore, Oak Ridge and Sandia national laboratories; Stanford University; and the California Institute of Technology.

The center will focus on two intense environments: mechanical extremes such as shock compression and high strain-rate loads (conditions experienced in nuclear weapons and space), and high radiation, such as what nuclear reactor materials endure. Most such models today depict materials on just one of many scales of length and time, Germann says. Simulations must bridge these scales to help us understand material behavior under extreme conditions. “The end goal is to couple scales,” with more precise calculations at different locations in the model. That demands tremendous computer power.

Coordinated co-design efforts will pay big benefits, says Michael Heroux, a distinguished member of the technical staff in the Computing Research Center at Sandia National Laboratories.

“The more of these pieces we can bring in and simultaneously design, the better off we’ll be, especially in this era of very rapid change” in technology.