Bahar Asgari thinks that high-performance computers (HPCs) could run far more efficiently and consume less energy. That's especially true when they crunch sparse datasets (ones with many zeros or empty values), which are common in scientific computing. Her solution: low-cost, domain-specific architectures paired with hardware-software co-optimization, reminiscent of processes in the human brain.

“If you look at the performance of modern scientific computers used for sparse problems, they achieve the desired speed, but they don’t run efficiently,” says Asgari, a University of Maryland assistant professor of computer science. “So, they end up using more energy than is necessary.”

Asgari is in the first year of a five-year, $875,000 Department of Energy Early Career Research Program award to develop systems enabling intelligent dynamic reconfigurability, which she has been working on since her time as a graduate student at the Georgia Institute of Technology.

One major reason HPCs are not energy efficient lies in their one-size-fits-all design. For the most part, manufacturers have no idea whether their components will be used for modeling nuclear transport, drug discovery, training artificial intelligence models or something else. So, they optimize chip design for the most common use case and make the entire machine programmable so it can be used for a wide range of applications.

“For many years, programmability has been the key to success in high-end computers,” Asgari says. “However, as technology scales upward, we must be more mindful about making these machines waste less energy.”

To improve computational efficiency and resource utilization, architects can turn to hardware specialization. But that's usually impractical because specialization is slow and costly. Moreover, no single specialized solution can meet the diverse requirements of scientific computing workloads. To address this challenge, Asgari is developing a technique called intelligent dynamic reconfigurability, in which hardware and software are treated as one system and adapted together to run one or more programs efficiently.

One example of the one-size-fits-all mentality behind modern CPU design is the assumption that the data CPUs crunch are dense. Imagine a spreadsheet in which most of the cells hold non-zero, or dense, entries. When processing such dense data (say, all the information a site like Amazon or Facebook keeps about a given user), the machine assumes it will need the same data or neighboring data in the next cycle and subsequent cycles. That assumption does not hold for sparse data, whose non-zero values are scattered irregularly through memory, so the next value needed is rarely the one stored next to the last. Amazon or Facebook might rely on such sparse data for applications like recommending a product or a new friend.
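To make the dense-versus-sparse contrast concrete, here is a minimal, textbook-style Python sketch. It is not Asgari's design; it assumes the widely used compressed sparse row (CSR) format, and the function names are hypothetical. The dense product walks each row and the vector `x` in order, while the sparse product reaches into `x` through a column-index array, so which entry it needs next depends on the data rather than on a fixed stride.

```python
# Hypothetical illustration: dense vs. sparse (CSR) matrix-vector products.

def dense_matvec(A, x):
    """Dense y = A @ x: reads each row and x in order (contiguous access)."""
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def to_csr(A):
    """Convert a list-of-lists matrix to CSR arrays, keeping only non-zeros."""
    values, col_idx, row_ptr = [], [], [0]
    for row in A:
        for j, a in enumerate(row):
            if a != 0:
                values.append(a)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x, trace=None):
    """Sparse y = A @ x. Entries of x are fetched via col_idx, an indirect,
    data-dependent index, so consecutive reads can land far apart."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            j = col_idx[k]          # data-dependent position in x
            if trace is not None:
                trace.append(j)     # record which x entries were touched
            acc += values[k] * x[j]
        y.append(acc)
    return y


if __name__ == "__main__":
    A = [
        [0, 0, 3, 0, 0, 0],
        [1, 0, 0, 0, 0, 2],
        [0, 0, 0, 0, 4, 0],
        [0, 5, 0, 0, 0, 0],
    ]
    x = [1, 2, 3, 4, 5, 6]
    vals, cols, ptr = to_csr(A)
    touched = []
    print(dense_matvec(A, x))                       # [9, 13, 20, 10]
    print(csr_matvec(vals, cols, ptr, x, touched))  # [9, 13, 20, 10]
    print(touched)                                  # [2, 0, 5, 4, 1]
```

Both functions give the same answer, but the sparse version touches `x` in the order 2, 0, 5, 4, 1. A cache or prefetcher tuned for "the next value is probably next door" gains little from that pattern, which is the mismatch described above.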

“The assumptions built into a device — which determine how components in a chip are put together — drive the way it brings data from memory to the cache, and the way it performs a computation,” Asgari says. “This stuff matters for efficiency. Traditionally, optimization is focused on computation. We pay less attention to how much data moves around. But in recent years, moving data around, whether on chip or off chip, consumes way more energy than the computation itself, so we want to also consider which configuration minimizes data movement.”

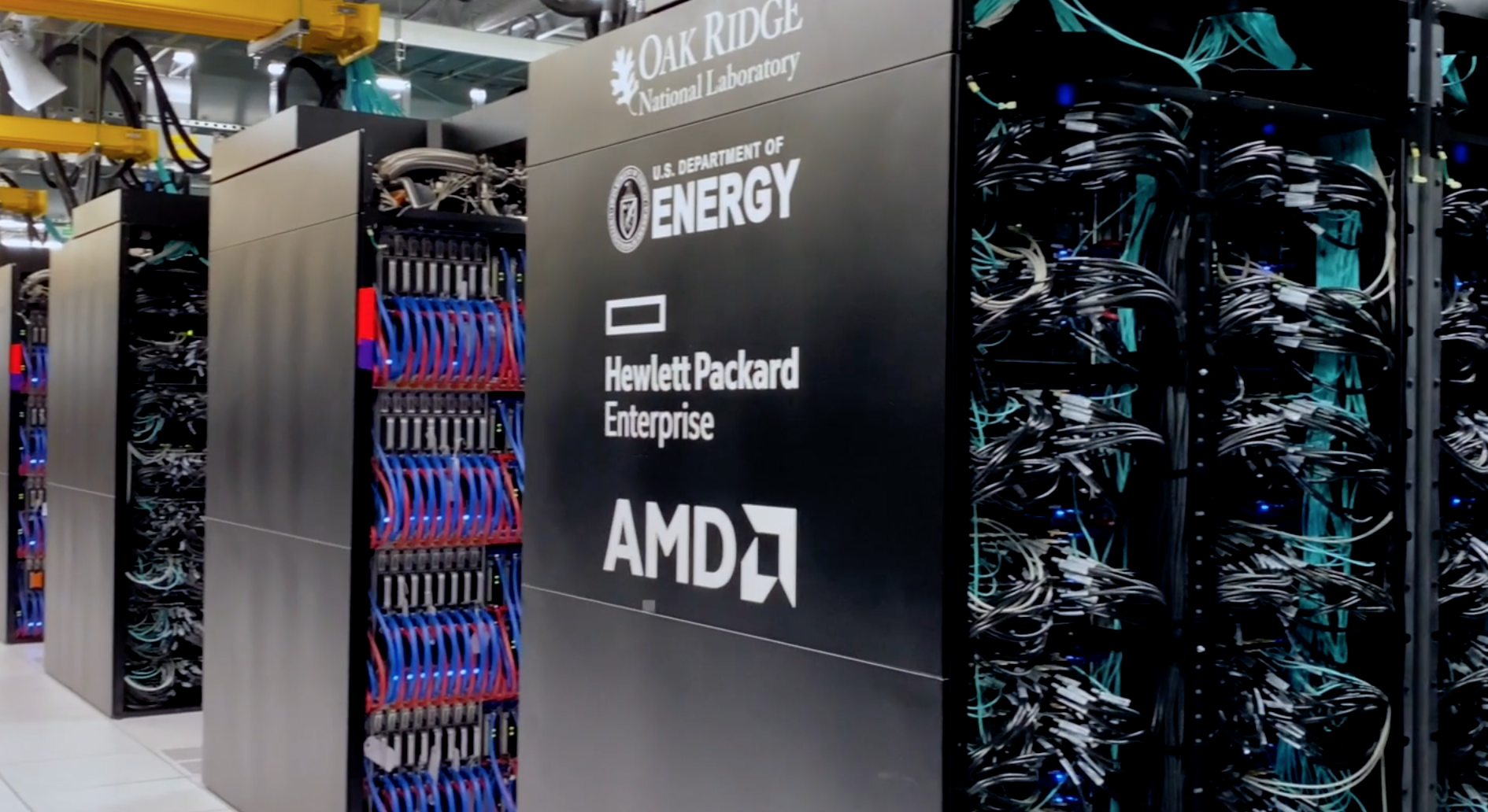

According to Asgari, even Frontier, at the Oak Ridge Leadership Computing Facility, and Fugaku, at Japan's RIKEN Center for Computational Science, two of the world's fastest HPCs, achieve no more than 0.8% and 3% of their peak floating-point performance, respectively, when running HPCG, a sparse benchmark developed to compare the performance of HPCs.
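A rough, roofline-style estimate hints at why sparse workloads can land so far below peak. The sketch below is a simplification under stated assumptions: it counts one multiply and one add per non-zero of a CSR sparse matrix-vector product against the bytes loaded for that non-zero, ignores any cache reuse, and plugs in made-up machine numbers. It is not a model of Frontier or Fugaku, and the function names are hypothetical.

```python
# Back-of-envelope roofline estimate with hypothetical numbers
# (not measurements of any real supercomputer).

def csr_spmv_intensity(value_bytes=8, index_bytes=4, x_bytes=8):
    """Flops per byte for one non-zero in CSR SpMV: 2 flops (multiply + add)
    against loading one value, one column index and one entry of x.
    Assumes no cache reuse, a pessimistic simplification."""
    flops = 2
    bytes_moved = value_bytes + index_bytes + x_bytes
    return flops / bytes_moved


def attainable_fraction(peak_flops, mem_bandwidth, intensity):
    """Roofline bound: achievable rate is min(peak, intensity * bandwidth).
    Returns that rate as a fraction of peak."""
    attainable = min(peak_flops, intensity * mem_bandwidth)
    return attainable / peak_flops


if __name__ == "__main__":
    ai = csr_spmv_intensity()  # 2 flops / 20 bytes = 0.1 flop per byte
    # Hypothetical node: 50 TFLOP/s peak, 2 TB/s memory bandwidth.
    frac = attainable_fraction(50e12, 2e12, ai)
    print(f"{frac:.1%} of peak")  # roughly 0.4% with these made-up numbers
```

With so little arithmetic per byte, the processor spends most of its time waiting on memory, which is why the data-movement concerns Asgari raises dominate for sparse problems.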

“Why have we been designing computers with the assumption that all data is dense?” Asgari asks. “We must also design for sparsity.”


Given the many types of sparse problems, though, it would not be effective to have one fixed design for dense problems and another for sparse problems. “You cannot just keep designing different hardware for different applications, as the design process is fairly time-consuming,” Asgari notes. “Therefore, we are trying to build systems with changeable hardware configurations based on different application requirements, including different types of sparse problems.”

Asgari found inspiration for dynamically changing hardware components in the brain. “When we learn something new, our neurons actually change by producing more or different neurotransmitters and/or more or different receptors. In other words, our brain can reconfigure its own hardware.”

She also found inspiration during stints at Google and Apple. One of her major projects at Google, where she worked between earning her Ph.D. and landing her current position, was getting workloads such as YouTube, Maps, Gmail and Search to run more efficiently by using less memory without compromising other important performance metrics, such as latency and throughput. At Apple, where she spent two summers during her doctoral program, Asgari experimented with building domain-specific architectures for sparse data applications into real-world devices.

Asgari’s configurable system could benefit a range of devices from high-end supercomputers to consumer-grade products. This system uses machine learning algorithms to derive the optimal hardware and software configuration based on samples of the data that a user plans to work with, how quickly the computing system needs to be reconfigured, and what technology the user can access. In some cases, Asgari’s system could spit out a shopping list of components that a client assembles to build out their system. In others, multiple components with different specifications could all exist, but connections among only the relevant components become activated.

Asgari and her team are currently working on a prototype using field-programmable gate array (FPGA) reconfigurable hardware, with the goal of building a system that incorporates a variety of emerging technologies, including novel chip designs and edge computing capabilities. Her team also will develop specialized software to optimize hardware efficiency. “If you have the best architecture but cannot properly use it, then the benefit is lost.”

Asgari is also lending her expertise in dynamic architecture to a project funded through the National Science Foundation's Principles and Practice of Scalable Systems (PPoSS) program, which issues just a few awards annually. The goal of the five-year, $5 million grant, which began last year, is to boost HPC efficiency by getting these computers to process data as it moves through the system, not just in the central processing unit (CPU).

“We’re working with the largest-scale supercomputers available today and trying to enable the nodes in their network to perform actual data processing,” Asgari explains. “Normally, we must wait until data reaches the CPU to process it. But we’re working on a way to process data in other nodes while it moves around.”

Asgari's role on the project will be designing the architecture to allow efficient computations outside the CPU. Her co-investigators include four researchers at Georgia Tech, including her former mentor, Hyesoon Kim, who bring expertise in energy-efficient computer architectures, numerical computing, performance engineering and high-performance algorithms.

“Ultimately,” she says, “my hope for these projects, and other projects in the lab, is to enable a larger class of applications running on next-generation, high-performance computers in the most efficient way possible, making advanced computing accessible to all and reducing its impact on the environment.”