Fans of Star Trek: The Next Generation know Q as an omnipotent alien with a mind like a supercomputer. Technophiles know Q as the next-generation IBM Blue Gene supercomputer.

The latter may share more than just the 17th letter of the alphabet with the former. Due to arrive during the second half of 2012 at a down-to-earth location – Argonne National Laboratory (ANL) – the machine, named Mira, promises to take scientific computing where no researcher has gone before.

“At 10 petaflops, Mira will be about 20 times more capable than our current Blue Gene/P system,” says Rick Stevens, ANL associate laboratory director for computing, environment and life sciences.

Flops, or floating point operations per second, are units of computing power. The average PC runs at roughly 7 gigaflops, or about 7 billion calculations per second. Mira can perform around 10 quadrillion calculations a second. A quadrillion is a 1 followed by 15 zeroes – a thousand trillion.
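To put those two figures side by side, here is a small back-of-the-envelope sketch. It uses only the numbers quoted above (7 gigaflops for a PC, 10 petaflops for Mira) and treats both as peak rates, which is a simplifying assumption: real programs rarely sustain peak speed on either machine. The variable names are illustrative.

```python
# Rough comparison of the quoted peak rates (assumed figures from the text).
GIGA = 10**9    # a billion
PETA = 10**15   # a quadrillion

pc_flops = 7 * GIGA       # "average PC", as quoted
mira_flops = 10 * PETA    # Mira, as quoted

# How many times more calculations per second Mira performs.
speedup = mira_flops / pc_flops

# How long the PC would need to match one second of Mira's work.
SECONDS_PER_DAY = 24 * 60 * 60
pc_days_per_mira_second = speedup / SECONDS_PER_DAY

print(f"Mira is about {speedup:,.0f} times faster than the PC")
print(f"One Mira-second takes the PC about {pc_days_per_mira_second:.1f} days")
```

On these assumptions, a single second of Mira's peak output would keep the PC busy for a little over two weeks.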

“Machines like Mira make it possible for one user to do computational research all of humanity working for all of human history could not have accomplished,” says James Myers, who directs the Rensselaer Polytechnic Institute (RPI) Computational Center for Nanotechnology Innovation. “That type of advance allows us to think about the challenges facing our society at entirely new levels.”

David Bader, director of the Georgia Institute of Technology High Performance Computing Center, calls the Blue Gene/Q “revolutionary.” It’s impossible to watch a star explode in person, but Mira’s supercharged resolution could simulate a supernova “down to the sub-millimeter level,” Bader explains. “We’re talking about simulations even more detailed than the human eye can perceive.” His group will get in line to use Mira soon.

Supercomputer simulations have become an important part of everyday science, too. They model, among other things, weather and storm conditions, fuel-efficient jet engines, nuclear reactor designs, earthquake-resistant skyscrapers and basic properties of next-generation materials for energy.

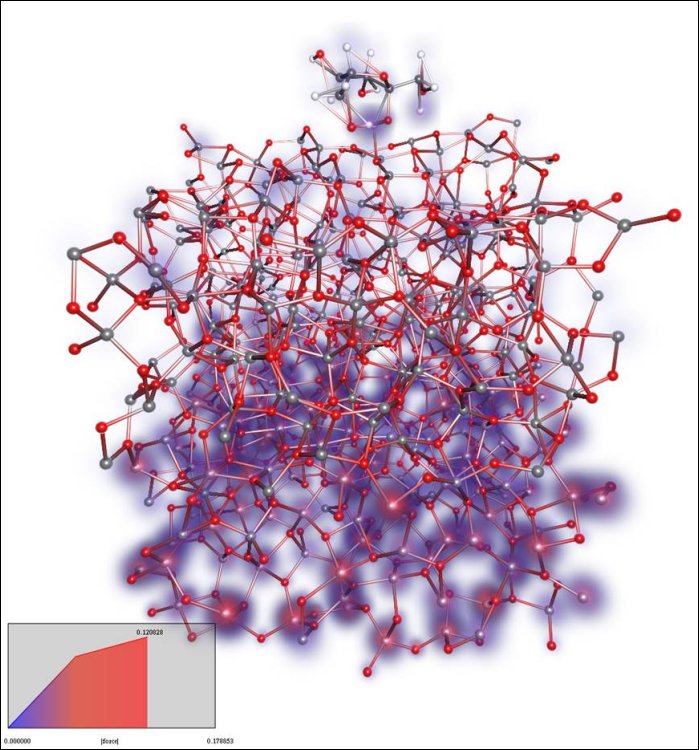

The IBM Blue Gene/Q supercomputer Mira will be installed (in 48 racks like this one) at Argonne National Laboratory in the second half of 2012 and will be about 20 times more powerful than Argonne’s current machine. Image courtesy of IBM and Argonne National Laboratory.

“The possibilities are endless,” says Myers, who notes that computers such as Mira also are critical for industrial competitiveness. “You can model different product designs and pick the best one. You can look at the process of creating a product, how the product functions during use, how easy it will be to recycle. You can make better products cheaper.”

Bader adds that teraflops computers – a step below petaflops – occupied “huge rooms just a decade ago. Now, you can buy a teraflops chip at an electronics store. Supercomputing has been democratized, largely because of computers like Mira that keep shrinking barriers.”

Theory and experimentation were once the only two supports of science. But that arrangement wasn’t enough as scientists entered the 20th century.

“Computation provides a third leg that allows a theory to be tested when an experiment is impossible or infeasible,” says Hal Sudborough, a computer science professor at the University of Texas, Dallas (UTD). Supercomputer simulations can help answer nuclear engineering questions, for example, like how to design a reactor core safer than today’s.

Simulations of supernovae, reactors and other systems are built on partial differential equations. Their solutions are, at best, only approximate, and the models they generate are fuzzy. Each leap in computer power reduces the fuzziness, sometimes enough so that new science emerges.
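The link between computing power and fuzziness can be seen on a toy problem. The sketch below is an illustration only, not one of the codes that will run on Mira: it solves the one-dimensional heat equation, chosen here because its exact answer is known, with a simple explicit finite-difference scheme, then measures how far the approximation drifts from the exact answer as the grid is refined. The function name `heat_error` and its parameters are assumptions made for this example.

```python
import math

def heat_error(nx, t_end=0.1, r=0.25):
    """Largest error of an explicit finite-difference solution of
    u_t = u_xx on [0, 1], with u = 0 at both ends and u(x, 0) = sin(pi x).
    The exact solution is exp(-pi^2 t) * sin(pi x)."""
    dx = 1.0 / nx
    # Choose a whole number of time steps so that dt / dx^2 stays near r.
    # The explicit scheme is only stable when dt / dx^2 <= 0.5.
    steps = max(1, round(t_end / (r * dx * dx)))
    dt = t_end / steps
    lam = dt / (dx * dx)

    u = [math.sin(math.pi * i * dx) for i in range(nx + 1)]
    for _ in range(steps):
        new = u[:]
        for i in range(1, nx):
            new[i] = u[i] + lam * (u[i - 1] - 2.0 * u[i] + u[i + 1])
        new[0] = new[nx] = 0.0  # fixed boundary values
        u = new

    decay = math.exp(-math.pi ** 2 * t_end)
    return max(abs(u[i] - decay * math.sin(math.pi * i * dx))
               for i in range(nx + 1))

# Finer grids cost more arithmetic but give a sharper answer.
errors = {nx: heat_error(nx) for nx in (10, 20, 40)}
for nx, err in errors.items():
    print(f"{nx:3d} grid cells: max error {err:.2e}")
```

Each doubling of the grid multiplies the work roughly eightfold in this scheme (twice the points, four times the time steps), which is why sharper simulations of three-dimensional problems demand machines of Mira's scale.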

Dynamo theory, which describes the Earth’s magnetic field, is another recent example, says Steve Pearce, a Simon Fraser University lecturer in computer science. This fascinating phenomenon remained locked away in unsolved differential equations until 1995. The complex, colorful simulations that model dynamo theory emerged only after groups from Los Alamos National Laboratory and the University of California, Los Angeles used more than a year of supercomputer time to approximate the magnetic field equations.

Dynamo theory is the kind of “tractable problem” that Mira will improve our ability to solve, Pearce says. As the name suggests, intractable problems cannot be solved in any practical amount of time. But tractable problems are soluble with enough time, depending on their size. For instance, modeling the Big Bang is a tractable problem with a large input size that would take much more time than modeling a planetary magnetic field. Adding flops by the quadrillions, Pearce says, considerably reduces that time.

With nearly 800,000 processing cores – circuits that execute programming instructions – Mira is hardly a typical computer. A laptop, by comparison, has two to four cores. Business data servers commonly have no more than 20 cores.

That capacity puts the Blue Gene/Q in rare company: ultra-super supercomputers such as Titan, a Cray XK6 at Oak Ridge National Laboratory, and Japan’s K Computer. “Each machine is best for different applications,” Stevens says. “For some problems, Mira is faster; for others, Titan and the K-machine might be faster.”

Speed, however, isn’t everything. Green is increasingly important in supercomputer architecture, in part for the sheer impracticality of hogging energy and cooling resources to improve performance.

“Different manufacturers take different approaches to power efficiency,” says RPI’s Myers. To gain efficiency, many of today’s most power-efficient, high-end computers use graphics processing units (GPUs) like those powering video games, “but Mira is ahead of GPU-based machines.”

So far ahead, in fact, that Mira should finish near the top of the Green500, a list of the world’s most environmentally responsible supercomputers, says David Kaeli, who directs the Northeastern University Computer Architecture Research Laboratory.

As one of two DOE Office of Science Leadership Computing Facilities, “Argonne was a natural site to host Mira,” Stevens says. Argonne scientists worked with IBM to design Blue Gene/Q and its predecessor Blue Gene/P, known as Intrepid. “Computer codes that already run on Blue Gene/P should be easy to convert for Blue Gene/Q.”

Of course, playing host to such a behemoth of a supercomputer doesn’t happen overnight. Specially equipped trucks will deliver Mira in pieces – racks – over several months. The racks, each weighing 4,000 pounds (1,814 kilograms), will be unpacked, checked out, positioned and connected to ultrapure cooling water lines, networking gear and enough power to deliver 100 kilowatts.

After months of tests – and if problems arise, fixes and repairs – the system will come online in 2013 to work on a first batch of 16 projects. For instance, Larry Curtiss, an Argonne distinguished fellow and physical chemist, will use Mira to study new batteries aimed at improving the range of electric vehicles from 100 miles today to 500 miles, Stevens says. For Stevens’ own bioinformatics work, “Mira will enable us to screen hundreds of millions of possible drugs, from new antibiotics to treatments for diseases such as Parkinson’s and Alzheimer’s. We will also use Mira to design microbes for bioenergy and microbial communities for sustainable agriculture.”

The next next-gen of computers – the exascale machines – will run at a quintillion flops, or 10^18 calculations per second.

“IBM’s goal is to build an exascale-class supercomputer,” UTD’s Sudborough says. Mira is a step toward that goal: an energy-efficient template on which engineers can scale up to hundreds of millions of cores working in parallel.

Explains Stevens: “Parallel computers break problems into smaller parts that can be worked on at the same time. Mira will have nearly 1 million things to do in parallel. But in the near future, we will need to break things down into 100 million parallel tasks. This is an enormous challenge, and fortunately systems like Mira will help us gain experience by the time exascale machines are possible at the end of the decade.”
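The idea Stevens describes, breaking one problem into parts that are worked on at the same time, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Mira is programmed: it adds up a list of squares by splitting the range into eight chunks, handing each chunk to a worker and combining the partial results. The helper names `split`, `partial_sum` and `parallel_sum_of_squares` are made up for this example, and Python threads are used only to show the pattern; they do not deliver the real speedups that a supercomputer's cores provide.

```python
from concurrent.futures import ThreadPoolExecutor

def split(n_items, n_parts):
    """Return (start, stop) ranges covering range(n_items) as evenly as possible."""
    base, extra = divmod(n_items, n_parts)
    ranges, start = [], 0
    for part in range(n_parts):
        # The first `extra` parts each take one leftover item.
        stop = start + base + (1 if part < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges

def partial_sum(bounds):
    """Work done by one worker: sum the squares in its own range."""
    start, stop = bounds
    return sum(i * i for i in range(start, stop))

def parallel_sum_of_squares(n_items, n_parts=8):
    """Split the job, run the parts concurrently, then combine the results."""
    with ThreadPoolExecutor(max_workers=n_parts) as pool:
        return sum(pool.map(partial_sum, split(n_items, n_parts)))

print(split(10, 3))
print(parallel_sum_of_squares(1000))
```

The hard part at Mira's scale is not the splitting itself but keeping nearly a million such workers busy and exchanging results efficiently, which is the experience Stevens expects the machine to provide.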