In kilometer-sized particle accelerators, electric fields accelerate small bits of matter to nearly light speed. Those fast, powerful particle beams at facilities such as CERN and the SLAC National Accelerator Laboratory have let physicists discover fundamental particles such as the Higgs boson, generate X-rays that reveal the structure of matter, and produce radioisotopes for cancer therapies.

The largest particle accelerators are multibillion-dollar instruments, available to only a few scientists. But if researchers could shrink the devices to room size, more researchers could use them, even in hospitals.

Compact devices, however, require fields more than a thousand times stronger than those today’s accelerators produce, a challenge that demands plasma-based instruments and multiple scientific advances. Physicists need higher-quality simulations to unravel the complex plasma behavior involved, and an early project for the forthcoming Frontier exascale computer at the Department of Energy (DOE) Oak Ridge Leadership Computing Facility (OLCF) is helping a team refine an open-source code for the job. Besides advancing physics, the project lets computational scientists optimize critical software that will support teams as they simulate a range of problems on Frontier’s novel combination of AMD central and graphics processing units, or CPUs and GPUs.

This Center for Accelerated Application Readiness (CAAR) project focuses on optimizing an open-source plasma physics code, PIConGPU (particle-in-cell on GPU), for Frontier and is led by Sunita Chandrasekaran of the University of Delaware and Brookhaven National Laboratory. The project is a collaboration with researchers from the Helmholtz-Zentrum Dresden-Rossendorf (HZDR) in Germany and its Center for Advanced Systems Understanding, from Georgia Tech, and from the OLCF’s home base, Oak Ridge National Laboratory (ORNL).
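The particle-in-cell (PIC) method behind PIConGPU’s name alternates between particles and a grid: particle charges are deposited onto grid cells, fields are solved on the grid, and those fields are interpolated back to push the particles. A minimal sketch of one such cycle follows, assuming a 1D electrostatic plasma on a periodic grid in normalized units (ε0 = 1) with cloud-in-cell weighting. PIConGPU itself is a fully relativistic 3D electromagnetic code running on GPUs, so this is a simplification, and the function names are hypothetical.

```python
import numpy as np

def deposit_cic(x, q, n_cells, dx):
    """Cloud-in-cell deposition: share each particle's charge between its two nearest nodes."""
    s = x / dx                        # particle position in units of cells
    i = np.floor(s).astype(int)       # index of the node to the left
    w = s - i                         # fractional distance past that node
    rho = np.zeros(n_cells)
    np.add.at(rho, i % n_cells, q * (1.0 - w))
    np.add.at(rho, (i + 1) % n_cells, q * w)
    return rho / dx                   # charge per unit length on a periodic grid

def solve_field(rho, dx, eps0=1.0):
    """Solve Gauss's law dE/dx = rho/eps0 spectrally; mean charge acts as a neutralizing background."""
    k = 2.0 * np.pi * np.fft.fftfreq(rho.size, d=dx)
    rho_k = np.fft.fft(rho - rho.mean())
    E_k = np.zeros_like(rho_k)
    E_k[1:] = rho_k[1:] / (1j * k[1:] * eps0)   # the k = 0 mode carries no field
    return np.real(np.fft.ifft(E_k))

def gather_cic(E, x, dx):
    """Interpolate the grid field back to particle positions with the same weights."""
    s = x / dx
    i = np.floor(s).astype(int)
    w = s - i
    return E[i % E.size] * (1.0 - w) + E[(i + 1) % E.size] * w

def pic_step(x, v, q, m, n_cells, dx, dt):
    """One PIC cycle: deposit -> field solve -> gather -> leapfrog push (v at half steps)."""
    rho = deposit_cic(x, q, n_cells, dx)
    E = solve_field(rho, dx)
    v = v + (q / m) * gather_cic(E, x, dx) * dt
    x = (x + v * dt) % (n_cells * dx)           # periodic boundaries
    return x, v
```

Using the same weights for deposition and gathering is a common design choice in PIC codes because it keeps the particle-grid coupling consistent in both directions.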

A veteran of parallel programming model research, Chandrasekaran specializes in developing directives and compilers that ensure programming layers, the interlocking software “bricks” that bridge scientific algorithms and computer processors, efficiently distribute the calculations and communication between them. She has worked on SOLLVE, a project within the Exascale Computing Project, since 2017 and became its principal investigator in September.

Chandrasekaran and her colleagues are constantly constructing, optimizing and testing these software bridges because most of the DOE’s newest supercomputers combine CPUs with GPUs in different ratios and with processors from various manufacturers. Chandrasekaran has pursued many interdisciplinary science projects, modeling problems from nuclear reactor technology to biophysics on Summit, presently the OLCF’s top system. She knew Frontier would be next, and she was intrigued by the challenges that would come with it.

The machines differ greatly, Chandrasekaran notes. Summit’s nodes each combine two IBM CPUs with six NVIDIA GPUs, while Frontier’s all-AMD nodes each include four even more powerful GPUs and one CPU. “We’re really talking apples and oranges here,” she says. “We really need the proper software stack.”


PIConGPU was developed by Michael Bussmann’s HZDR team more than a decade ago to simulate high-energy physics problems while harnessing GPUs’ capacity to compute many tasks simultaneously. To keep the code running on varied hardware without constant adaptation to each architecture, the researchers use Alpaka, a C++ programming library that lets the same source code run efficiently across different systems. The HZDR has run PIConGPU successfully on other supercomputers, including OLCF’s Titan and Summit, studying plasma-based ion accelerators to produce tissue-penetrating beams for cancer therapy. (Work on Titan earned Gordon Bell Prize finalist honors in 2013.)
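Alpaka’s central idea is that a computational kernel is written once while the backend that runs it (for example CUDA on NVIDIA GPUs, HIP on AMD GPUs, or OpenMP or serial execution on CPUs) is chosen at compile time through C++ templates. The Python sketch below only mimics that separation, and at run time rather than compile time; `SerialBackend`, `ThreadPoolBackend`, `saxpy_kernel` and `saxpy` are hypothetical names, not part of Alpaka’s API.

```python
from concurrent.futures import ThreadPoolExecutor

class SerialBackend:
    """Runs the kernel once per index, one after another."""
    def launch(self, kernel, n, *args):
        for i in range(n):
            kernel(i, *args)

class ThreadPoolBackend:
    """Runs the same kernel concurrently on a pool of CPU threads."""
    def __init__(self, workers=4):
        self.workers = workers

    def launch(self, kernel, n, *args):
        with ThreadPoolExecutor(max_workers=self.workers) as pool:
            # list() waits for every task and re-raises any worker exception
            list(pool.map(lambda i: kernel(i, *args), range(n)))

def saxpy_kernel(i, a, x, y, out):
    """Written once, with no knowledge of which backend executes it."""
    out[i] = a * x[i] + y[i]

def saxpy(backend, a, x, y):
    out = [0.0] * len(x)
    backend.launch(saxpy_kernel, len(x), a, x, y, out)
    return out
```

In this arrangement, supporting a new processor means adding one backend rather than rewriting every kernel, which is roughly the property that makes a single-source code easier to move from one machine to the next.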

Frontier’s novel AMD GPU hardware, along with its interconnect and storage, differs from Titan’s and Summit’s. To meet those challenges, HZDR researchers approached Chandrasekaran because her work on programming directives meshed well with the Alpaka library, she says. “Alpaka has several backends. And I was already working on two or three of the backends.”

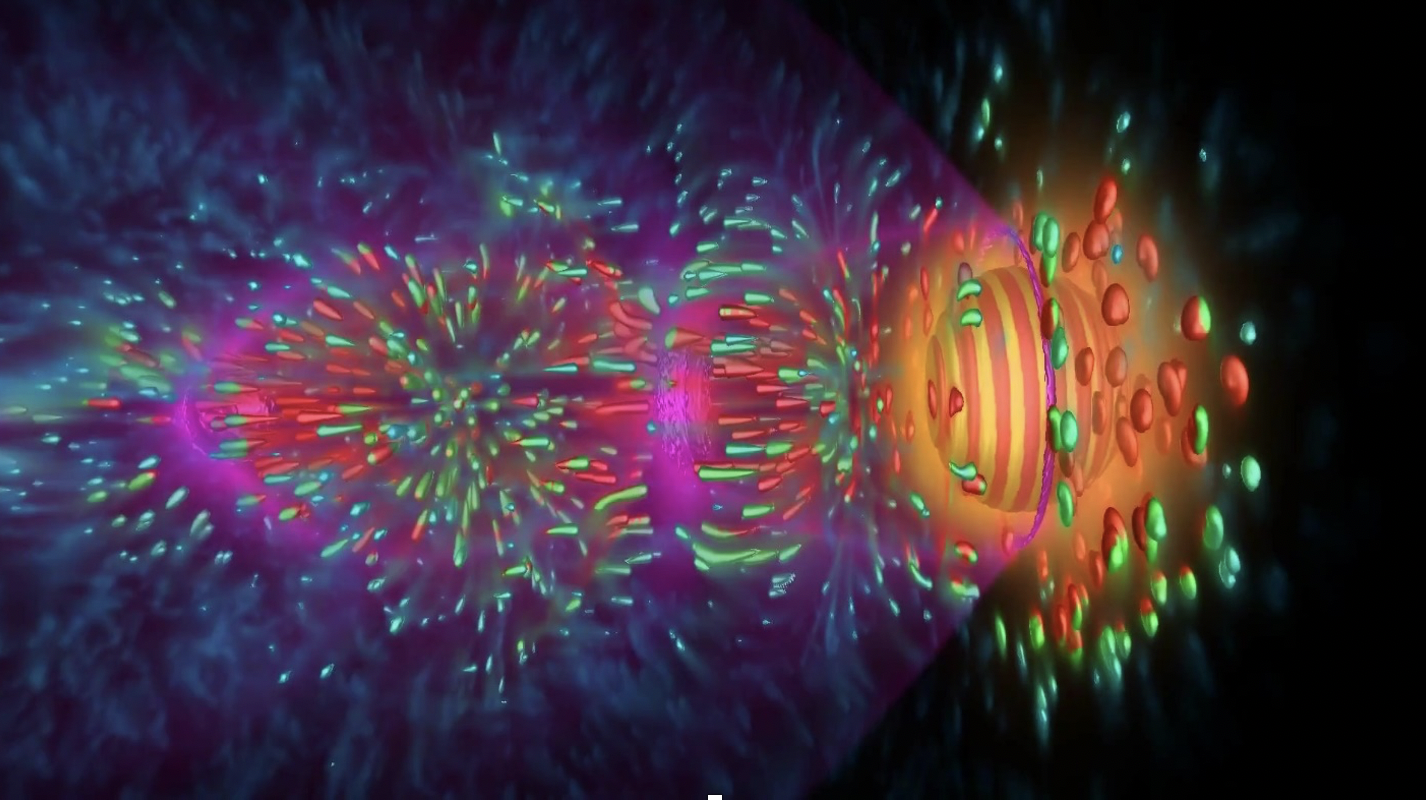

Frontier’s exascale power could provide key simulations to test a benchtop accelerator designed by Alexander Debus and his HZDR team. To shrink accelerators, scientists must use high-powered lasers to ionize gases and produce plasma. Within this plasma, ultrashort but intense laser pulses excite a wave traveling at nearly the speed of light. Such waves can sustain extreme electromagnetic fields. Particles injected into such a wave gain energy within centimeters, rather than over the kilometers conventional accelerators require.
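The centimeter scale follows from how strong a plasma wave’s field can get. A standard textbook estimate is the cold, nonrelativistic wave-breaking field E0 = m_e c ω_p / e, where ω_p = sqrt(n_e e² / (ε0 m_e)) is the electron plasma frequency, so the field grows with the square root of the plasma density. The sketch below evaluates it under assumptions that do not come from the article: a gas density of 10^18 electrons per cubic centimeter, typical of laser wakefield experiments, and a conventional radio-frequency gradient of 50 MV/m for comparison. It also treats the gradient as constant over the whole length, an idealization, since real devices sustain only a fraction of E0.

```python
import math

# Physical constants in SI units (CODATA 2018)
E_CHARGE = 1.602176634e-19   # elementary charge, C
M_E = 9.1093837015e-31       # electron mass, kg
C = 299_792_458.0            # speed of light, m/s
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m

def plasma_frequency(n_e_cm3):
    """Electron plasma frequency omega_p in rad/s for a density given in cm^-3."""
    n_e = n_e_cm3 * 1e6                      # cm^-3 -> m^-3
    return math.sqrt(n_e * E_CHARGE**2 / (EPS0 * M_E))

def wave_breaking_field(n_e_cm3):
    """Cold, nonrelativistic wave-breaking field E0 = m_e c omega_p / e, in V/m."""
    return M_E * C * plasma_frequency(n_e_cm3) / E_CHARGE

def length_for_gain(gain_eV, gradient_V_per_m):
    """Distance a singly charged particle needs to gain gain_eV at a constant gradient."""
    return gain_eV / gradient_V_per_m

E0 = wave_breaking_field(1e18)               # assumed density: 1e18 cm^-3
RF_GRADIENT = 50e6                           # assumed conventional RF gradient, V/m
print(f"plasma field ~ {E0 / 1e9:.0f} GV/m")
print(f"field ratio  ~ {E0 / RF_GRADIENT:.0f}x")
print(f"10 GeV needs ~ {length_for_gain(10e9, E0) * 100:.0f} cm of plasma, "
      f"{length_for_gain(10e9, RF_GRADIENT):.0f} m of RF structure")
```

Under these assumptions the plasma field comes out near 96 GV/m, nearly 2,000 times the assumed RF gradient, and a 10 GeV gain takes roughly 10 cm of plasma versus about 200 m of RF structure.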

Although many of these new designs rely on single laser pulses, Debus and his colleagues have developed an approach in which two extended pulses cross inside a gas stream to create an initial wave that remains synchronized with the accelerated particles without defocusing or dissipating. As a result, this two-laser-beam setup can be scaled to greater lengths to reach higher particle energies.

Those extensions could overcome a key challenge in building compact accelerators. Today’s laser-plasma accelerators peak at an energy of 10 GeV, but to put a CERN-comparable device in a room, the compact accelerators need particle energies a thousand times higher: 10 TeV. The work on Frontier will allow the HZDR physicists to build realistic models of their laser-plasma accelerators, providing critical insights to guide their theoretical and experimental work.

The CAAR team’s simulations also are pushing computational boundaries. Over the past two years, the team has run laser wakefield calculations on ever-larger developmental systems. In July, the team ran the largest simulation so far on Spock, ORNL’s pre-exascale test system, which uses AMD’s MI100 GPUs, just a step from Frontier’s MI200 chips. The results are on track toward the team’s goal of a fourfold speedup on Frontier.

On Spock, they’ve also demonstrated a visualization tool, ISAAC, to depict an energy pulse tearing through plasma and displacing electrons.

That tool is particularly important because of the size of these simulations and the volume of data they spew: tens of petabytes per experiment. As a result, the move toward exascale is reshaping the researchers’ approaches to data management, analysis and storage, Bussmann says, with insights gleaned from data streams in real time rather than from data written to disk. The goal, he says, is not to “keep all of that data but keep the understanding of the data.”
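One way to picture keeping “the understanding of the data” is a streaming reduction. The sketch below assumes, for illustration only, that the quantity of interest is a particle energy spectrum: each time step’s energies are folded into a fixed-size histogram plus a running count, mean and maximum, and the raw array is then discarded. `InSituSpectrum` and its methods are hypothetical; this is not the API of ISAAC or of PIConGPU, and in-situ analysis in a production code would cover far more than one histogram.

```python
import numpy as np

class InSituSpectrum:
    """Hypothetical in-situ reducer: accumulates a particle-energy histogram
    and a few summary statistics so raw per-particle data can be discarded."""

    def __init__(self, e_min, e_max, n_bins):
        self.edges = np.linspace(e_min, e_max, n_bins + 1)
        self.counts = np.zeros(n_bins, dtype=np.int64)
        self.n = 0
        self.total = 0.0
        self.max_energy = -np.inf

    def consume(self, energies):
        """Fold one time step's energies into the summary."""
        energies = np.asarray(energies, dtype=float)
        if energies.size == 0:
            return
        # Energies outside [e_min, e_max] are left out of the histogram
        # but still count toward n, the mean and the maximum.
        hist, _ = np.histogram(energies, bins=self.edges)
        self.counts += hist
        self.n += energies.size
        self.total += float(energies.sum())
        self.max_energy = max(self.max_energy, float(energies.max()))

    @property
    def mean(self):
        return self.total / self.n if self.n else float("nan")

# Usage: a stand-in for a simulation loop that produces particle data each step.
rng = np.random.default_rng(1)
spectrum = InSituSpectrum(0.0, 10.0, n_bins=20)
for step in range(100):
    energies = rng.exponential(scale=1.0, size=100_000)   # synthetic, not physics
    spectrum.consume(energies)                            # raw array is dropped after this
```

The loop sees ten million values but keeps only 20 bin counts and three numbers, and that footprint stays fixed however many steps run. The trade-off is that any question not anticipated when the reduction was chosen, such as a different binning, cannot be answered afterward.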