This research team wants to make literal earthshaking discoveries every day.

“Earthquakes are a tremendous societal problem,” says David McCallen, a senior scientist at the U.S. Department of Energy’s Lawrence Berkeley National Laboratory who heads the Earthquake Simulation (EQSIM) project. “Whether it’s the Pacific Northwest or the Los Angeles Basin or San Francisco or the New Madrid Zone in the Midwest, they’re going to happen.”

A part of the Department of Energy’s Exascale Computing Project, the EQSIM collaboration comprises researchers from Berkeley Lab, Lawrence Livermore National Laboratory and the University of Nevada, Reno.

The San Francisco Bay area serves as EQSIM’s subject for testing computational models of the Hayward fault. Considered a major threat, the steadily creeping fault runs throughout the East Bay area.

“If you go to Hayward and look at the sidewalks and the curbs, you see little offsets because the earth is creeping,” McCallen says. As the earth moves it stores strain energy in the rocks below. When that energy releases, seismic waves radiate from the fault, shaking the ground. “That’s what you feel when you feel an earthquake.”

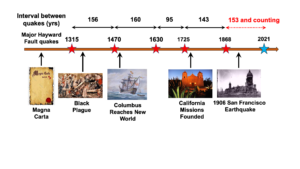

The Hayward fault ruptures every 140 or 150 years, on average. The last rupture came in 1868 – 153 years ago.

Historically speaking, the Bay Area may be due for a major earthquake along the Hayward Fault. Image courtesy of USGS.

“Needless to say, we didn’t have modern seismic instruments measuring that rupture,” McCallen notes. “It’s a challenge having no data to try to predict what the motions will be for the next earthquake.”

That data dearth led earth scientists to try a work-around. They assumed that data taken from earthquakes elsewhere around the world would apply to the Hayward fault.

That helps to an extent, McCallen says. “But it’s well-recognized that earthquake motions tend to be very specific in a region and at any specific site as a result of the geologic setting.” That has prompted researchers to take a new approach: focusing on data most relevant to a specific fault like Hayward.

“If you have no data, that’s hard to do,” McCallen says. “That’s the promise of advanced simulations: to understand the site-specific character of those motions.”

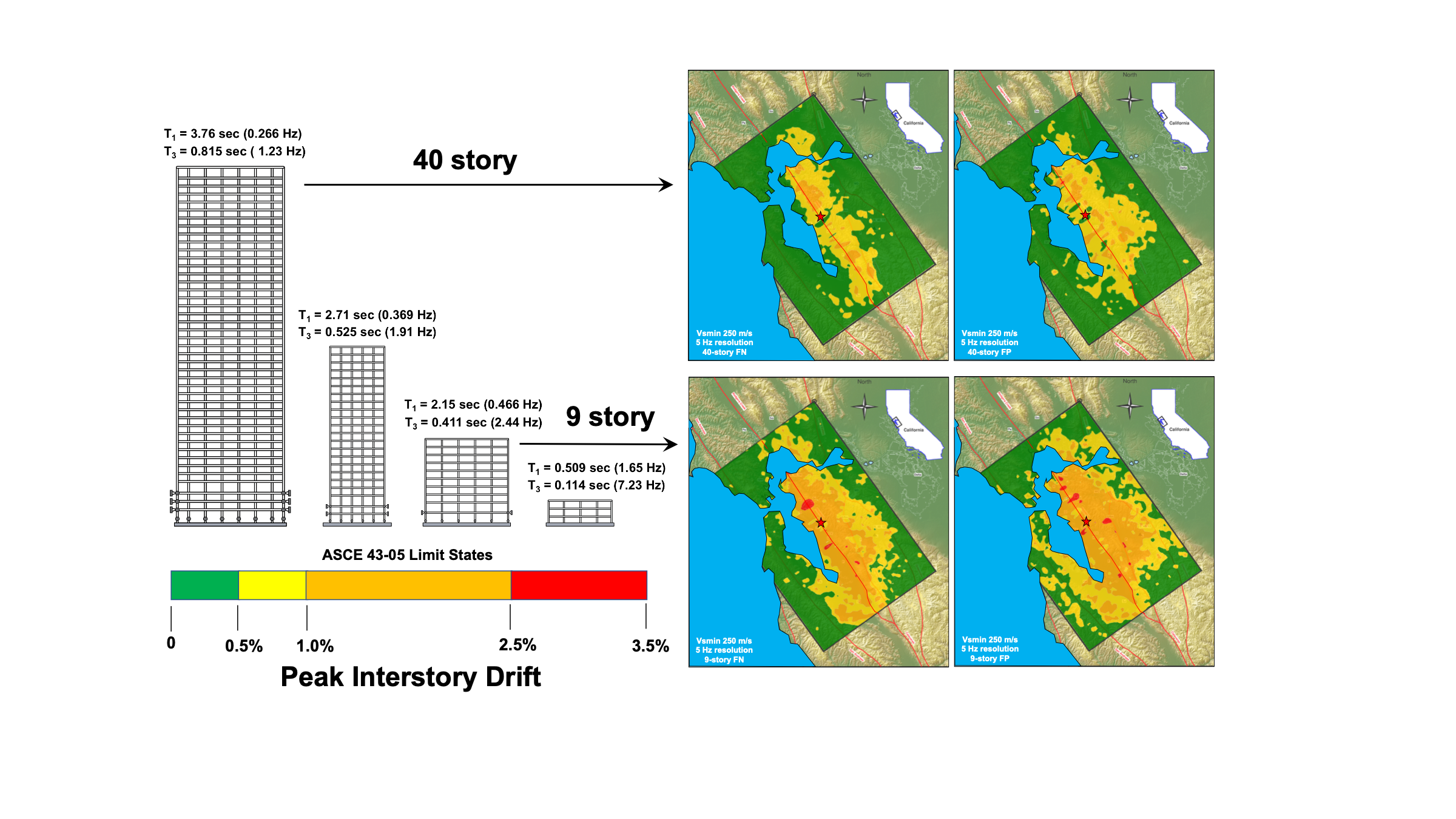

Part of the project has advanced earthquake models’ computational workflow from start to finish. This includes syncing regional-scale models with structural ones to refine earthquake wave forms’ three-dimensional complexity as they strike buildings and infrastructure.

“We’re coupling multiple codes to be able to do that efficiently,” McCallen says. “We’re at the phase now where those advanced algorithm developments are being finished.”

Developing the workflow is challenging because every step must be efficient and effective. The software tools that DOE is developing for exascale platforms have helped optimize EQSIM’s ability to store and retrieve massive datasets.

The process includes creating a computational representation of Earth that may contain 200 billion grid points. (If those grid points were seconds, that would equal roughly 6,300 years.) With simulations this size, McCallen says, inefficiencies become obvious immediately. “You really want to make sure that the way you set up that grid is optimized and matched closely to the natural variation of the Earth’s geologic properties.”
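For readers who want to check the seconds-to-years comparison, the conversion can be sketched in a few lines of Python. The 365.25-day year is an assumption made here, and the names `GRID_POINTS`, `SECONDS_PER_YEAR` and `seconds_to_years` are purely illustrative. The result comes out a little over 6,300 years, in the same ballpark as the figure in the parenthetical.

```python
# Convert a count of seconds into years.
# Assumption: a 365.25-day (Julian) year; the article does not say which year length it used.
GRID_POINTS = 200e9  # grid-point count quoted in the article
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # 31,557,600 seconds


def seconds_to_years(seconds: float) -> float:
    """Return the number of years spanned by the given number of seconds."""
    return seconds / SECONDS_PER_YEAR


years = seconds_to_years(GRID_POINTS)
print(f"{years:,.0f} years")
```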


The project’s earthquake simulations cut across three disciplines. The process starts with seismology. That covers the rupture of an earthquake fault and seismic wave propagation through highly varied rock layers. Next, the waves arrive at a building. “That tends to transition into being both a geotechnical and a structural-engineering problem,” McCallen notes. Geotechnical engineers can analyze quake-affected soils’ complex behavior near the surface. Finally, seismic waves impinge upon a building and the soil island that supports it. That’s the structural engineer’s domain.

EQSIM researchers have already improved their geophysics code’s performance to simulate Bay Area ground motions at a regional scale. “We’re trying to get to what we refer to as higher-frequency resolution,” McCallen says. “We want to generate the ground motions that have the dynamics in them relevant to engineered structures.”

Early simulations at 1 or 2 hertz – vibration cycles per second – couldn’t approximate the ground motions at 5 to 10 hertz that rock buildings and bridges. Using the Oak Ridge National Laboratory’s Summit supercomputer, EQSIM has now surpassed 5 hertz for the entire Bay Area. More work remains to be done at the exascale, however, to simulate the area’s geologic structure at the 10-hertz upper end.
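A rough sense of why each step up in frequency is so expensive comes from a common rule of thumb for grid-based wave simulations, which the article itself does not state. Resolving a wave twice as short needs a grid twice as fine in each of three dimensions and about twice as many time steps, so cost grows with roughly the fourth power of frequency. The sketch below assumes that scaling; `relative_cost` is a hypothetical helper, not part of SW4 or EQSIM.

```python
def relative_cost(f_target_hz: float, f_base_hz: float) -> float:
    """Relative compute cost of a run at f_target_hz versus f_base_hz.

    Assumption: cost scales as frequency**4 (three spatial dimensions
    plus time), a rule of thumb rather than a measured EQSIM figure.
    """
    ratio = f_target_hz / f_base_hz
    grid_points = ratio ** 3  # finer spacing in x, y and z
    time_steps = ratio        # shorter time step to match the finer grid
    return grid_points * time_steps


for f in (2, 5, 10):
    print(f"{f:>2} Hz costs about {relative_cost(f, 1):,.0f}x a 1 Hz run")
```

Under this assumption, moving from 5 to 10 hertz multiplies the cost by about 16, which fits the article's point that the 10-hertz upper end calls for exascale machines.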

Livermore’s SW4 code for 3-D seismic modeling served as EQSIM’s foundation. The team boosted the code’s speed and efficiency to optimize performance on massively parallel machines, which deploy many processors to perform multiple calculations simultaneously. Even so, an earthquake simulation can take 20 to 30 hours to complete. The team hopes to reduce that time by harnessing the full power of the exascale platforms, capable of a quintillion operations a second, that DOE is completing this year at its leadership computing facilities. The first exascale systems will operate at 5 to 10 times the capability of today’s most powerful petascale systems.

The potential payoff, McCallen says: saved lives and reduced economic loss. “We’ve been fortunate in this country in that we haven’t had a really large earthquake in a long time, but we know they’re coming. It’s inevitable.”