George Fann says there’s no hidden meaning behind the name he and his fellow researchers chose for their scientific software framework. MADNESS was simply a catchy acronym for Multiresolution Adaptive Numerical Environment for Scientific Simulation and, perhaps, a reflection of the project’s ambitious nature.

Unusual name aside, MADNESS could cause a stir in the scientific modeling community. The mathematical methods behind it could allow scientists to attack problems previously considered computationally impossible. They also could let scientists solve such problems with a previously unattainable level of precision.

Fann, a senior researcher at the Department of Energy’s Oak Ridge National Laboratory, is collaborating on the project with University of Colorado applied mathematician Gregory Beylkin and Robert Harrison, leader of Oak Ridge’s Computational Chemical Sciences Group.

The work, supported by DOE’s Office of Advanced Scientific Computing Research through the Scientific Discovery through Advanced Computing (SciDAC) program, could have uses in energy technology, drug development, and other fields.

The researchers are applying the mathematical methods encompassed in MADNESS to computational chemistry with a focus on solving the electronic structures of atoms, molecules and nanoscale systems. They’re also preparing their models to run on the next generation of high-performance computers.

Scientists running such models often must limit their size. The algorithms – mathematical recipes computers use – that are practical in one dimension demand too much computer time for high-accuracy applications in the multiple dimensions needed to represent big systems of many atoms or molecules.

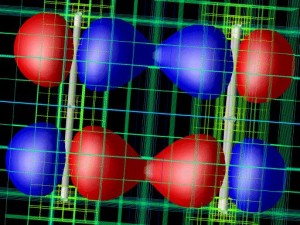

A molecular orbital of the benzene dimer computed using MADNESS, visualized with the adaptive cubes displayed.

To do such computations, “usually you’re using a reduced model so you actually compute in three dimensions (instead of more) and you’re assuming certain things,” Fann says. The mathematical methods Beylkin, Fann, and Harrison created can compute structures in multiple dimensions – Beylkin has run model calculations on as many as 30 – faster and more precisely. That can help scientists simulate or predict experiments, letting them focus on the most promising areas.

Chemistry and materials science were good matches to test their methods, Fann says. They’re “rich in high-dimensional problems and they want very high precision,” he adds. “The scientists write the equations, and we’re writing the math for solving those equations.”

That math relies on approximating both mathematical expressions and the operations performed on them through a sequence of spatial scales. “It actually allows us to finely resolve points of interest through increasing details,” Fann says. “The difference is that we actually compute at one level, but for subsequent levels we keep track of the differences, so we only add in the additional information or improvements to the resolution.”
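The idea Fann describes, computing once at a coarse level and then storing only the differences needed to reach each finer level, can be sketched with the simplest possible scheme: repeated pairwise averaging. This is an illustrative toy using a Haar-style decomposition, not the MADNESS code or the basis functions it actually uses; the function names `haar_decompose` and `haar_reconstruct` are hypothetical.

```python
def haar_decompose(samples):
    """Split samples (length must be a power of two) into a single coarse
    average plus the differences needed to recover each finer level."""
    details = []
    current = list(samples)
    while len(current) > 1:
        pairs = [(current[i], current[i + 1]) for i in range(0, len(current), 2)]
        averages = [(left + right) / 2 for left, right in pairs]
        differences = [(left - right) / 2 for left, right in pairs]
        details.append(differences)
        current = averages
    details.reverse()  # store the coarsest level's differences first
    return current[0], details


def haar_reconstruct(coarse, details, levels=None):
    """Rebuild the signal by adding difference levels back in, coarsest first.
    Passing levels=k stops after k refinements, giving a lower-resolution view."""
    current = [coarse]
    for differences in details[:levels]:
        finer = []
        for average, difference in zip(current, differences):
            finer.extend([average + difference, average - difference])
        current = finer
    return current
```

For the eight samples 4, 2, 5, 5, 1, 3, 0, 4, the coarse value is 3. Adding one level of differences gives the two-point view 4, 2, and adding all three levels restores the original samples exactly. Wherever the differences are zero (or small enough to ignore), nothing extra needs to be stored or computed, which is where the efficiency comes from.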

The ability to compute at different levels of detail means researchers can choose to focus their simulations on areas that interest them most. This multiresolution approach – using coarse-scale models that adapt to capture fine-scale detail – also uses computer power more efficiently.

Fann compares it to looking at a picture. You can see the whole image, but if something important catches your eye, you’ll focus on that. As you examine it more closely, your eyes gloss over the rest of the image.

The MADNESS algorithms also let researchers choose how precise they want the calculations to be. “We go to the level of detail necessary to get the precision,” Fann says. That’s especially important in molecular chemistry, where scientists compare energy levels – the arrangement of electrons in atoms, molecules and other structures. The differences can be very fine, requiring as many as 12 decimal places of accuracy, Fann says.
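Refining only as far as a requested precision demands can be illustrated in one dimension. The sketch below is a toy under stated assumptions, not the MADNESS refinement rule: it splits an interval in half whenever a straight line between the endpoints misses the function at the midpoint by more than a tolerance. The function name `adaptive_intervals`, the midpoint error check, and the sharply peaked test function are all choices made for this example.

```python
import math


def adaptive_intervals(f, a, b, tol, max_depth=30):
    """Return a list of (left, right) intervals covering [a, b].
    An interval is accepted when linear interpolation between its endpoints
    matches f at the midpoint to within tol (a crude, illustrative error
    estimate); otherwise it is split in half and each half is checked again."""
    mid = (a + b) / 2
    interpolated = (f(a) + f(b)) / 2
    if max_depth == 0 or abs(f(mid) - interpolated) <= tol:
        return [(a, b)]
    left = adaptive_intervals(f, a, mid, tol, max_depth - 1)
    right = adaptive_intervals(f, mid, b, tol, max_depth - 1)
    return left + right


def peaked(x):
    """Test function with a sharp feature at x = 0 and little detail elsewhere."""
    return math.exp(-20 * abs(x))


coarse_grid = adaptive_intervals(peaked, -1.0, 1.0, tol=1e-3)
fine_grid = adaptive_intervals(peaked, -1.0, 1.0, tol=1e-6)
```

With this test function, the intervals crowd around the sharp feature at zero and stay wide near the edges, and tightening the tolerance from `1e-3` to `1e-6` produces more intervals. The user chooses the precision, and the amount of detail follows from it.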

The computational chemistry algorithms Fann and Beylkin have developed with Harrison “scale linearly” – they allow scientists to model larger and larger systems without sacrificing speed or precision. In addition, they are especially well adapted for parallel processing, the approach that lets high-performance computers run thousands of processors at once.

“We’ve demonstrated that it scales up to at least 4,000 processors,” Fann says. His latest research, through SciDAC, is aimed at preparing the algorithms to run on the petascale computers coming on line in the next few years – computers capable of one quadrillion calculations per second. That’s almost 3.5 times faster than today’s fastest computer.

The programming tools that run underneath MADNESS will be released in late March or early April 2007, Fann says. A general release of the mathematics and operators is slated for September 2007.

There’s still work to be done, however. The researchers must collaborate with scientists in each field to adapt the code for their use. The code currently handles only time-independent problems – taking into consideration only space, not changes over time. And Fann and his colleagues believe they can make their algorithms run as much as three times faster than they do now.

“Speeding up the code is one of our goals but it’s not the main goal,” Fann says. “The main focus is on extending the approach to problems with more complicated mathematics. For example, introducing boundary conditions for arbitrary regions, without losing the high order of accuracy, allows our methods to solve more difficult problems. Another example is taking advantage of multiresolution representation for time-evolving problems.”

He adds, “It all starts with the math,” but “if we don’t get the mathematical representations and algorithms right, and then the parallel computing, it’s not going to work. … Without the scientists, Robert Harrison and others, we would not have been able to get” the physics, chemistry and other factors right. The practical demands of those computational scientists also help steer the math research in relevant directions.