As Stephen Hawking once said, “the brain is essentially a computer.” No other thinking machine gets mentioned more as the gold standard, especially when it comes to energy efficiency and tasks such as image recognition. As such, some researchers have taken up the quest for neuromorphic, or brain-inspired, computing – “a set of computer architectures that are attempting to mimic or take inspiration from biological features of the brain,” says Brian Van Essen, computer scientist at the Center for Applied Scientific Computing at the Department of Energy’s Lawrence Livermore National Laboratory (LLNL).

Whether building a device that hews closely to the brain or creating one with a more abstract connection, computer scientists tend to emulate a set of brain features in their designs. The first is a spatial architecture, with memory distributed throughout the processor so it’s close to computation. Second, brains work at relatively low frequencies, and “lots of neuromorphic designs are pushing toward slower speeds – not pushing the clock frequency as fast as possible,” Van Essen says. Third, neuromorphic machines run asynchronously, without a master clock, so data propagate as needed. Finally, many architectures in this area use low-resolution coding, another aspect typical of brain structures.
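To make the last of those features concrete, here is a minimal sketch of one way to read "low-resolution coding": storing a value with only a few bits instead of full floating-point precision. The function name `quantize`, the value range and the bit widths are illustrative assumptions, not a description of any particular chip.

```python
def quantize(value: float, bits: int, lo: float = -1.0, hi: float = 1.0) -> float:
    """Snap `value` to the nearest of 2**bits evenly spaced levels in [lo, hi].

    Hypothetical helper for illustration; real neuromorphic hardware
    may encode values quite differently.
    """
    levels = 2 ** bits - 1              # number of steps between lo and hi
    step = (hi - lo) / levels           # spacing between adjacent levels
    clipped = min(hi, max(lo, value))   # keep the value inside the range
    return lo + round((clipped - lo) / step) * step


if __name__ == "__main__":
    # Fewer bits means a coarser approximation of the same value.
    for bits in (1, 2, 4, 8):
        print(bits, quantize(0.3, bits))
```

With one bit the value can only land on either end of the range; each extra bit halves the spacing between levels, trading storage and energy for precision.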

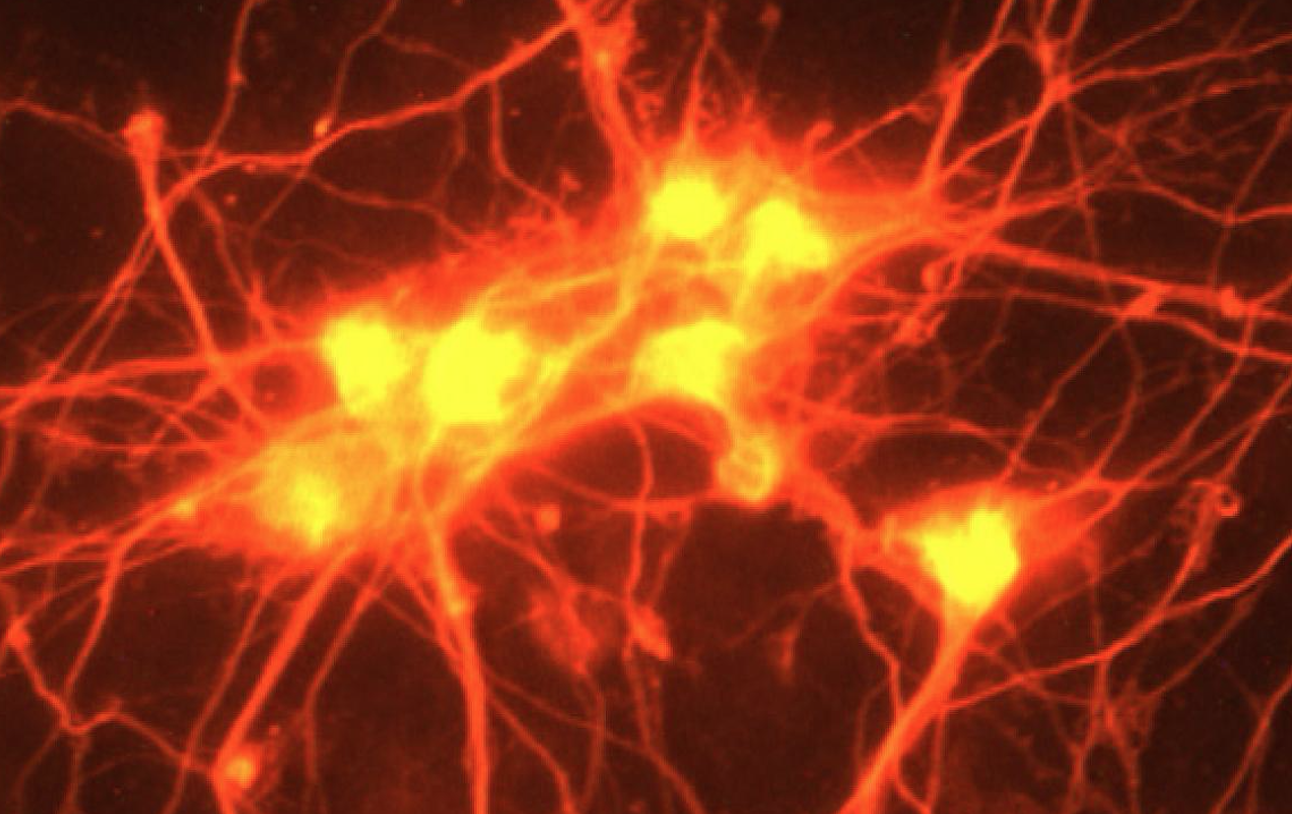

Connectivity is a key element. Brains rely on very high levels, with an estimated 10,000 links per neuron. In neuromorphic computing, Van Essen says, connectivity is where capability varies the most. Hardware implementations do not approach brain-like connection levels, but some software-based approaches can.

LLNL researchers are evaluating a number of neuromorphic architectures, Van Essen says. One is IBM’s NS16e TrueNorth processor. This device includes 16 million neuron-like elements and 4 billion synapses, the junctions between neurons. That works out to about 250 connections per neuron. “That’s not as highly connected as some,” Van Essen points out, but “IBM is very focused on capability and optimization that work well in today’s [computer] architectures.”
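The connectivity figure is simple arithmetic on the numbers quoted above. The short sketch below reproduces it and compares it with the estimated 10,000 links per neuron in the brain; the constant and function names are illustrative only.

```python
def connections_per_neuron(synapses: int, neurons: int) -> float:
    """Average number of synapses available to each neuron."""
    return synapses / neurons


# Figures quoted in the article for the NS16e TrueNorth processor.
TRUENORTH_NEURONS = 16_000_000
TRUENORTH_SYNAPSES = 4_000_000_000
# Estimated links per neuron in the brain, also from the article.
BRAIN_LINKS_PER_NEURON = 10_000

truenorth_links = connections_per_neuron(TRUENORTH_SYNAPSES, TRUENORTH_NEURONS)
brain_to_chip_ratio = BRAIN_LINKS_PER_NEURON / truenorth_links

print(truenorth_links)      # 250.0
print(brain_to_chip_ratio)  # 40.0
```

By this rough measure, a brain neuron has about 40 times as many connections as a neuron-like element on the chip.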

The LLNL team is testing this processor because it is a “fairly scalable implementation, where we can put reasonable-size problems on it,” Van Essen says. “This processor is more biological inspiration than biological mimicry.”

TrueNorth takes on some of the typical brain-like features neuromorphic chips mimic. For example, its spatially distributed architecture is asynchronous and runs on low power – just 2.5 watts.

This processor provides other desirable features, Van Essen explains. Applications designed with traditional deep-learning neural networks can be mapped on its architecture “with fairly good results.”

In systems based more on biomimicry, computer scientists can explore neurogenic processes like spike-timing-dependent plasticity (STDP), which controls the strength of connections between neurons based on the electrical activity, or spikes, before and after a synapse. “We know the brain uses STDP and other neurogenic effects where connections get strengthened based on use and forge new connections,” Van Essen says. “It’s really interesting, but it’s hard to design a task based on those techniques.”
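For readers who want to see the idea in code, here is a minimal sketch of a common textbook form of STDP, the pair-based exponential rule. It is not the method used by the LLNL team or by TrueNorth, and the function names, learning rates and time constants are all assumed values chosen for illustration.

```python
import math


def stdp_weight_change(dt_ms: float,
                       a_plus: float = 0.01,
                       a_minus: float = 0.012,
                       tau_plus_ms: float = 20.0,
                       tau_minus_ms: float = 20.0) -> float:
    """Weight change for one spike pair, where dt_ms = t_post - t_pre.

    All parameter values are illustrative assumptions.
    """
    if dt_ms > 0:
        # Input spike came first: strengthen the connection.
        return a_plus * math.exp(-dt_ms / tau_plus_ms)
    if dt_ms < 0:
        # Output spike came first: weaken the connection.
        return -a_minus * math.exp(dt_ms / tau_minus_ms)
    return 0.0


def apply_stdp(weight: float, pre_spikes_ms, post_spikes_ms,
               w_min: float = 0.0, w_max: float = 1.0) -> float:
    """Update one synaptic weight over every pre/post spike pair."""
    for t_pre in pre_spikes_ms:
        for t_post in post_spikes_ms:
            weight += stdp_weight_change(t_post - t_pre)
            weight = min(w_max, max(w_min, weight))  # keep weight in bounds
    return weight


if __name__ == "__main__":
    # Input fires 5 ms before output: the weight rises above 0.5.
    print(apply_stdp(0.5, pre_spikes_ms=[10.0], post_spikes_ms=[15.0]))
    # Input fires 5 ms after output: the weight falls below 0.5.
    print(apply_stdp(0.5, pre_spikes_ms=[15.0], post_spikes_ms=[10.0]))
```

The closer together the two spikes fall, the larger the change, so connections that reliably help a neuron fire are reinforced while others fade.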

Some scientists are exploring learning and memory mechanisms in the brain, which could lead to new computing approaches. (See sidebar, “Modeling Memories.”) Methods the brain uses to store or analyze information, however, do not always translate readily into computer hardware or software.

The LLNL team’s key question: Can neuromorphic architecture make an impact on the lab’s work? “Can we do it at a reasonable scale?” Van Essen asks. “We’re looking at this from a purely scientific research point of view.”

Those questions led them to explore the TrueNorth processor. Van Essen says their approach is dictated by practical considerations. “The architecture was realized in silicon, and we could buy research hardware to tackle a project at scale.” For certain problems, “neuromorphic computing provides the potential for very low-power solutions.”

In fact, his team mapped several applications to the TrueNorth processor to test it. “In some examples, we realized the solutions that we hoped for,” he says, “but it remains an open question if we can get much higher performance in general.”

Much of the LLNL group’s early work examined the complexity of mapping applications on the new hardware and met with early success. For instance, the researchers worked on image-processing applications, and developed neural networks that map well to TrueNorth. “It’s a promising result,” Van Essen says. “We came up with good solutions, reasonably quickly in development time, and they provided good accuracy compared to traditional approaches.” Plus, these solutions used little power.

For some of these applications, the team must develop capabilities that TrueNorth lacks. For example, Van Essen’s group explored some computing processes that required machine learning. “That was interesting because the required machine-learning structures don’t exist in the TrueNorth ecosystem,” Van Essen says. So, his team is working on solving that. “We’ve had good progress there,” he says, but more remains to be done.

It will be some time before scientists know how far they can get with neuromorphic techniques. Maybe the most brain-like approaches will work the best, or computer scientists might get better results from architectures that resemble rather than replicate neural circuits and processes. Progress in neurobiology and computer science could eventually combine to yield energy-efficient computation, addressing one of the remaining stumbling blocks to advancing processor performance.