In the supercomputers of yore, “people wanted the operating system to just get out of the way,” says Pete Beckman, a senior computer scientist at Argonne National Laboratory and co-director of the Northwestern Argonne Institute for Science and Engineering.

Beckman recalls complaints about slowdowns in application runtime while the OS handled mundane but necessary behind-the-scenes tasks. Frustrated with this OS noise, users called for a “thin, lightweight operating system,” he says.

Now, a re-tooled OS may be key to making high-performance computing (HPC) systems run more smoothly and cost-effectively. As HPC approaches exascale – a million trillion computations per second – the field must achieve new heights in efficiency. For years, hardware architects and application developers treated power, memory and processor time as abundant resources. They focused on building machines and applications. Conserving those resources was a secondary concern at best.

As today’s high-performance machines take on simulations of climate, the universe, interactions of subatomic particles and other demanding computational challenges, they must deal with increasing volumes of data to delve into ever more granular detail. Such simulations demand large amounts of energy, memory and processor time. These resources must be managed at unprecedented levels.

To fill this management need, the humble OS will have to assume new roles in synchronizing and coordinating tasks. “The operating system has to adapt and be more than a node-operating system. It has to be a global operating system, managing these resources across the entire machine in clumps of cores and clumps of nodes,” Beckman says.

He’s working toward giving the OS what he calls “a new view on life.” He leads Argo, a Department of Energy project at Argonne aimed at developing the prototype for an open-source OS that will work on any type of high-performance machine made by any vendor and will allow users to mix and match modular OS components to suit their needs.

The Argo team comprises 25 collaborators at three national laboratories (Argonne, Lawrence Livermore and Pacific Northwest) and four universities (University of Chicago, University of Illinois at Urbana-Champaign, University of Oregon and University of Tennessee). They’re developing modifications to Linux that will let the OS manage power throughout a high-performance machine, reduce the time and energy spent writing data to disk and reading them back, let applications share idle processing power during runtime, and create a means of executing smaller tasks without invoking a power-greedy process for each.

Although tradition holds that users can’t mess with the OS, Beckman says those days will soon end. “Users need the interfaces to manage the OS directly,” he says. “Argo manages the node-operating system, and it manages the global synchronization and coordination of all the components.”

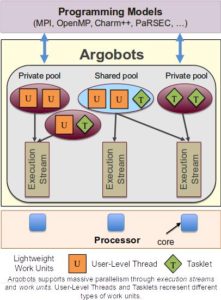

The project’s first product to become available is called Argobots. This runtime layer allows users to invoke small tasks for parallel execution without creating a process for each. The user creates a container to hold the work and a scheduler executes it. “You could run literally tens of thousands of lightweight threads without having this big overhead of creating a whole process,” Beckman says. Invoked by the user, Argobots provides local control over constrained resources.

A schematic of how Argobots, Argo’s first product, works. Argobots is a runtime layer that allows users to invoke small tasks for parallel execution without creating a process for each. The user creates a container to hold the work and a scheduler executes it. Image courtesy of Pete Beckman, Argonne National Laboratory.
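The idea of a container of small tasks drained by a scheduler can be illustrated with a short Python sketch. This is not the Argobots API; the names `work_unit` and `run_pool` are hypothetical, and Python generators stand in for lightweight threads. It only shows, under those assumptions, how tens of thousands of tasks can share one process.

```python
from collections import deque


def work_unit(task_id, steps, log):
    """A lightweight task: a generator that yields control after each step."""
    for step in range(steps):
        log.append((task_id, step))
        yield  # hand control back to the scheduler


def run_pool(pool):
    """Round-robin scheduler: resume each task in turn until all have finished."""
    resumptions = 0
    while pool:
        task = pool.popleft()
        try:
            next(task)          # run the task up to its next yield
            pool.append(task)   # not finished yet, so requeue it
            resumptions += 1
        except StopIteration:
            pass                # task completed, drop it from the pool
    return resumptions


# The "container" is a plain queue holding 10,000 tasks in a single process.
log = []
pool = deque(work_unit(i, 3, log) for i in range(10_000))
resumed = run_pool(pool)
```

Each task here costs one generator object rather than an operating-system process, which is the kind of overhead the quote above refers to.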

The Argo team is still experimenting with features that manage resources globally. To direct power, it’s developed a concept that runs counter to the traditional view, which Beckman characterizes as “just give the whole machine to the application.” Argo contains a hierarchical system for sequestering nodes in groups, which the team calls enclaves. At the global level, the OS assigns tasks to individual enclaves, which then run separately from the rest of the machine, leaving other enclaves free for other work. Ongoing communications up and down the hierarchy inform the global level when an enclave has completed its task and is available for more work.
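A toy model may make the enclave hierarchy easier to picture. The sketch below is an illustration only, not Argo code: the classes `Enclave` and `GlobalOS`, their methods and the task names are all hypothetical. It shows a global level handing tasks to idle enclaves and learning, through a report sent back up, when an enclave is free again.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Enclave:
    """A group of nodes that runs one task apart from the rest of the machine."""
    name: str
    nodes: list
    current_task: Optional[str] = None

    def is_free(self):
        return self.current_task is None


@dataclass
class GlobalOS:
    """The global level: assigns tasks to enclaves and tracks completions."""
    enclaves: list
    completed: list = field(default_factory=list)

    def assign(self, task):
        """Give the task to the first idle enclave; return None if all are busy."""
        for enclave in self.enclaves:
            if enclave.is_free():
                enclave.current_task = task
                return enclave
        return None

    def report_done(self, enclave):
        """Message up the hierarchy: the enclave finished and is available."""
        self.completed.append((enclave.name, enclave.current_task))
        enclave.current_task = None


machine = GlobalOS([
    Enclave("enclave-a", nodes=[0, 1, 2, 3]),
    Enclave("enclave-b", nodes=[4, 5, 6, 7]),
])
first = machine.assign("climate-run")
second = machine.assign("particle-run")
third = machine.assign("cosmology-run")   # both enclaves are busy here
machine.report_done(first)                # enclave-a reports completion
retry = machine.assign("cosmology-run")   # now lands on enclave-a
```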

Enclaves also conserve CPU time and memory. To further rein in these resources, Argo will enable nodes to execute data reduction at the same time they generate the data, avoiding the need to write data to disk only to read it back for evaluation. To demonstrate how the system enables this in situ data analysis, the team statically dedicated a group of nodes to run a simulation. As it ran, its need for computing resources fluctuated. At the same time, the OS dynamically pressed the cores’ unused hardware into service for data analytics.
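The difference between in situ analysis and a write-then-read workflow can be shown in miniature. In this sketch, which assumes a stand-in `simulate` generator and a hypothetical `reduce_in_situ` function rather than anything from Argo, each timestep is folded into running statistics the moment it is produced, so the full dataset is never stored or read back.

```python
def simulate(steps, cells):
    """Stand-in simulation: yield one timestep of values at a time."""
    for t in range(steps):
        yield [float(t * cells + c) for c in range(cells)]


def reduce_in_situ(timesteps):
    """Fold each timestep into running statistics as soon as it is produced."""
    count, total, peak = 0, 0.0, float("-inf")
    for values in timesteps:
        count += len(values)
        total += sum(values)
        peak = max(peak, max(values))
    return {"count": count, "mean": total / count, "max": peak}


# Only one timestep is held in memory at any moment; nothing touches disk.
summary = reduce_in_situ(simulate(steps=100, cells=1000))
```

The reduction keeps three numbers regardless of how many timesteps the simulation emits, which is where the savings in storage traffic would come from.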

The Argo team is making several of its experimental OS modifications available. Beckman expects to test them on large machines at Argonne and elsewhere in the next year. Now that the project’s components are coming together, there are interesting challenges ahead, he notes. For example, the team needs large test beds, but “the ability to try out new algorithms, new mechanisms, new pieces, on the freshest, newest hardware, is always a bit of a challenge.” Members of the system software community “need to be able to get in there and modify critical pieces and watch it with a power meter. Those kinds of test beds are still difficult to set up and configure and manage.”

A longer-term challenge may be that users who are comfortable downloading new applications are wary of trying modified operating systems. “That’s something that we’re still working through with people and letting them know it’s very easy to do,” Beckman says. “We do this all the time on desktops and everywhere else by installing security updates and other things. In Linux, it’s very easy to make a modification and change something and try it and go back if you don’t like it. But it is a cultural impediment.”

Beckman and his colleagues think OS modifications will be vital to exascale computing’s success. “The operating system now needs to manage the machine in new ways – manage power dynamically, manage memory and cores dynamically, and even manage the speed of different processors dynamically – because every bit uses power, and power is a constrained resource.”