In some ways, the interconnecting grids of wires, generators and transformers that power U.S. homes and businesses resemble a patchwork quilt. As lines and power plants rose up to follow the population, each was sewn onto the system we have today.

Now grid operators are paying a price for complexity. They track thousands of components, adjusting power flows and generator speeds to ensure that production and demand are roughly balanced and that line capacities aren’t exceeded. If something fails, operators quickly jump in to avoid overloads and outages.

Multiple failures often mean major blackouts, like those that hit the West Coast in 1996 and the Northeast in 2003. Yet the grid is more volatile than ever as companies add intermittent power sources such as solar and wind. The computers that help manage the grids struggle to keep up. Software often takes minutes to run; failures can occur in seconds.

Researchers at the Department of Energy’s Pacific Northwest National Laboratory (PNNL) are developing computational tools that will boost performance and help grid managers improve their ability to block blackouts.

Today’s real-time grid operation is built on a process called “state estimation,” which compares and minimizes the mismatch between model-predicted electrical quantities and values measured by remote sensors in the supervisory control and data acquisition (SCADA) system. The result is an estimated grid state reflecting actual operating conditions.
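State estimation of this kind is commonly posed as a weighted least-squares problem: find the state that makes model-predicted measurements agree as closely as possible with sensor readings, trusting accurate sensors more. The sketch below is a minimal illustration, not PNNL's or any vendor's software. It assumes a hypothetical three-bus network, a linearized ("DC") flow model, equal line reactances of 0.1 per unit and made-up meter accuracies.

```python
def wls_estimate(rows, readings, sigmas):
    """Weighted least squares for two unknown states.

    rows[i] holds the two model coefficients that map the states to
    measurement i; sigmas[i] is that sensor's assumed standard deviation.
    """
    g11 = g12 = g22 = b1 = b2 = 0.0
    for (h1, h2), z, sigma in zip(rows, readings, sigmas):
        w = 1.0 / (sigma * sigma)          # accurate sensors get more weight
        g11 += w * h1 * h1
        g12 += w * h1 * h2
        g22 += w * h2 * h2
        b1 += w * h1 * z
        b2 += w * h2 * z
    det = g11 * g22 - g12 * g12            # solve the 2x2 normal equations
    return (g22 * b1 - g12 * b2) / det, (g11 * b2 - g12 * b1) / det


# Hypothetical network: bus 1 is the angle reference, so the unknown states
# are the voltage angles at buses 2 and 3 (radians). With reactance 0.1,
# flow on line i-j is (theta_i - theta_j) / 0.1.
rows = [(-10.0, 0.0),    # flow on line 1-2
        (0.0, -10.0),    # flow on line 1-3
        (10.0, -10.0)]   # flow on line 2-3
readings = [0.2, 0.5, 0.3]        # metered flows, per unit (illustrative)
sigmas = [0.01, 0.01, 0.01]       # assumed meter accuracy
theta2, theta3 = wls_estimate(rows, readings, sigmas)
```

Three measurements for two unknowns give the redundancy that lets the estimator average out sensor error. The result is still a static snapshot, valid only for the moment the readings were taken.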

With an estimated state in hand, engineers analyze contingencies, predicting the consequences of component failures so they’re prepared to head off problems.

But the system is inadequate, says Zhenyu “Henry” Huang, a senior research engineer in PNNL’s Energy Technology Development (ETD) group. State estimation uses a static model, so states such as voltage and current essentially represent a snapshot of the recent past, rather than what’s happening at the moment.


That creates an inherent risk, Huang says. For example, when a power line fails, operators must quickly recalculate flows on other lines and redirect them to avoid overloads. With static data, operators can’t be sure what’s happening during the transition, raising dangerous possibilities.

Huang and his colleagues are developing algorithms for dynamic state estimation based on how fast generators are turning and other constantly changing information. Dynamic state estimation would allow operators to more quickly identify and correct contingencies.

“We’re trying to bring the dynamic operation into the real-time grid operation,” he says. “We use differential equations to describe the swings or oscillations on the grid.”
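The article does not spell out the equations, but the textbook starting point for such swings is the classical swing equation for one generator tied to a large grid. The sketch below assumes that simplified model and hypothetical per-unit parameters (inertia constant, damping, mechanical power and peak electrical power are all illustrative values). It integrates the equation with a fourth-order Runge-Kutta scheme.

```python
import math

OMEGA_0 = 2.0 * math.pi * 60.0  # synchronous speed of a 60 Hz grid, rad/s


def swing_rhs(delta, dw, h=5.0, d=10.0, p_mech=0.8, p_max=1.6):
    """Time derivatives of rotor angle (rad) and per-unit speed deviation."""
    d_delta = OMEGA_0 * dw
    d_dw = (p_mech - p_max * math.sin(delta) - d * dw) / (2.0 * h)
    return d_delta, d_dw


def simulate(delta0, dw0=0.0, dt=0.005, t_end=10.0):
    """Integrate the swing equation with classical fourth-order Runge-Kutta."""
    delta, dw = delta0, dw0
    angles = [delta]
    for _ in range(int(round(t_end / dt))):
        k1 = swing_rhs(delta, dw)
        k2 = swing_rhs(delta + 0.5 * dt * k1[0], dw + 0.5 * dt * k1[1])
        k3 = swing_rhs(delta + 0.5 * dt * k2[0], dw + 0.5 * dt * k2[1])
        k4 = swing_rhs(delta + dt * k3[0], dw + dt * k3[1])
        delta += dt * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0
        dw += dt * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0
        angles.append(delta)
    return angles


# Start the rotor 0.3 rad away from its equilibrium angle and let it swing.
delta_eq = math.asin(0.8 / 1.6)
angles = simulate(delta_eq + 0.3)
```

With these assumed values the rotor angle oscillates at roughly 1 Hz and settles back toward equilibrium over several seconds, the kind of motion a static snapshot cannot represent.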

With support from the DOE Office of Science’s Advanced Scientific Computing Research (ASCR) program, Huang and his colleagues will frame the state estimation problem so it’s amenable to computer solution. They’ll work on making their algorithms run on parallel computers, which divide a problem into pieces and distribute the pieces to multiple processors that solve them simultaneously.

One goal is to devise codes able to perform dynamic state estimation 30 times a second – as fast as data arrives from phasor measurement units (PMUs), a new generation of remote sensors. That’s a huge challenge, Huang says, and a main driver for increasing computational efficiency.

The group’s research focuses on the Extended Kalman Filter (EKF) method. In essence, EKF uses what is known to be true about a model to filter out noisy measurements that contribute to uncertainty. The process is applied recursively to update the state estimate at each time step.

Huang’s EKF-based approach starts with a system of equations representing the power grid and applies a prediction-correction procedure. First, the computer solves the equations to provide an idea of the grid’s status. “That’s the prediction step. You use the model to predict what would be happening in the power grid right now,” Huang says.

But because the grid changes constantly, “if your model is not updated, the prediction will not be accurate. We cannot trust it. We have to do some kind of correction.”

The correction step compares predictions with PMU data. An algorithm estimates an error rate and uses it to correct the state variables, filtering out noise as well as outliers and dropouts. “The final output will be a much better picture of your real-time power grid status,” Huang says.
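The prediction-correction cycle can be sketched for a single generator. This is an illustrative toy, not the PNNL code: it assumes a classical swing-equation model with hypothetical per-unit parameters, a semi-implicit Euler step at the 30-per-second PMU rate, and a sensor that reports the rotor angle directly with Gaussian noise. Outlier and dropout handling are left out.

```python
import math
import random

# Hypothetical machine parameters (per unit); not taken from the article.
H_INERTIA, DAMPING, P_MECH, P_MAX = 5.0, 2.0, 0.8, 1.6
OMEGA_0 = 2.0 * math.pi * 60.0   # synchronous speed, rad/s
DT = 1.0 / 30.0                  # one filter step per PMU sample


def predict_state(delta, dw):
    """Advance angle and speed deviation one step (semi-implicit Euler)."""
    accel = (P_MECH - P_MAX * math.sin(delta) - DAMPING * dw) / (2.0 * H_INERTIA)
    dw_next = dw + DT * accel
    return delta + DT * OMEGA_0 * dw_next, dw_next


def jacobian(delta):
    """Partial derivatives of predict_state with respect to (delta, dw)."""
    a = -DT * P_MAX * math.cos(delta) / (2.0 * H_INERTIA)
    b = 1.0 - DT * DAMPING / (2.0 * H_INERTIA)
    c = DT * OMEGA_0
    return [[1.0 + c * a, c * b], [a, b]]


def ekf_step(x, P, z, q, r):
    """One prediction-correction cycle; z is a noisy angle measurement."""
    # Prediction: run the model forward and propagate P <- F P F^T + Q.
    F = jacobian(x[0])
    xp = predict_state(x[0], x[1])
    FP = [[sum(F[i][k] * P[k][j] for k in range(2)) for j in range(2)]
          for i in range(2)]
    Pp = [[sum(FP[i][k] * F[j][k] for k in range(2)) + (q[i] if i == j else 0.0)
           for j in range(2)] for i in range(2)]
    # Correction: the sensor sees the angle only, so H = [1, 0].
    innovation = z - xp[0]
    s = Pp[0][0] + r
    K = [Pp[0][0] / s, Pp[1][0] / s]
    x_new = (xp[0] + K[0] * innovation, xp[1] + K[1] * innovation)
    P_new = [[Pp[i][j] - K[i] * Pp[0][j] for j in range(2)] for i in range(2)]
    return x_new, P_new


def run_demo(steps=300, sigma=0.05, seed=0):
    """Return RMS angle error of raw measurements and of the EKF estimate."""
    rng = random.Random(seed)
    delta_eq = math.asin(P_MECH / P_MAX)
    truth = (delta_eq + 0.3, 0.0)                     # disturbed "real" machine
    x, P = (delta_eq, 0.0), [[0.1, 0.0], [0.0, 1e-4]]  # filter's initial guess
    raw_sq = est_sq = 0.0
    for k in range(steps):
        truth = predict_state(*truth)
        z = truth[0] + rng.gauss(0.0, sigma)
        x, P = ekf_step(x, P, z, q=(1e-8, 1e-10), r=sigma ** 2)
        if k >= steps // 2:                           # score after start-up
            raw_sq += (z - truth[0]) ** 2
            est_sq += (x[0] - truth[0]) ** 2
    n = steps - steps // 2
    return math.sqrt(raw_sq / n), math.sqrt(est_sq / n)


rms_raw, rms_est = run_demo()
```

The filter starts from the wrong angle and never measures speed, yet it recovers both states because the model links them. Comparing `rms_est` with `rms_raw` shows how much measurement noise the correction step removes in this toy setting.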

Huang and his colleagues are investigating ways the model can be calibrated with system measurements so it’s more accurate with each step, producing better performance over time.

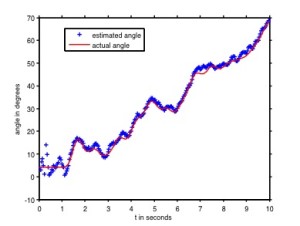

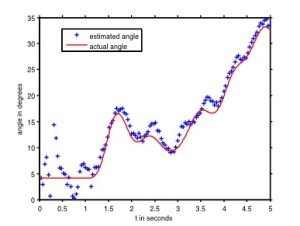

In one test, Huang and his colleagues injected 30 percent measurement noise into a simulated system of three generators and nine busbars, the electrical connections that concentrate and conduct power. The EKF algorithm rejected most of the noise and produced estimates of generator speed oscillations nearly identical to the actual values. The test also showed the algorithm could handle 30 phasor measurements per second.

A more recent test on a simulated system of 50 generators and 145 busbars had similar results. The next challenge is a simulated system of 1,000 generators and thousands of busbars, Huang says.

“We find the performance is very good. We believe we will be able to develop a function reliable enough for power grid operation.” Yet the challenges are great enough that field tests are still years away.

Any model will have to demonstrate its validity and adjust for changing conditions if power engineers are to trust it and use it for real-time control. Shuai Lu, another ETD group researcher, heads a second ASCR-supported project to develop real-time model validation and calibration on a large system – particularly the power grid. A model that’s constantly validated and calibrated would give engineers better information as they watch for problems and could lead to more reliable and efficient power grid operation.

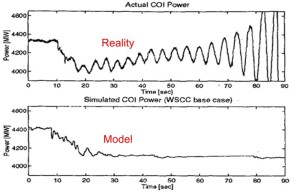

Valid models compare well with real data. Well-calibrated models take external forces into account to more closely match reality. But standard model validation and calibration methods typically rely on offline test data that may quickly become obsolete, adding to model uncertainty. And because they’re conducted offline, such tests lead to costly service interruptions.

Field measurements (top) stand in stark contrast with simulation models of power flows on the California-Oregon Intertie (COI) on Aug. 10, 1996. An intertie, or interutility tieline, is a circuit used to connect two load areas of two utility systems. The power grid broke up after oscillations accelerated rapidly, but models showed a stable response. The comparison demonstrates how inadequate power grid models can be. (From Kosterev et al., 1999)

“I wouldn’t say the offline studies are unrealistic,” Lu says. “There is still truth in them,” but often they don’t accurately represent the actual system in real time, so engineers must add big margins when they use the studies to determine system operating limits. And studies based on offline models may not reflect the impact of line drops or other changes in the system with sufficient accuracy.

Many power grid models also attempt to portray all of the hundreds or thousands of generators, transformers and transmission lines, but “when we put all these component-level models together, their uncertainties will add up,” Lu says. “The result from the final big model may be very unreliable.”

Increases in intermittent power sources – wind, solar – also exacerbate uncertainty, he says. “We cannot control the wind, so the power flows on those lines become much more variable than before.”

Most power grid models are deterministic, with fixed parameters, so they’re unlikely to capture system variability. They also do little to account for human factors, like the actions of power system operators in grid control rooms.

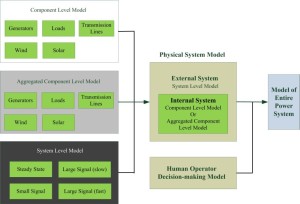

The models Lu and his fellow researchers devise will work with online measurements, providing real-time validation and calibration that includes human factors. But including details of each system component in the model would cost an intolerable number of processor hours, even on the most powerful computers.

To make the simulations computationally tractable, the researchers use model reduction techniques – mathematical methods that combine levels of detail. They will rely on individual component data, as in standard models, if the data are reliable. They will aggregate other inputs if their details are unnecessary. They may discard some parameters in favor of major characteristics.

Finally, the models will portray some parts at the system level if little information is available or only high-level data is needed, says Guang Lin, a PNNL computational mathematics scientist collaborating on the project.

“If we’re only interested in the Pacific Northwest power system, then for other parts of the interconnection we can aggregate the model. Since we don’t need that much detail we can model them with some system-level models.”
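One textbook way to aggregate a distant region is to replace a group of generators that swing together with a single equivalent machine. The article does not say which reduction methods the PNNL team uses, so the sketch below is only an illustration of the idea, with hypothetical ratings and inertia constants.

```python
def aggregate_generators(units):
    """Collapse generators into one equivalent machine.

    Each unit is a tuple (rating_mva, inertia_s, output_mw).
    Returns (total rating, equivalent inertia constant, total output).
    """
    total_rating = sum(rating for rating, _, _ in units)
    total_output = sum(output for _, _, output in units)
    # Weight each inertia constant by rating so the equivalent machine
    # stores the same kinetic energy as the group it replaces.
    inertia = sum(rating * h for rating, h, _ in units) / total_rating
    return total_rating, inertia, total_output


# Two hypothetical plants outside the region of interest.
equivalent = aggregate_generators([(100.0, 4.0, 80.0), (300.0, 6.0, 250.0)])
```

The reduced model tracks one machine instead of two, and the saving grows with the size of the group being lumped together.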

Pacific Northwest National Laboratory researchers are proposing a system model calibration and validation approach that uses different levels of detail and complexity to make the problem computationally tractable. It will use a component-level model when structures are clearly known, down to a single generator (white box). Other inputs will be aggregated if their details are unnecessary or parameters are unknown (gray box). The model will portray some parts at the system level (black box) if little information is available or only high-level data is needed. The group will study and model human operators’ responses to system disturbances and include them to arrive at an entire system model.

The group also will study human operator response and add its effects to the model, Lu says. “We need to be clear about the response time, the realistic response time, of system operators. We need to consider these factors because operators will be the ones using the output from the model.”

Even with improved, real-time data for validation and calibration, the researchers’ model still will contain uncertainty. Part of their project will focus on quantifying that uncertainty – arriving at a number describing the model’s reliability.

“We have this numerical model, and if we want to use it to predict what will happen in the future, we have to consider uncertainty,” Lin says. But real-time uncertainty quantification will demand even more computational power.

To tackle the problem, the researchers will develop tools to identify and rank random inputs according to their impact on the output. The most sensitive inputs will be modeled with algorithms that incorporate randomness to account for uncertainty.
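A simple way to rank random inputs is to sample them many times, run the model on each sample, and score each input by how strongly it correlates with the output. The sketch below assumes that Monte Carlo approach. The `toy_flow` model, its coefficients and the input spreads are all hypothetical stand-ins for a real grid model, and the article does not state which ranking method the researchers will adopt.

```python
import math
import random


def toy_flow(wind, load, temp):
    """Hypothetical stand-in for a grid model: a tie-line flow, per unit."""
    return 1.0 * wind - 0.5 * load + 0.1 * temp


def rank_inputs(model, spreads, samples=5000, seed=0):
    """Rank inputs by squared correlation with the model output."""
    rng = random.Random(seed)
    names = list(spreads)
    draws = [{n: rng.gauss(0.0, spreads[n]) for n in names}
             for _ in range(samples)]
    outputs = [model(**draw) for draw in draws]
    mean_y = sum(outputs) / samples
    var_y = sum((y - mean_y) ** 2 for y in outputs)
    scores = {}
    for name in names:
        column = [draw[name] for draw in draws]
        mean_x = sum(column) / samples
        cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(column, outputs))
        var_x = sum((x - mean_x) ** 2 for x in column)
        scores[name] = cov * cov / (var_x * var_y)   # squared correlation
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Assumed standard deviations of each uncertain input (illustrative).
ranking = rank_inputs(toy_flow, {"wind": 0.3, "load": 0.2, "temp": 0.05})
```

Inputs at the top of `ranking` are the ones worth treating as random in the full model. Those at the bottom can be fixed at nominal values to save computation.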

Other researchers have tried model reduction techniques for power system modeling, Lu says, but no one has attempted to systematically validate and calibrate the reduced power system model and use it in real-time applications. That’s partly due to the lack of data and computational power.

To address the data issue, the PNNL researchers are working with the Western Electricity Coordinating Council (WECC), which oversees the power grid in much of the Western United States and in parts of Canada and Mexico. WECC transmission operators and utilities are integrating SCADA and PMU data from the entire system. Instead of having access only to their own data, they will be able to tap system-wide information. Many more PMUs, which collect accurate data at high time resolution, also are coming online in the WECC system.

The PNNL group also is working with the Bonneville Power Administration, which oversees electric transmission from dams and other Pacific Northwest facilities; with the California Independent System Operator, which oversees much of that state’s power grid; and with other WECC utilities.

With their help and with WECC’s improved sensor and communications networks, “PNNL is now able to overcome past data issues,” Lu says.

PNNL also is well equipped to surmount the computing power hurdle. Its Cray XMT is based on a massively multithreaded architecture, making it well suited to handle scattered power grid data.

Says Lu, “All these factors added together make it very promising.”