As researchers develop new materials for spacecraft, aircraft and earthbound uses, they need to generate images from experimental results quickly at atomic-scale resolution.

“We need to understand how these new materials will function under extreme environments or extreme temperatures so that we’ll be safe when we use them,” says Xiao Wang, staff research scientist at Oak Ridge National Laboratory. To find out, Wang and his colleagues study the materials’ molecules and atoms using electron microscopy.

Even an electron microscope can’t reveal individual atoms with a single, straightforward exposure. But a technique called ptychography can. Ptychography, whose name comes from the Greek word for “fold,” uses overlapping electron microscope exposures to record interference patterns that can be computationally combined into images with resolutions higher than any other technology achieves. The idea dates to the late 1960s, and a variant was developed at Caltech in 2013; since then, various research groups have used ptychography to generate images that reveal atomic-level crystal structures. However, the computer processing that ptychography requires has demanded prohibitive amounts of memory and long compute times on high-performance machines.
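
For readers who want the underlying data model: a standard textbook description of what each exposure records is that the detector measures the intensity, but not the phase, of the Fourier transform of the probe multiplied by the patch of sample it illuminates. The one-dimensional toy below is a minimal sketch of that forward model under the usual multiplicative approximation. The object, probe, step size and function names are hypothetical, and the article does not say which model the ORNL code uses.

```python
import cmath

def dft(signal):
    """Naive discrete Fourier transform, O(n^2); fine for toy-sized arrays."""
    n = len(signal)
    return [sum(signal[k] * cmath.exp(-2j * cmath.pi * f * k / n) for k in range(n))
            for f in range(n)]

def diffraction_pattern(obj, probe, position):
    """Intensity recorded for one probe position (toy 1-D far-field model).

    Assumes the multiplicative approximation: exit wave = probe * object patch.
    Only |.|^2 reaches the detector, so the phase is lost for each exposure.
    """
    exit_wave = [probe[k] * obj[position + k] for k in range(len(probe))]
    return [abs(v) ** 2 for v in dft(exit_wave)]

# Hypothetical sample: a pure phase object (unit magnitude, varying phase).
obj = [cmath.exp(1j * 0.3 * k) for k in range(24)]
probe = [1.0] * 8   # hypothetical flat 8-pixel probe
STEP = 4            # neighboring exposures share 8 - 4 = 4 pixels

patterns = {pos: diffraction_pattern(obj, probe, pos)
            for pos in range(0, len(obj) - len(probe) + 1, STEP)}

for pos, pattern in patterns.items():
    print(pos, [round(i, 2) for i in pattern])
```

Because each pixel of `obj` appears in more than one exposure, the recorded patterns constrain one another; ptychographic reconstruction exploits that redundancy to recover the phase that any single exposure throws away.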

Now Wang and his colleagues have developed a new approach to ptychography computation they call image gradient decomposition. In calculations on Oak Ridge’s Summit supercomputer, using electron microscopy data from a titanate material, they compared their new algorithm with the prevailing method. Image gradient decomposition split the problem into nine times as many partitions for simultaneous processing, cutting the average memory needed per GPU by 98 percent. At this scale, the new method produced a high-resolution image 86 times faster: in 2.2 minutes, compared with more than 3 hours. The team reported the results at last year’s supercomputing conference, SC22.

With image gradient decomposition, ptychography is a step closer to the team’s ultimate goal: reconstructing an image so efficiently that users can see it as soon as they finish their experiments, Wang says.

This is Wang’s most recent effort to speed up image processing and sharpen resolution across imaging modalities, from submicroscopic scientific imaging to medical imaging. In 2017, as a doctoral student at Purdue University, he was a finalist for the Association for Computing Machinery’s Gordon Bell Prize. He and his colleagues developed the non-uniform parallel super-voxel algorithm, a more efficient way to process computed tomography (CT) data that are awkward for computers to handle. The new algorithm was more than 1,665 times faster than the previous top performer. In 2022, Wang and his co-workers used an improved version to win the Truth CT Grand Challenge, hosted by the American Association of Physicists in Medicine. Among 55 teams working with the same data, they produced the sharpest image.

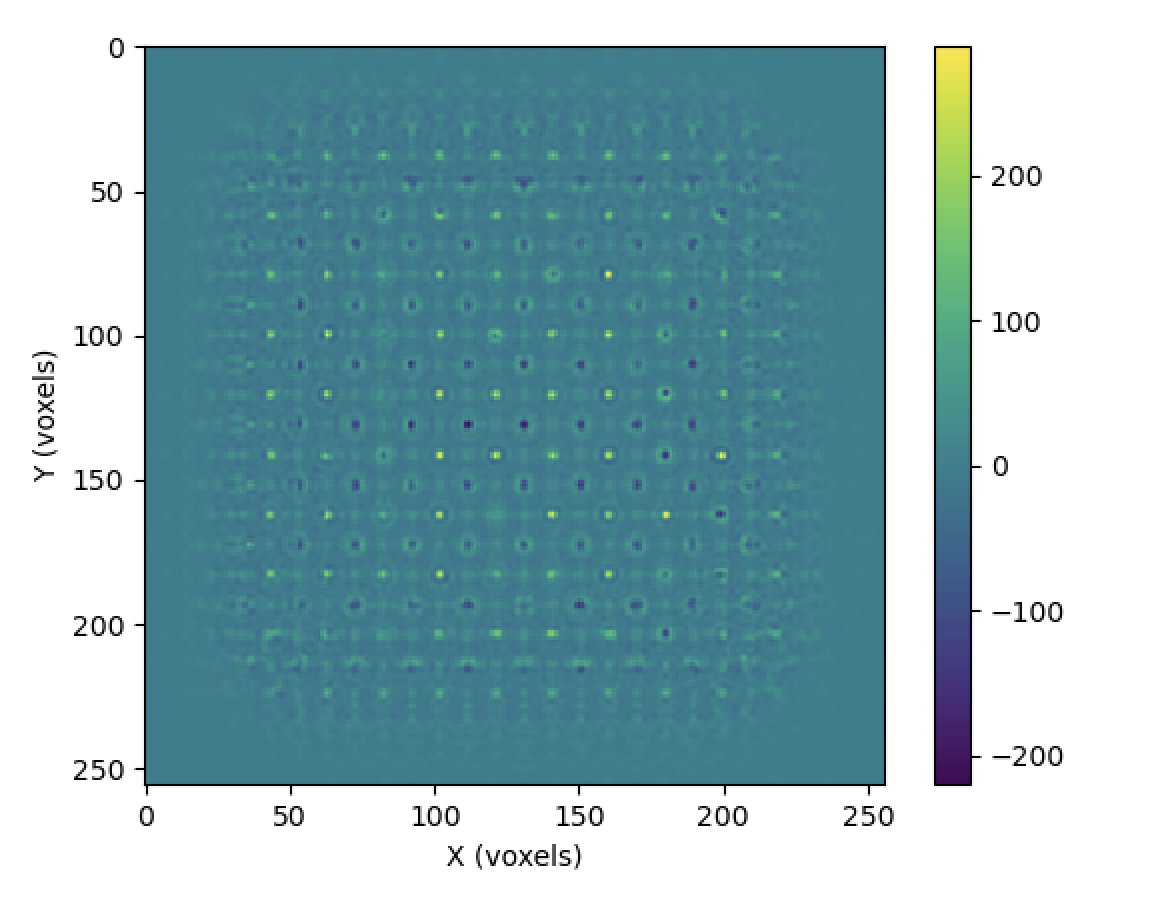

Ptychography begins with a series of overlapping, circular electron microscope exposures called probe locations. This snapshot array may look at first like water rings left by drinking glasses on a tabletop, but the circles’ placement is not random. They are precisely arranged so that every spot of the target material is captured within two or more probe locations, each of which typically provides data for hundreds of pixels.
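
The overlap requirement is easy to check numerically. The sketch below places hypothetical circular probes on a square raster whose step is smaller than the probe radius and counts how many probe locations illuminate each pixel. The image size, radius, step and function names are illustrative assumptions, not values from the ORNL experiments.

```python
IMAGE_SIZE = 32    # hypothetical image width and height, in pixels
PROBE_RADIUS = 6   # hypothetical probe radius, in pixels
STEP = 4           # hypothetical spacing between neighboring probe centers

def probe_centers(image_size, step):
    """Raster grid of probe centers, including the far edge so borders are covered."""
    positions = range(0, image_size + 1, step)
    return [(r, c) for r in positions for c in positions]

def coverage_map(image_size, radius, centers):
    """Count how many circular probes illuminate each pixel."""
    counts = [[0] * image_size for _ in range(image_size)]
    for pr, pc in centers:
        for r in range(image_size):
            for c in range(image_size):
                if (r - pr) ** 2 + (c - pc) ** 2 <= radius ** 2:
                    counts[r][c] += 1
    return counts

centers = probe_centers(IMAGE_SIZE, STEP)
counts = coverage_map(IMAGE_SIZE, PROBE_RADIUS, centers)
min_coverage = min(min(row) for row in counts)
print(len(centers), "probe locations; every pixel seen by at least", min_coverage)
```

With these numbers every pixel lies inside a 4-by-4 cell whose four corner probes are all within the probe radius, so each pixel is covered at least four times. Widening `STEP` relative to `PROBE_RADIUS` eventually leaves some pixels covered fewer than twice.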

Until recently, the most efficient method for reconstructing an image from such probe locations has been halo voxel exchange. (A voxel is the 3D counterpart of a pixel.) That algorithm divides the probe locations into central tiles bordered by halos, or regions where neighboring probe locations overlap. The tiles and halos are updated separately, then recombined into the final image.
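
The tile-plus-halo bookkeeping can be sketched in one dimension. In this hypothetical example, an image row of 32 pixels is split into four core tiles, and each tile is widened by a three-pixel halo on each side; the pixels where two widened tiles overlap are the ones neighboring GPUs would have to exchange and reconcile. The sizes and function names are illustrative, and the sketch omits the actual data exchange between GPUs.

```python
def halo_tiles(length, n_tiles, halo):
    """Split [0, length) into n_tiles core ranges and pad each with a halo."""
    core = length // n_tiles
    tiles = []
    for t in range(n_tiles):
        start = t * core
        stop = length if t == n_tiles - 1 else start + core  # last tile takes any remainder
        tiles.append({
            "core": (start, stop),
            "with_halo": (max(0, start - halo), min(length, stop + halo)),
        })
    return tiles

def shared_pixels(a, b):
    """Number of pixels where two half-open ranges overlap."""
    return max(0, min(a[1], b[1]) - max(a[0], b[0]))

tiles = halo_tiles(32, 4, 3)
for i, tile in enumerate(tiles):
    print(i, tile)

# Neighboring widened tiles overlap by two halo widths; those pixels belong to both.
print(shared_pixels(tiles[0]["with_halo"], tiles[1]["with_halo"]))
```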

Halo voxel exchange produces high-resolution images, but it requires long compute times on large machines because it crunches numbers pixel by pixel. Each probe location is assigned to a GPU, which first combines data from the overlap between two probe locations and then, for a subset of those pixels, updates them using data from a third overlapping probe location, then a fourth and possibly more. Between updates, each GPU must retain the results of the previous ones, incurring large memory costs. These demands often force tradeoffs, sacrificing image resolution to save memory and computing time.

Ptychography algorithms tend to frustrate efforts at parallelization. “These smaller problems are all dependent on each other,” Wang says. Since ptychography depends on overlapping regions’ interference, “there isn’t an easy way that we can partition and distribute this problem size into smaller problems for each supercomputer’s GPU to compute.”

Halo voxel exchange achieves only limited parallelization, and it splits the problem so that when two GPUs crunch numbers independently for the same overlapping area, their results can be incomplete and contradictory. The product is a series of artifacts in the final image that look like clumsy attempts to splice the image by hand. The ORNL team’s image gradient decomposition approach avoids these artifacts.
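
Why independent updates of a shared region can disagree is visible even in a toy model. Below, a single overlap pixel is nudged toward each probe location’s estimate in turn, a stand-in for an order-dependent sequential update. It is not the halo voxel exchange algorithm itself, and the estimates, step size and function name are hypothetical.

```python
def relax(value, estimates, step=0.5):
    """Toy sequential update: move a pixel partway toward each estimate in turn.

    Each step depends on the result of the previous one, so order matters.
    """
    for estimate in estimates:
        value += step * (estimate - value)
    return value

# Hypothetical estimates of one overlap pixel from three probe locations.
estimates = {"p1": 1.0, "p2": 3.0, "p3": 8.0}
start = 0.0

serial = relax(start, [estimates["p1"], estimates["p2"], estimates["p3"]])
gpu_a = relax(start, [estimates["p1"], estimates["p2"]])  # GPU A only sees p1 and p2
gpu_b = relax(start, [estimates["p2"], estimates["p3"]])  # GPU B only sees p2 and p3
reversed_order = relax(start, [estimates["p3"], estimates["p2"], estimates["p1"]])

print(serial, gpu_a, gpu_b, reversed_order)
```

In this toy, the two GPUs end up holding different values for the same pixel, neither matches the serial result, and even the serial result changes if the probe locations are visited in a different order. Stitching such tiles together leaves visible seams.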

The new algorithm was born in a group discussion among Wang, principal investigator Jacob Hinkle, materials scientist Debangshu Mukherjee and others at Oak Ridge. (Hinkle has since left ORNL for the private sector.) The group considered analyzing each probe location at a higher level instead of updating the pixels individually. Each probe location would be assigned to a separate GPU, as in halo voxel exchange, but the new algorithm would compute the gradients within each. As vectors, those gradients could express the overall shift across pixels, from light to dark. The resulting arrow would denote both the direction and magnitude of the shading.
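
The arrow picture can be made concrete with a spatial intensity gradient computed by central differences. This illustrates the analogy only: the article does not specify exactly which gradient the ORNL code computes, and in iterative reconstruction the word usually refers to the gradient of an error measure with respect to the image rather than to raw brightness. The patch and function name below are hypothetical.

```python
import math

def intensity_gradient(patch, r, c):
    """Central-difference gradient of a 2-D intensity patch at interior pixel (r, c)."""
    gy = (patch[r + 1][c] - patch[r - 1][c]) / 2.0   # change going down the rows
    gx = (patch[r][c + 1] - patch[r][c - 1]) / 2.0   # change going across the columns
    magnitude = math.hypot(gx, gy)                   # length of the arrow
    direction = math.degrees(math.atan2(gy, gx))     # 0 degrees points toward +x
    return gx, gy, magnitude, direction

# Hypothetical 5x5 patch that brightens steadily from left to right.
patch = [[10 * c for c in range(5)] for _ in range(5)]
print(intensity_gradient(patch, 2, 2))  # (10.0, 0.0, 10.0, 0.0)
```

The result is an arrow of length 10 pointing along the direction of increasing brightness, summarizing the shading of the neighborhood in two numbers.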

After the discussion, Wang found that working with gradients solved two of ptychography’s most stubborn challenges. Gradients can be processed faster than pixel arrays, and retaining gradients between updates requires less memory.

Then Wang took on the big question: how to partition the huge problem into chunks suitable for individual GPUs and aggregate their results while preserving the inherent dependency of the different probe locations.

Gradients again offered the solution. “One thing we can do is let each GPU keep track of the gradients of their computations,” he says. “When those different probe locations overlap with each other, we can just simply add their gradients together in their overlap regions.”
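
Adding gradients in overlap regions amounts to a scatter-add into an image-sized buffer. The minimal sketch below assumes each probe location has already produced a small gradient patch (the values, offsets and function names are hypothetical) and shows that, because addition is commutative, the accumulated result does not depend on the order in which probe locations report, unlike a chain of sequential updates that each build on the last.

```python
def zeros(n):
    """An n-by-n buffer of zeros."""
    return [[0.0] * n for _ in range(n)]

def accumulate(global_grad, offset, patch):
    """Add one probe location's gradient patch into the shared image-sized buffer."""
    r0, c0 = offset
    for r, row in enumerate(patch):
        for c, value in enumerate(row):
            global_grad[r0 + r][c0 + c] += value

# Hypothetical 3x3 gradient patches from three overlapping probe locations.
contributions = [
    ((0, 0), [[1.0] * 3 for _ in range(3)]),
    ((0, 2), [[2.0] * 3 for _ in range(3)]),
    ((2, 1), [[4.0] * 3 for _ in range(3)]),
]

forward = zeros(5)
for offset, patch in contributions:
    accumulate(forward, offset, patch)

backward = zeros(5)
for offset, patch in reversed(contributions):
    accumulate(backward, offset, patch)

print(forward[2][2], forward == backward)  # pixel (2, 2) lies in all three patches
```

For general floating-point values the last few bits of a sum can depend on the order of addition, but the result agrees up to rounding, which is what allows the work to be divided freely.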

Like compass needles finding north, the arrow-like gradients swing back and forth with each addition from an overlapping probe location. “That allows us to partition the problem size among different GPUs, while also keeping the dependency between the neighboring probe locations,” he says.
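
Partitioning then becomes a matter of giving each GPU a subset of probe locations, letting it build a local gradient buffer, and summing the buffers. In the sketch below, Python threads stand in for GPUs and the probe data and function names are hypothetical. Probe locations are dealt out round-robin for brevity, whereas a real code would more likely assign spatially contiguous blocks to limit communication. It illustrates the data flow only, not ORNL’s implementation or any speedup.

```python
from concurrent.futures import ThreadPoolExecutor

SIZE = 6  # hypothetical image width and height, in pixels

def zeros():
    return [[0.0] * SIZE for _ in range(SIZE)]

def local_gradient(probes):
    """Work done by one stand-in 'GPU': sum the gradient patches of its own probes."""
    buf = zeros()
    for (r0, c0), patch in probes:
        for r, row in enumerate(patch):
            for c, v in enumerate(row):
                buf[r0 + r][c0 + c] += v
    return buf

def reduce_sum(buffers):
    """Combine per-worker buffers by element-wise addition (a reduction)."""
    total = zeros()
    for buf in buffers:
        for r in range(SIZE):
            for c in range(SIZE):
                total[r][c] += buf[r][c]
    return total

# Hypothetical 3x3 patches on a raster with step 1, so neighbors overlap heavily.
probes = [((r, c), [[float(r + c + 1)] * 3 for _ in range(3)])
          for r in range(4) for c in range(4)]

n_workers = 4
partitions = [probes[w::n_workers] for w in range(n_workers)]  # round-robin split
with ThreadPoolExecutor(max_workers=n_workers) as pool:
    partial = list(pool.map(local_gradient, partitions))

parallel = reduce_sum(partial)
serial = local_gradient(probes)
print(parallel == serial)
```

Calling `local_gradient` on one partition at a time and adding each result into a running total gives the same answer without any parallel hardware, which is the idea behind working through subproblems one after another on a smaller machine.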

When the zones with their final gradients are reassembled, each puzzle piece brings its unique shading to the overall image, and atomic-level details pop. Because image gradient decomposition avoids problems with incomplete and contradictory data, the reconstructed image is free of artifacts. AI methods can further enhance the method’s resolution.

Wang foresees a bright future for the new algorithm. Now that such problems can be neatly partitioned, they can also run on smaller machines, even laptops, instead of requiring high-performance machines. “If we don’t have a parallel supercomputer, we can work on those subproblems one at a time and then stitch them together into a high resolution superlarge, superfine image reconstruction,” Wang says.

This new method could also allow exascale machines such as Frontier, the world’s first computer to execute more than 1 million trillion operations a second, to zoom in even further. “Potentially, if we use this method on Frontier, it can lead to the highest resolution electron ptychography that we have,” Wang says. “And that may even allow us to observe new subparticle phenomena that we have never found before.”