In less than two years, the Texas cold snap left millions of people without power, the Pacific Northwest heat wave scorched millions of trees, and Hurricane Ian caused $50 billion in damage in Florida and the Southeast. Scientists agree that costly and deadly events like these will occur more frequently. But when and where will they strike next? Climate researchers can’t say, at least not yet.

The latest version of the Department of Energy’s high-resolution Energy Exascale Earth System Model (E3SM), run on DOE Leadership Computing Facility resources, promises the most detailed projections yet of extreme weather over the next century.

“If we’ve learned anything over the past several years, it’s that the extreme weather events that we have experienced over the past 100 years are only a small fraction of what may be possible,” says Paul Ullrich, professor of regional climate modeling at the University of California, Davis. “A big part of this project is to understand what kind of extreme events could have happened historically, then to quantify the characteristics – for example, intensity, duration, frequency – of those events,” as well as those of potential future weather disasters.
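The three characteristics Ullrich names can be made concrete with a short sketch. Assuming a daily series at a single location (for example, daily maximum temperature) and a fixed threshold, both hypothetical choices, an "event" here is a run of consecutive days above the threshold: its duration is the run length, its intensity is the peak value, and its frequency is the number of events per year. This is a generic threshold-exceedance approach for illustration, not the project’s actual detection method.

```python
from dataclasses import dataclass


@dataclass
class Event:
    start: int        # index of the first day above the threshold
    duration: int     # number of consecutive days above the threshold
    intensity: float  # peak value reached during the event


def find_events(series: list[float], threshold: float) -> list[Event]:
    """Group consecutive values above `threshold` into discrete events (hypothetical helper)."""
    events: list[Event] = []
    start = None
    for i, value in enumerate(series):
        if value > threshold:
            if start is None:
                start = i  # a new event begins
        elif start is not None:
            run = series[start:i]
            events.append(Event(start, len(run), max(run)))
            start = None
    if start is not None:  # event still under way at the end of the record
        run = series[start:]
        events.append(Event(start, len(run), max(run)))
    return events


def frequency_per_year(events: list[Event], n_days: int, days_per_year: int = 365) -> float:
    """Average number of events per year over a record of `n_days` days."""
    return len(events) / (n_days / days_per_year)
```

For instance, `find_events([30, 36, 38, 37, 31, 29, 35, 36, 30], 35)` finds two events: a three-day event peaking at 38 and a one-day event peaking at 36. Real analyses typically add refinements such as minimum durations or percentile-based thresholds.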

For more than half a century, dozens of independent climate models have indicated that rising greenhouse gas concentrations in the atmosphere are contributing to global warming and a looming climate crisis. However, most models do not say how warming will affect the weather in a given city, state, or even country. And because climate models rely on averages computed over decades, they struggle to capture rare, mostly localized outliers: the storms, heat waves and droughts with the greatest potential for destruction.

Projecting changes in the many variables that describe our climate with statistical confidence has traditionally required many high-resolution simulations. Large ensembles of model runs have been invaluable in recent years for tightly bounding projected climate changes, but even the finest of them simulate events only up to 10 years out, at a grid spacing of about 80 kilometers, roughly the length of Rhode Island. Fine-scale weather impacts from tropical cyclones or atmospheric rivers, for example, are invisible at that resolution.

But now, models such as E3SM, running on supercomputers, can simulate future weather events across the globe through the end of this century at grid spacings approaching 20 km.

E3SM simulates how the combination of temperature, wind, precipitation patterns, atmospheric pressure, ocean currents, land-surface type and many other variables can influence regional climate and, in turn, buildings and infrastructure on local, regional, and global scales.

The model is the product of an unprecedented collaboration among seven DOE national laboratories, the National Center for Atmospheric Research (NCAR), four academic institutions and one private-sector company. Version 1 was released in 2018, and version 2 followed in 2021.

‘We’re pushing E3SM to cloud-resolving scales.’

“As one of the most advanced models in terms of its capabilities and the different processes that are represented, E3SM allows us to run experiments on present climates, as well as historical and future climates,” says Ullrich, who is also principal investigator for the DOE-funded HyperFACETS project, which aims to explore gaps in existing climate datasets.

E3SM divides the atmosphere into 86,400 interdependent grid cells. For each one, the model performs dozens of calculations representing meteorological processes.
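To see what 86,400 cells means in practice, treat them as horizontal columns of roughly equal area. This is an idealization (E3SM’s atmosphere actually uses a cubed-sphere mesh), but dividing Earth’s surface of about 510 million km² by 86,400 gives cells of roughly 5,900 km², a spacing of about 77 km, in line with the roughly 80-km grids described earlier. The same arithmetic suggests that a uniform global grid near 20 km would need on the order of 1.3 million columns. The function names below are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def mean_grid_spacing_km(n_cells: int) -> float:
    """Approximate spacing as the side of a square cell of equal area (idealized)."""
    surface_area = 4.0 * math.pi * EARTH_RADIUS_KM ** 2  # about 5.1e8 km^2
    return math.sqrt(surface_area / n_cells)


def cells_for_spacing(dx_km: float) -> int:
    """Number of equal-area cells needed to cover the globe at spacing `dx_km`."""
    surface_area = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return round(surface_area / dx_km ** 2)


print(f"{mean_grid_spacing_km(86_400):.0f} km")  # spacing implied by 86,400 cells
print(f"{cells_for_spacing(20):,} cells")         # cells needed for ~20 km globally
```

Each halving of the spacing quadruples the column count, which is why uniform global runs at cloud-resolving scales are so expensive.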

Ullrich and his team are attempting to produce the world’s first so-called medium ensemble at high resolution from a single global modeling system. “We’re pushing E3SM to cloud-resolving scales using what is known as regionally refined modeling,” Ullrich says. The approach “places higher resolution in certain parts of the domain, allowing us to target the computational expense to very specific regions or events.”
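Regionally refined modeling pays off because most of the globe stays coarse. A rough column count, assuming a refined region of about 8 million km² (roughly the area of the contiguous United States) at 22 km and a hypothetical 100-km background elsewhere, compares such a mesh with a uniform 22-km globe. The function names and the 100-km background are illustrative assumptions, not E3SM’s actual mesh configuration, and the transition zone between resolutions is ignored.

```python
import math

EARTH_AREA_KM2 = 4.0 * math.pi * 6371.0 ** 2  # about 5.1e8 km^2


def column_count(area_km2: float, dx_km: float) -> float:
    """Approximate number of grid columns covering `area_km2` at spacing `dx_km`."""
    return area_km2 / dx_km ** 2


def rrm_vs_uniform(region_km2: float, dx_fine: float, dx_coarse: float) -> tuple[float, float]:
    """Column counts for a refined-region mesh and for a uniform fine mesh.

    Ignores the transition zone between resolutions, so it slightly
    underestimates the size of the refined mesh.
    """
    refined = column_count(region_km2, dx_fine) + column_count(EARTH_AREA_KM2 - region_km2, dx_coarse)
    uniform = column_count(EARTH_AREA_KM2, dx_fine)
    return refined, uniform


# Hypothetical example: ~8 million km^2 refined to 22 km, 100 km everywhere else.
refined, uniform = rrm_vs_uniform(8.0e6, dx_fine=22.0, dx_coarse=100.0)
print(f"refined: {refined:,.0f} columns, uniform: {uniform:,.0f} columns")
```

Under these assumptions the refined mesh needs roughly 67,000 columns versus about 1.05 million for the uniform one, nearly 16 times fewer. Because the time step is generally limited by the smallest cells, the savings come mainly from the column count rather than from a longer time step.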

The team, which includes Colin Zarzycki at Pennsylvania State University, Stefan Rahimi-Esfarjani at UCLA, Melissa Bukovsky at NCAR, Sara Pryor from Cornell University, and Alan Rhoades at Lawrence Berkeley National Laboratory, has an ASCR Leadership Computing Challenge (ALCC) award to target areas of interest. They’ve been allocated 900,000 node-hours on Theta at the Argonne Leadership Computing Facility (ALCF) and 300,000 node-hours on Perlmutter-CPU at the National Energy Research Scientific Computing Center (NERSC). “We can also use a finer grid spacing for larger regions,” Ullrich says, but he notes that doing so would mean fewer storm simulations. “It’s just a matter of how we want to use our ALCC allocation.”
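The trade-off Ullrich describes follows from how cost scales with grid spacing. A common rule of thumb for explicit atmospheric models is that cost grows roughly as the inverse cube of the horizontal spacing: halving the spacing quadruples the number of columns and, through the CFL stability limit, roughly halves the allowable time step. The sketch applies that rule to a fixed allocation; the 10,000 node-hour baseline cost per run is a hypothetical number, not a measured E3SM figure, and the function names are illustrative.

```python
def relative_cost(dx_km: float, dx_ref_km: float) -> float:
    """Cost of a run at `dx_km` relative to one at `dx_ref_km`.

    Assumes cost ~ (dx_ref / dx)^3: four times the columns and, via the
    CFL limit, half the time step per halving of the spacing.
    Vertical levels are held fixed.
    """
    return (dx_ref_km / dx_km) ** 3


def runs_affordable(allocation_node_hours: float, node_hours_per_run: float) -> int:
    """How many complete runs fit within an allocation."""
    return int(allocation_node_hours // node_hours_per_run)


BASE_DX_KM = 22.0
BASE_COST = 10_000.0    # hypothetical node-hours for one run at 22 km
ALLOCATION = 900_000.0  # Theta node-hours in the ALCC award

for dx in (22.0, 11.0):
    cost = BASE_COST * relative_cost(dx, BASE_DX_KM)
    print(f"{dx:>5.1f} km: {runs_affordable(ALLOCATION, cost)} runs")
```

With these assumptions, 900,000 node-hours buys 90 runs at 22 km but only 11 at 11 km.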

Ullrich says E3SM has been thoroughly tuned to run efficiently on Theta’s Intel processors. “This means we can achieve higher throughputs, for instance, than the Weather Research and Forecasting (WRF) system, which is considerably more expensive, in terms of the time and energy required to complete the run.”
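Throughput comparisons like this are usually stated in simulated years per day (SYPD), the number of model years a configuration completes per wall-clock day, alongside the node-hours charged per simulated year. A minimal sketch of both metrics; the function names and any numbers plugged in are illustrative, not measured E3SM or WRF figures.

```python
def simulated_years_per_day(simulated_years: float, wallclock_hours: float) -> float:
    """Throughput: model years completed per wall-clock day."""
    return simulated_years / (wallclock_hours / 24.0)


def node_hours_per_simulated_year(nodes: int, wallclock_hours: float, simulated_years: float) -> float:
    """Cost: node-hours charged per model year."""
    return nodes * wallclock_hours / simulated_years
```

For example, a hypothetical job that completes 5 model years in 12 wall-clock hours on 100 nodes runs at 10 SYPD and costs 240 node-hours per simulated year. A model with higher throughput on the same hardware finishes sooner and uses fewer node-hours.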

The team will use Perlmutter for smaller, more targeted simulations. “It’s a very flexible, robust system,” Ullrich says. It will let the team run different code configurations “very quickly, and it has much higher throughput than Theta.”

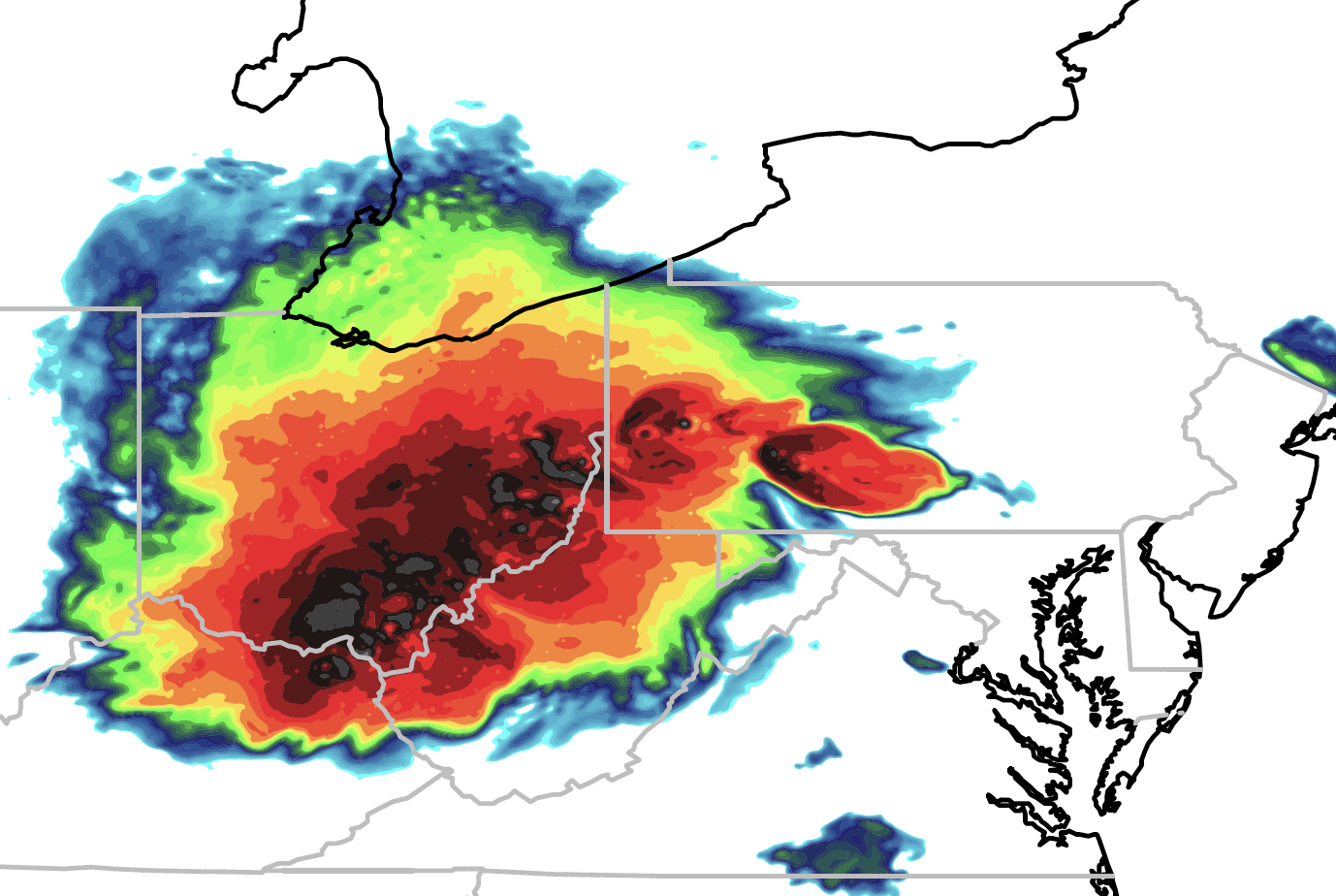

Ullrich and his colleagues plan to focus their high-resolution lens on regions containing 20 to 40 historically notable U.S. storms. They’ll run 10 simulations of each, covering the period from 1950 through 2100 with a refinement region covering the lower 48 states at a grid spacing of approximately 22 km. They aim to capture aspects of large-scale structure and frequency, plus the locations of tropical cyclones, atmospheric rivers, windstorms and winter storms. Then they’ll quantify how these characteristics change in the future.

Averaging outcomes over 10 simulations for each storm will help iron out forecasting uncertainties. “We’ll start our simulations at slightly different times, or with slightly different initial configurations, in order to trigger small-scale perturbations that lead to different evolutions of those storms,” Ullrich says.
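Why tiny differences in starting conditions lead to different storms can be illustrated with the Lorenz (1963) system, a three-variable chaotic toy model of atmospheric convection. This is an analogy, not E3SM’s ensemble method: each hypothetical member starts with its `x` value offset by a multiple of 1e-4, and after integrating to t = 30 with a fourth-order Runge-Kutta scheme the members end up in quite different states. The ensemble mean and spread then summarize the range of possible outcomes. Perturbing start times, the other strategy Ullrich mentions, is not modeled here.

```python
def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz (1963) system."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance one step with the classic fourth-order Runge-Kutta method."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz63(state)
    k2 = lorenz63(shifted(state, k1, dt / 2))
    k3 = lorenz63(shifted(state, k2, dt / 2))
    k4 = lorenz63(shifted(state, k3, dt))
    return tuple(
        s + dt / 6.0 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def run_ensemble(base_state, n_members=10, perturbation=1e-4, dt=0.01, n_steps=3000):
    """Integrate members whose initial x differs by small, member-specific offsets."""
    finals = []
    for m in range(n_members):
        state = (base_state[0] + m * perturbation, base_state[1], base_state[2])
        for _ in range(n_steps):
            state = rk4_step(state, dt)
        finals.append(state)
    return finals


members = run_ensemble((1.0, 1.0, 1.0))
xs = [s[0] for s in members]
print(f"ensemble mean x = {sum(xs) / len(xs):.2f}, spread = {max(xs) - min(xs):.2f}")
```

In this toy system the member-to-member differences grow from 10⁻⁴ to the size of the attractor itself, so a single run says little about any one outcome, while the distribution across members carries the useful information.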

In addition, the team will simulate the 20 most extreme events at even higher resolution, using the Simple Cloud-Resolving E3SM Atmosphere Model (SCREAM) at 3.5-km grid spacing and the WRF system at less than 2-km spacing. These runs will let the team compare the severity of E3SM-generated phenomena with that of historical events. Together, the large-ensemble runs and the downscaled simulations will help the researchers determine whether the worst historical extremes are as bad as it gets or whether even more damaging events are possible.
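One simple way to ask whether the historical record already contains the worst case is to pool peak values (for example, maximum wind speed or storm-total rainfall) from many simulated years and count how many exceed the largest observed value. A minimal sketch, assuming the simulated peaks are independent and directly comparable to observations (model bias correction is ignored); the function and its inputs are hypothetical, not the project’s analysis code.

```python
from typing import Optional


def exceedance_stats(simulated_peaks: list[float], historical_max: float,
                     years_simulated: float) -> tuple[int, Optional[float]]:
    """Compare simulated event peaks with the worst value on record.

    Returns the number of simulated events exceeding `historical_max` and a
    rough return period (simulated years per exceedance), or None when no
    simulated event exceeds the record.
    """
    exceed = sum(1 for p in simulated_peaks if p > historical_max)
    return_period = years_simulated / exceed if exceed else None
    return exceed, return_period
```

If, say, two events in a hypothetical 100 simulated years beat the record, the rough return period is 50 years, suggesting the observed worst case is not the ceiling. Zero exceedances would not prove the record is the maximum, only that a larger sample may be needed.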

The simulations have begun and look promising so far, Ullrich says. “The simulated climate looks excellent,” containing many small-scale features. It “captures tropical cyclones very nicely. Now, we’re looking forward to building up this large dataset that will enable us to reduce uncertainties, which will really give us a great look into the future of extreme weather.”