A computational model of an exploding star – a supernova – is the “quintessential multi-physics simulation,” says a team designing codes that will improve the speed and fidelity of such calculations.

To the ExaStar project’s astrophysicists, computer scientists and applied mathematicians, quintessential means stellar explosions are “the classic problem in high-performance computing,” or HPC, says principal investigator Daniel Kasen of Lawrence Berkeley National Laboratory. “There are so many different pieces of physics that go into these phenomena that it’s become somewhat of a standard problem.”

Quintessential, however, could refer to the archaic definition of quintessence: a fifth element (besides earth, fire, air and water) philosophers thought composed heavenly bodies. ExaStar’s goal is to help determine celestial sources of heavy elements that make up planets, pianos, people and more.

It also could allude to quintillion (a 1 followed by 18 zeroes), the number of calculations that exascale computers – soon to come on line – will perform each second. ExaStar, under the Department of Energy’s Exascale Computing Project (ECP), will capitalize on that unprecedented power.

With exascale, astrophysics simulations that used to take months to run could take days. “That’s pretty exciting,” Kasen says. “It means going beyond just doing better physics in these models. We can also start to do large surveys” of simulations. Researchers could model many stars to see which explode, which collapse to black holes and which produce particular elements. They could make predictions to compare against astronomical observations. “We’re really connecting to data and telling a full story rather than just trying to understand the base phenomena.”

The ExaStar team, which includes researchers from Berkeley Lab, Oak Ridge and Argonne national laboratories, Stony Brook and Michigan State universities and the universities of Chicago, Tennessee and California, Berkeley, calls its code base Clash, combining Castro and FLASH-X, two astrophysics programs. It’s more than a code, says team member Austin Harris, an Oak Ridge HPC performance engineer. It’s a software ecosystem, modules that, together, capture nearly all the physics driving cosmic blasts and mergers.

FLASH-X lets researchers configure physics, such as hydrodynamics and nuclear reactions, in their models. As part of the ECP, developers upgraded the code, now the team’s primary development and testing vehicle, as reported in an International Journal of High-Performance Computing Applications paper. ExaStar researchers have optimized existing modules and written new ones for a range of physics.

Berkeley Lab’s Castro, named for compressible astrophysics, models high-speed flows using AMReX, an adaptive mesh refinement (AMR) code suite also tailored for exascale. AMR focuses computational power where it’s most needed, such as a supernova’s element-forming regions.
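To make the AMR idea concrete, here is a toy Python sketch of the first step in any refinement scheme: deciding which cells deserve finer resolution. It is not AMReX's algorithm or API. The function name, the density profile and the threshold are all hypothetical, chosen only to show that a sharp front ends up flagged while smooth regions do not.

```python
import numpy as np

def flag_cells_for_refinement(values, threshold):
    """Mark cells whose jump to a neighboring cell exceeds the threshold."""
    jumps = np.abs(np.diff(values))      # jump between each pair of neighbors
    flags = np.zeros(values.shape, dtype=bool)
    flags[:-1] |= jumps > threshold      # left cell of each steep jump
    flags[1:] |= jumps > threshold       # right cell of each steep jump
    return flags

# Hypothetical 1D density profile with a sharp front near x = 0.5
x = np.linspace(0.0, 1.0, 64)
density = 1.0 + 9.0 / (1.0 + np.exp((x - 0.5) / 0.01))

flags = flag_cells_for_refinement(density, threshold=0.5)
refined_fraction = flags.mean()          # share of cells that would be refined
```

Only the handful of cells around the front are flagged, so a finer grid would be spent there rather than across the whole domain.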

The hard part: adapting the modules to run on the graphics processing units (GPUs) today’s fastest supercomputers use to attain fantastic speeds. “None of these codes, when we started, were adapted well to running on GPUs,” Harris says.

Another challenge: uniting modules into a single simulation. Each is responsible for a particular component, such as gravity or nuclear reactions, but they’re interdependent, consuming and producing one another’s output. Argonne’s Anshu Dubey led development of an orchestration system that manages physics modules, evolving them individually and scheduling their dependencies so the whole simulation runs smoothly and efficiently.

The orchestration system also lets scientists use a supercomputer with minimal fuss and recoding to adapt legacy software or code only tested on small machines. Users can dictate dependencies and what data is needed and when. They needn’t worry about which processor types make up the supercomputer, because the layer fits new physics into the main simulation.
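The core idea of dependency-driven scheduling can be sketched in a few lines of Python. This is only an illustration, not the ExaStar orchestration system: the module names and the dependencies among them are hypothetical, and the ordering relies on the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical modules, each mapped to the modules whose output it needs first.
# These couplings are illustrative, not the real physics dependencies.
dependencies = {
    "gravity": set(),
    "hydrodynamics": {"gravity"},
    "neutrino_transport": {"hydrodynamics"},
    "nuclear_reactions": {"hydrodynamics"},
    "equation_of_state": {"nuclear_reactions", "neutrino_transport"},
}

def schedule(deps):
    """Return a run order in which every module follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())

order = schedule(dependencies)
```

A user in this toy setup declares only what each module needs; the run order falls out of the graph, which is the kind of bookkeeping the article says the orchestration layer takes off scientists' hands.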

Such calculations demand the precision and power exascale will provide. The codes will resolve some physics at a resolution of 100 meters in an overall domain spanning about 50,000 kilometers, the paper says.
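A quick back-of-the-envelope calculation in Python shows what those two numbers imply. The uniform grid is a hypothetical comparison, not something the team proposes: it simply shows how many cells would be needed if the entire domain were covered at the finest scale.

```python
# Figures quoted above: ~50,000 km domain, 100 m finest scale
domain_m = 50_000 * 1_000            # domain width in meters
finest_cell_m = 100                  # finest cell width in meters

cells_per_side = domain_m // finest_cell_m    # 500,000 cells along one axis
uniform_cells_3d = cells_per_side ** 3        # 1.25e17 cells for a uniform 3D grid
```

More than a hundred quadrillion cells, before any physics is stored in them, is why fine resolution has to be confined to the regions that need it.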

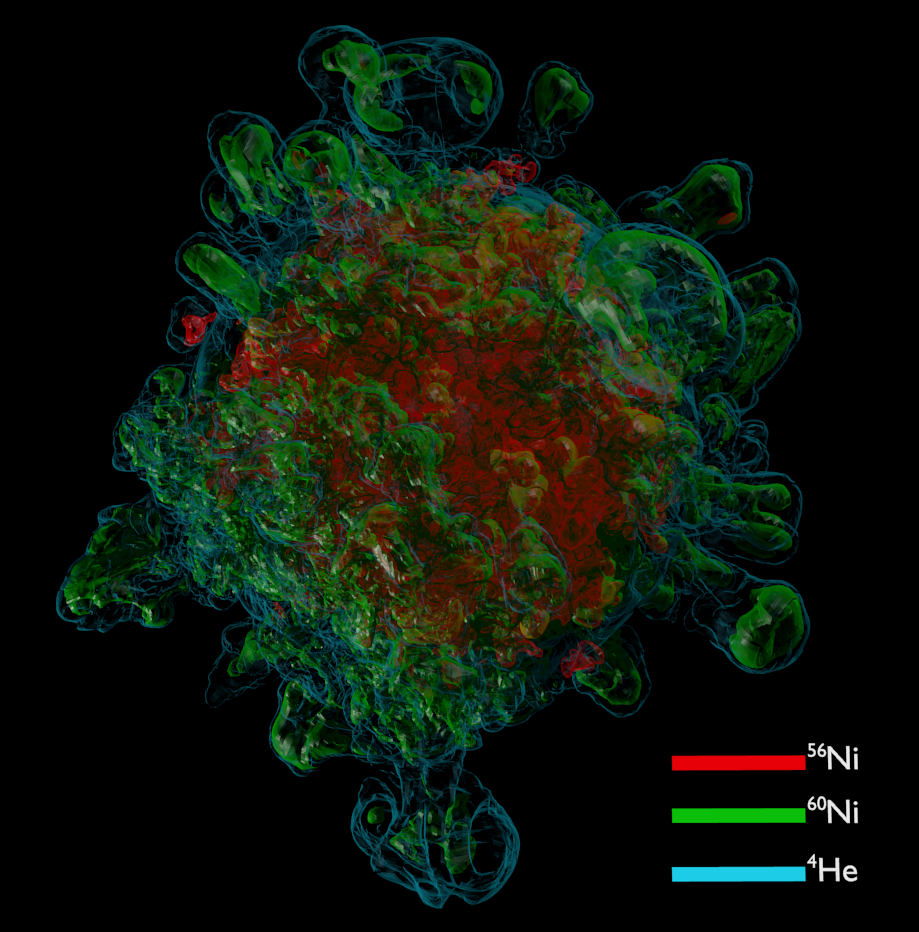

The simulation also tracks submicroscopic nuclear reactions that occur within microseconds, physics that today’s models must approximate. The ExaStar team wants to avoid or improve such estimates and clarify the picture of nucleosynthesis – nuclear reactions that produce elements, especially ones heavier than iron.

Another major approximation the team attacked: neutrinos moving through and fueling a supernova. Because these light particles interact weakly with other particles and forces, gravity can’t bind them to the neutron star formed when the core collapses, Kasen says. “We think the neutrinos are mediating the explosion by carrying energy from the very dense nugget of the core to the outer layers of the star.”

Astrophysicists can’t precisely simulate that – and therefore can’t fully grasp how a supernova happens. Besides three spatial dimensions, a neutrino-tracking code also must account for three dimensions in momentum space. “Instead of solving a three-dimensional problem for one time step, we have to solve a six-dimensional problem,” Harris says. He’s lead author of the paper, which notes that neutrino transport is “the computational bottleneck in astrophysical simulations that include it.”

Fortunately, “the thing that makes it computationally difficult is also the thing that makes it suited to perform well” at exascale, Harris says. GPUs specialize in handling the multitude of floating-point operations that neutrino transport models demand. The team also tapped a technique to frame the calculations as linear algebra equations, another GPU forte.
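The article does not name the technique, so the following Python sketch is only a generic illustration of why a linear algebra framing suits GPUs. It assumes, hypothetically, that each spatial cell applies its own small dense operator to that cell's momentum-space values. Written as a loop, the work is done one cell at a time; written as a single batched product, it becomes one large, regular operation of the kind GPU libraries accelerate. NumPy runs on the CPU here, and all sizes are made up.

```python
import numpy as np

rng = np.random.default_rng(0)
n_cells, n_bins = 1000, 16           # hypothetical problem sizes

# One small dense operator per cell, acting on that cell's momentum-space vector
operators = rng.random((n_cells, n_bins, n_bins))
distribution = rng.random((n_cells, n_bins))

# Loop form: one matrix-vector product per cell
looped = np.stack([operators[i] @ distribution[i] for i in range(n_cells)])

# Batched form: the same arithmetic expressed as a single operation
batched = np.einsum("cij,cj->ci", operators, distribution)
```

Both forms give the same numbers; the batched version exposes all the independent products at once.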

Even with exascale power, Harris says neutrino transport “will still be the limiting factor on how realistic the physics we’re able to resolve will be,” requiring approximations the researchers continue to narrow.

The codes also will compute the nuclear reactions fueling nucleosynthesis, using a version of XNet, which calculates the rate of nuclear energy deposited to the supernova’s fluid and evolves elements. Instead of the 10 to 20 isotopes that today’s simulations portray, the ExaStar team aims to capture 100 to 3,000. In tests, the team used XNet in FLASH-X to track 160 nuclear species.
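What it means to evolve a set of species can be shown with a toy reaction chain in Python. This is not XNet and makes no claim about how XNet integrates its equations. The three species, the rates and the backward Euler method are all assumptions picked for illustration, and real networks are nonlinear and far larger.

```python
import numpy as np

def backward_euler_step(abundances, rate_matrix, dt):
    """Advance dY/dt = R @ Y by one implicit step: (I - dt*R) Y_new = Y_old."""
    identity = np.eye(len(abundances))
    return np.linalg.solve(identity - dt * rate_matrix, abundances)

# Hypothetical chain A -> B -> C with made-up rates (per second)
k_ab, k_bc = 1.0e3, 1.0
rates = np.array([
    [-k_ab, 0.0, 0.0],    # A is destroyed
    [k_ab, -k_bc, 0.0],   # B is made from A and destroyed
    [0.0, k_bc, 0.0],     # C is made from B
])

y = np.array([1.0, 0.0, 0.0])         # start with everything in species A
for _ in range(100):                  # 100 steps of 0.1 s
    y = backward_euler_step(y, rates, dt=0.1)
```

The two rates differ by a factor of a thousand, a mild version of the stiffness that makes reaction networks expensive. The implicit step stays stable at a time step far longer than the fast reaction's timescale, and the total abundance is conserved because each column of the rate matrix sums to zero.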

Even with exascale power and ExaStar’s algorithmic improvements, it will still take weeks to model just the first seconds of a supernova – the cataclysmic event’s critical part. But that’s an enormous improvement over the months current models consume, and past those initial seconds the model becomes simpler, with calculations carrying the supernova through days.

The output: visualizations of supernovae and other celestial phenomena, and predicted observations, including spectra – the composition of light from the blast – and light curves, or the rate at which objects brighten and fade.

“That will be powerful, because with spectra you see features that indicate particular elements” in the explosion debris, Kasen says. Astrophysicists also can determine how fast the material is moving, the energy driving it, and more. “There’s a lot we can tell and test against our models.”

The simulations also will relate to experiments replicating nucleosynthesis on a small scale. For example, DOE’s Facility for Rare Isotope Beams at Michigan State will gather data on uncommon neutron-rich isotopes. It’s “another connection we’re excited about, that our simulations will be able to take data from these new experiments on the tiny scale of nuclei and put it into codes that are modeling things on astronomical scales,” Kasen says.

Kasen is one of many researchers eager to use the code suite. His prime project: the role of neutron star mergers in nucleosynthesis.

“There are still a lot of questions about how these elements are formed, what really happens when two neutron stars merge and collide, what we can learn about superdense matter, about gravity,” he says. “I’m really excited about that problem.”