The Department of Energy (DOE) X-ray light source complex is a physical-science tool belt that stretches from Long Island to suburban Chicago to the San Francisco Bay Area, comprising five ultra-bright scientific facilities at four national laboratories.

Researchers use these light sources to glean physical and chemical data from experiments in biology, astrophysics, quantum materials, catalysis and other chemistry disciplines, generating millions of gigabytes of data annually, rolling in hour by hour, day by day.

Moore’s Law, the observation that the number of transistors on a chip (and, roughly, computing power) doubles about every two years, can’t keep pace as detectors get faster and beams brighter, increasing spatial and temporal resolution. The result is ever-growing mountains of data. Meanwhile, experiments also are getting more complex, measuring increasingly sensitive quantities and merging information from multiple sources. Learning the best ways to use these advanced experiments is critical to maximizing their output and impact.

Keeping up with all that burgeoning information and complexity requires advanced mathematics and state-of-the-art algorithms. To meet these challenges, the DOE Office of Science’s Advanced Scientific Computing Research and its Basic Energy Sciences program fund a research project called CAMERA, the Center for Advanced Mathematics for Energy Research Applications.

Since its 2010 launch at Lawrence Berkeley National Laboratory, CAMERA has worked with each light source, decoding information hidden in the most complex investigations, automating experiments to economically uncover key phenomena, triaging hordes of data and customizing methods that store and compress results while remaining faithful to the underlying science.

“As DOE science embraces faster detectors, brighter light sources and more complicated experiments, there’s a lot to do,” says James Sethian, James H. Simons Chair in Mathematics at the University of California, Berkeley, Berkeley Lab senior faculty science group lead and CAMERA director. That to-do list includes working out what the output data reveal about a model’s inputs, determining the best way to do experiments based on previous results, and identifying the similarities, patterns and connections that sets of experiments share.

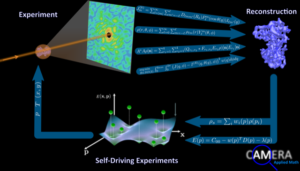

The CAMERA project couples applied mathematics with data science to accelerate, automate and analyze experiments. Clockwise from top left: Data from a light-source scattering experiment are interpreted via multitiered iterative projections (the four arrows), through which the data are reconstructed. Gaussian processes (bottom right) suggest and drive new experiments. All work together in an autonomous loop to optimize sophisticated instruments. Image courtesy of J. Donatelli, M. Noack and J.A. Sethian, University of California, Berkeley/Lawrence Berkeley National Laboratory.

At Brookhaven National Laboratory, for example, CAMERA aims at autonomous, self-steering materials experiments. Intuition or a measurement plan usually drives such experiments. Neither is efficient. As an alternative, CAMERA has developed a powerful method to guide experiments that uses data as they are collected to ensure that measurements are taken where more information is needed and uncertainty is high.

A CAMERA software framework called gpCAM helped Brookhaven researchers increase beam use at the Complex Materials Scattering beamline from 15 percent of its capacity to more than 80 percent in case studies. The framework was developed as a joint project connecting Kevin Yager of Brookhaven’s Center for Functional Nanomaterials, Masa Fukuto of BNL’s National Synchrotron Light Source-II and CAMERA lead mathematician Marcus Noack, who notes that “this ability to automatically assess data as it is collected and then suggest new directions has applications across experimental science.”

Preliminary tests on biological spectroscopy data, acquired at the Berkeley Synchrotron Infrared Structural Biology beamline, showed that gpCAM could cut the amount of data required for a valid experimental result by up to 20-fold. And at the neutron facilities at the Institut Laue-Langevin, gpCAM reduced the beamline time needed to generate experimental results from days to one night.
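The core idea behind this kind of self-steering experiment can be sketched in a few lines. The example below is a minimal, hypothetical illustration, not gpCAM’s code or API: it fits a zero-mean Gaussian process with an assumed squared-exponential kernel to the measurements taken so far, then proposes the next measurement where the model’s posterior variance (its uncertainty) is largest. The `measure()` function, the length scale, the noise level and the candidate grid are all made-up stand-ins for a real beamline.

```python
import math


def rbf_kernel(x1, x2, length_scale=0.2, variance=1.0):
    """Squared-exponential covariance between two scalar inputs (assumed kernel)."""
    return variance * math.exp(-0.5 * ((x1 - x2) / length_scale) ** 2)


def cholesky(a):
    """Return lower-triangular L with L @ L^T == a (a must be symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def solve_lower(low, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(low[i][k] * y[k] for k in range(i))) / low[i][i])
    return y


def solve_upper_from_lower(low, y):
    """Back substitution: solve L^T x = y using the same lower-triangular L."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(low[k][i] * x[k] for k in range(i + 1, n))) / low[i][i]
    return x


def gp_posterior(xs, ys, x_star, noise=1e-6):
    """Posterior mean and variance of a zero-mean GP at x_star, given data (xs, ys)."""
    k = [[rbf_kernel(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    low = cholesky(k)
    alpha = solve_upper_from_lower(low, solve_lower(low, ys))  # K^-1 y
    k_star = [rbf_kernel(x_star, a) for a in xs]
    mean = sum(ks * al for ks, al in zip(k_star, alpha))
    v = solve_lower(low, k_star)
    variance = rbf_kernel(x_star, x_star) - sum(vi * vi for vi in v)
    return mean, max(variance, 0.0)


def next_measurement(xs, ys, candidates):
    """Suggest the candidate point where the model is most uncertain."""
    return max(candidates, key=lambda c: gp_posterior(xs, ys, c)[1])


if __name__ == "__main__":
    def measure(x):
        """Hypothetical stand-in for a beamline measurement at sample position x."""
        return math.sin(6.0 * x)

    grid = [i / 20 for i in range(21)]  # hypothetical positions the instrument can visit
    xs, ys = [0.0, 1.0], [measure(0.0), measure(1.0)]
    for _ in range(4):  # autonomous loop: model, suggest, measure, repeat
        x_next = next_measurement(xs, ys, grid)
        xs.append(x_next)
        ys.append(measure(x_next))
    print("measured positions:", xs)
```

Starting from measurements at the two ends of the range, the first suggestion is the midpoint, the spot farthest from any existing data. Practical autonomous-experiment tools typically also fit the kernel’s hyperparameters to the data and balance uncertainty against other goals; choosing the maximum-variance point is only the simplest acquisition rule.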

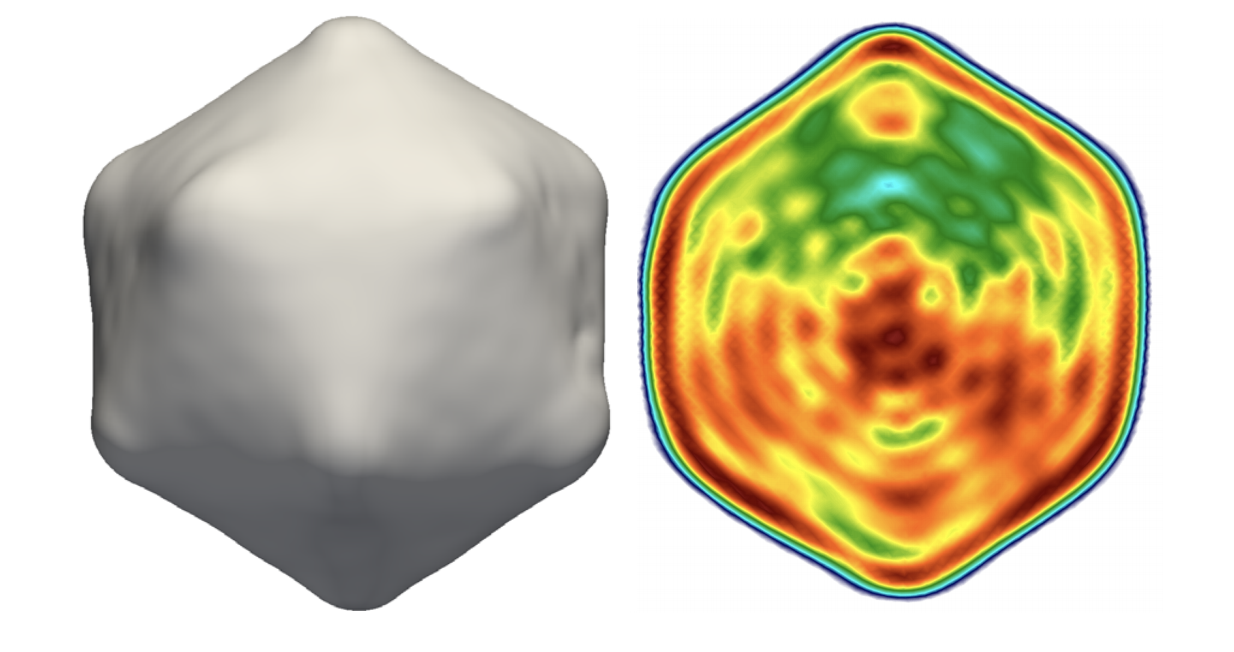

At Argonne National Laboratory’s Advanced Photon Source (APS) near Chicago, CAMERA is helping to reconstruct images in three dimensions.

A planned upgrade for the APS’ synchrotron light source will focus more X-rays than ever before on a tighter spot, enabling a host of new experiments. Coherent surface-scattering imaging, or CSSI, is an emerging technique that harnesses these new capabilities to probe the nanoscale surface structure of biological objects and materials in unprecedented detail. But CSSI presents challenges because light in samples scatters multiple times before reaching the detector.

“This behavior severely complicates CSSI data analysis, preventing standard techniques from reconstructing 3-D structure from the data,” says Jeffrey Donatelli, who leads CAMERA mathematicians in a collaboration with Argonne’s Miaoqi Chu, Zhang Jiang and Nicholas Schwarz. Together, they applied an algorithm Donatelli devised, called M-TIP (multitiered iterative phasing), to the CSSI reconstruction problem. These analytic tools will be essential capabilities for a dedicated CSSI beamline to be built at the upgraded APS.

M-TIP breaks a complex problem into solvable subparts, which then are stitched together in an iteration that converges toward the right answer. Donatelli, an alumnus of the DOE Office of Science’s Computational Science Graduate Fellowship, has used M-TIP to solve longstanding problems in other experimental techniques.
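M-TIP itself is considerably more sophisticated, but the basic pattern of splitting a reconstruction into subproblems that are each easy to solve, then alternating between them, can be illustrated with Fienup’s classic error-reduction algorithm for phase retrieval. In this sketch, which is an illustrative stand-in and not M-TIP, a hypothetical one-dimensional object is known only through the magnitudes of its Fourier transform. One subproblem restores those measured magnitudes in Fourier space; the other enforces that the object is real, non-negative and zero outside an assumed known support. The signal, support and iteration count are invented for illustration.

```python
import cmath
import random


def dft(x):
    """Plain O(n^2) discrete Fourier transform (fine for a tiny toy signal)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Inverse of dft() above."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def project_magnitudes(spectrum, magnitudes):
    """Fourier-space subproblem: keep each current phase, impose the measured magnitude."""
    out = []
    for value, mag in zip(spectrum, magnitudes):
        phase = value / abs(value) if abs(value) > 1e-12 else 1.0
        out.append(mag * phase)
    return out


def project_support(signal, support):
    """Real-space subproblem: nearest real, non-negative signal that is zero off-support."""
    return [max(v.real, 0.0) if inside else 0.0 for v, inside in zip(signal, support)]


def fourier_error(g, magnitudes):
    """Squared mismatch between the estimate's Fourier magnitudes and the measured ones."""
    return sum((abs(v) - m) ** 2 for v, m in zip(dft(g), magnitudes))


def error_reduction(magnitudes, support, iterations=200, seed=0):
    """Alternate between the two projections, starting from a random guess."""
    rng = random.Random(seed)
    g = project_support([rng.random() for _ in magnitudes], support)
    errors = [fourier_error(g, magnitudes)]
    for _ in range(iterations):
        g = project_support(idft(project_magnitudes(dft(g), magnitudes)), support)
        errors.append(fourier_error(g, magnitudes))
    return g, errors


if __name__ == "__main__":
    # Hypothetical 1-D object: six non-zero samples followed by zeros.
    true_signal = [1.0, 3.0, 2.0, 4.0, 1.0, 2.0] + [0.0] * 10
    support = [i < 6 for i in range(len(true_signal))]
    # The "measurement": Fourier magnitudes only; the phases are lost.
    magnitudes = [abs(v) for v in dft(true_signal)]
    estimate, errors = error_reduction(magnitudes, support)
    print(f"Fourier-magnitude error: {errors[0]:.3f} -> {errors[-1]:.3g}")
```

Because each step is an exact projection onto one constraint set, the Fourier-space error can never increase from one pass to the next. Simple schemes like this can stall in poor solutions, however, which motivated the more elaborate iterative projection methods that followed.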

The team has developed several mathematical tools that directly incorporate the physics of multiple scattering effects in CSSI data into M-TIP, providing the first approach capable of solving the 3-D CSSI reconstruction problem.

Closer to home, at the SLAC National Accelerator Laboratory’s Linac Coherent Light Source-II (LCLS-II), CAMERA is applying M-TIP to a technique called fluctuation X-ray scattering to remove motion blur from biomolecular scattering images.

X-ray crystallography or electron microscopy can reveal the precise structures of virus particles, proteins and other biomolecules down to the arrangement of individual atoms. But these snapshots are static, and the experiments are done at extremely cold temperatures. Some X-ray scattering techniques can supplement these images, but their exposures typically take about a second – long enough for the target molecules to completely reorient themselves. The result is similar to taking a CT scan of a restless toddler: Motion blur fogs the pictures.


A technique from the 1970s called fluctuation X-ray scattering could have reduced the snapshot time and eliminated motion blur. But the idea stalled because there was no mathematical algorithm to execute this approach.

The advent of X-ray free-electron lasers (XFELs) such as LCLS-II has made it possible to collect the necessary data. Donatelli and CAMERA biophysicist Peter Zwart realized M-TIP could resolve the imaging ambiguities and devised a systematic algorithm based on the original fluctuation X-ray scattering theory. They worked with a team at SLAC and Germany’s Max Planck Institute to demonstrate the idea’s feasibility by solving the structure of a virus particle from experimental data.
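Why can short snapshots of randomly oriented molecules still carry structural information? A key ingredient of fluctuation scattering is to correlate intensities within each snapshot before averaging, rather than averaging the intensities themselves. The toy sketch below is a simplification and not the CAMERA algorithm: it assumes one particle per snapshot rotating only in the detector plane, so a rotation just shifts intensity around one ring of the detector. The ring pattern and its angular sampling are hypothetical.

```python
import math

N_ANGLES = 36  # hypothetical number of angular samples on one detector ring


def ring_intensity(phi):
    """Hypothetical anisotropic scattering on one ring (toy model, not real data)."""
    return 1.0 + 0.5 * math.cos(3 * phi) + 0.2 * math.sin(5 * phi)


def rotate(ring, steps):
    """An in-plane rotation of the particle cyclically shifts intensity around the ring."""
    n = len(ring)
    return [ring[(p - steps) % n] for p in range(n)]


def angular_autocorrelation(ring):
    """C(d): average over angles phi of I(phi) * I(phi + d), with d in angular steps."""
    n = len(ring)
    return [sum(ring[p] * ring[(p + d) % n] for p in range(n)) / n for d in range(n)]


def elementwise_mean(rows):
    """Average a list of equal-length lists position by position."""
    return [sum(column) / len(rows) for column in zip(*rows)]


base = [ring_intensity(2 * math.pi * p / N_ANGLES) for p in range(N_ANGLES)]
snapshots = [rotate(base, s) for s in range(N_ANGLES)]  # one snapshot per orientation

# Long exposure: averaging intensities over all orientations flattens the ring.
blurred = elementwise_mean(snapshots)
# Fluctuation-style analysis: correlate within each snapshot, then average.
correlated = elementwise_mean([angular_autocorrelation(r) for r in snapshots])

print("intensity spread after averaging:", max(blurred) - min(blurred))
print("correlation spread after averaging:", max(correlated) - min(correlated))
```

The averaged intensity comes out flat, while the averaged autocorrelation is identical to that of a single, unrotated snapshot. Real molecules tumble in three dimensions, and the actual theory relates such correlations to molecular structure through considerably more mathematics; the toy only shows why correlations survive orientational averaging.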

“This sort of cross-disciplinary collaboration really shows the advantage of putting co-design teams together,” Zwart says. This new approach can extract molecular shape from fluctuation scattering data and is an important component of the ExaFEL project, which applies exascale computing (about four times faster than today’s top supercomputer) to XFEL challenges. The Advanced Scientific Computing Research program supports CAMERA and ExaFEL.

For another SLAC project, at the Stanford Synchrotron Radiation Lightsource (SSRL), postdoctoral scholar Suchismita Sarker uses CAMERA-developed gpCAM to sift a multitude of possibilities for environmentally friendly materials in alloys with complex compositions. DOE’s Office of Energy Efficiency and Renewable Energy supports the project, which SSRL’s Apurva Mehta leads.

Developing materials that can efficiently convert heat into electricity requires assessing a complex set of often conflicting properties. Trial-and-error searches through multidimensional data sets to evaluate the properties of hundreds of potential alloys are slow and computationally expensive. But gpCAM can help guide researchers through the vast universe of possibilities, predicting the experimental values and uncertainties needed to create the best target materials.

At Berkeley Lab’s Advanced Light Source (ALS), Alex Hexemer, Pablo Enfedaque, Dinesh Kumar, Hari Krishnan, Kanupriya Pande, Ron Pandolfi, Dula Parkinson and Daniela Ushizima combine software engineering, tomographic experiments, algorithms and computer vision to seamlessly integrate and orchestrate data creation and management, facilitate real-time streaming visualization and customize data-processing workflows.

A different challenge stems from analyzing images without the luxury of reference libraries to compare them against. This rules out typical machine-learning approaches, which require millions of training images as input. It can take weeks to hand-annotate just a few precisely defined images for many scientific applications.

So CAMERA mathematicians Daniël Pelt, now at CWI Amsterdam, and Sethian are developing MS-D (mixed-scale dense convolutional neural networks), which need far fewer parameters than conventional approaches and can learn from a small set of training images. Scientists now use this technique for a host of applications, including a project at Berkeley Lab’s National Center for X-ray Tomography with the University of California, San Francisco, School of Medicine on how form and structure influence and control cellular behavior.
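For readers curious about the architecture, the sketch below shows a one-dimensional, width-1 toy network in the spirit of MS-D. It is written from scratch, untrained, and not the authors’ implementation. It illustrates two ideas: mixed scales, where each layer uses a dilated convolution with its own dilation (the schedule cycling from 1 to 10 here is an assumption), and dense connectivity, where every layer reads all earlier feature maps, including the input, and a final 1×1 layer combines them all. Because feature maps are reused rather than recomputed, the parameter count stays small. Zero padding at the edges, the depth and the random initial weights are simplifications chosen for illustration.

```python
import random


def dilated_conv1d(signal, weights, dilation):
    """3-tap dilated convolution with zero padding: taps at offsets -d, 0, +d."""
    n = len(signal)
    out = []
    for i in range(n):
        acc = 0.0
        for tap, offset in zip(weights, (-dilation, 0, dilation)):
            j = i + offset
            if 0 <= j < n:
                acc += tap * signal[j]
        out.append(acc)
    return out


def relu(x):
    return max(x, 0.0)


class TinyMSD1D:
    """Width-1, 1-D toy in the spirit of MS-D: every layer sees all earlier feature maps."""

    def __init__(self, depth=8, seed=0):
        rng = random.Random(seed)
        self.dilations = [(i % 10) + 1 for i in range(depth)]  # assumed schedule 1..10
        # Layer i reads (1 + i) maps (the input plus i earlier layers): 3 taps each, one bias.
        self.layers = [
            ([[rng.gauss(0.0, 0.3) for _ in range(3)] for _ in range(1 + i)], 0.0)
            for i in range(depth)
        ]
        # Final 1x1 layer: one weight per map (input plus every layer) and one bias.
        self.out_weights = [rng.gauss(0.0, 0.3) for _ in range(1 + depth)]
        self.out_bias = 0.0

    def forward(self, signal):
        maps = [list(signal)]
        for (kernels, bias), d in zip(self.layers, self.dilations):
            total = [bias] * len(signal)
            for fmap, kernel in zip(maps, kernels):  # dense: sum over ALL earlier maps
                total = [t + c for t, c in zip(total, dilated_conv1d(fmap, kernel, d))]
            maps.append([relu(v) for v in total])
        return [
            self.out_bias + sum(w * fmap[i] for w, fmap in zip(self.out_weights, maps))
            for i in range(len(signal))
        ]

    def parameter_count(self):
        conv = sum(len(kernels) * 3 + 1 for kernels, _ in self.layers)
        return conv + len(self.out_weights) + 1


if __name__ == "__main__":
    net = TinyMSD1D(depth=8)
    profile = [float(i % 5) for i in range(32)]  # hypothetical 1-D input
    print(len(net.forward(profile)), "outputs;", net.parameter_count(), "parameters")
```

With a depth of 8, this toy has 126 trainable numbers: 116 in the dilated layers and 10 in the final combination layer. Training (fitting those weights to a few annotated examples) is omitted here.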

That’s the technical side. CAMERA also has a human side, Sethian says, that introduces its own complexities. “Building interdisciplinary teams of beamline scientists, physicists, chemists, materials scientists, biologists, computer scientists, software engineers and applied mathematicians is a wonderfully challenging problem, but it can take time for collaborators to develop a common language and understanding.”

To a mathematician, success might mean an airtight paper and publication. To a beamline scientist, success might be a tool that converts experimental data into information and insight. To a software engineer, it might be tested, downloaded and installed code.

“Figuring out how to align these different goals and expectations takes a lot of time,” Sethian says, “but is a key part of CAMERA’s success.”