The Department of Energy’s Los Alamos National Laboratory (LANL) is taking an early plunge into a kind of computer so revolutionary that it remains an open question whether the technology actually works as advertised.

Made by D-Wave Systems Inc. of Burnaby, British Columbia, the $10 million device is designed to use quantum physics to boost calculating power beyond what is possible in our normal, classical computing world.

Trinity, LANL’s latest massive state-of-the-art supercomputer, has about 2 petabytes of memory. By comparison, LANL’s D-Wave 2X would have only about 136 bytes of available memory in classical terms. But the potential promise is much larger. A quantum computer “can exploit vastly more computational parallelism than any conceivable classical supercomputer,” says Scott Pakin, a LANL computer scientist for 15 years who serves as the D-Wave scientific and technical point of contact.

The new machine also could help address looming high-performance computing (HPC) obstacles, notes Mark Anderson, director of advanced simulation and computing and institutional R&D at the DOE National Nuclear Security Administration and formerly of LANL. “As conventional computers reach their limits of scaling and performance per watt, we need to investigate new technologies to support our mission.”

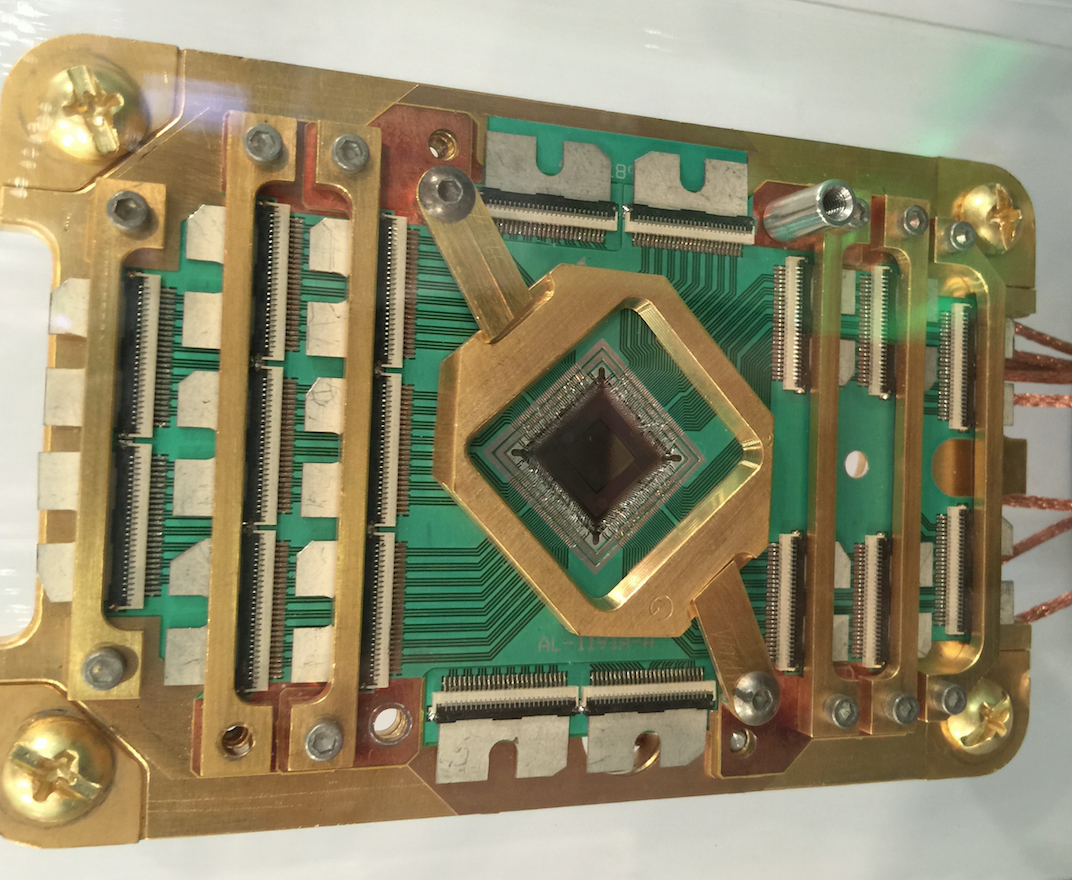

Quantum computers operate using computational units called qubits rather than classical bits, and LANL’s D-Wave 2X has about 1,000 of them to work with. The action takes place inside a single fingernail-sized chip refrigerated to about 0.01 degrees Celsius above absolute zero. The chip sits inside a 10-by-10-by-12-foot black box, radiation-shielded to block interference from subatomic particles such as cosmic rays.

Pakin is both enthusiastic and realistic about what amounts to a gamble with quantum computing. “It’s a fascinating subject because it’s such virgin territory,” he says. “Everything is unknown. We don’t know how to build these well. We don’t know what to do with them. Every stone you overturn has something exciting hidden underneath.”

Quantum computers come in two “flavors,” Pakin says. The first, called the gate or circuit model, is “pretty analogous to a digital circuit.” That means a gate-model algorithm takes in and spits out the familiar 0s and 1s of normal computing.

From there the analogy becomes strained, because quantum gates are not ordinary logic gates that turn one set of 0s and 1s into another. Instead, quantum gates manipulate qubits, which “start to adopt unusual quantum properties,” he explains.

A qubit can represent not only 0 or 1 but infinitely many combinations of the two: values that are neither purely 0 nor purely 1 but lean, to varying degrees, toward one or the other. This is superposition, in which the qubit simultaneously has properties of both states. Qubits can also become entangled, so that some change in lockstep with others, even when they are in separate locations. Thus, “qubits are able to achieve a lot more than classical bits can,” Pakin says.
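The qubit behavior described above can be sketched numerically. The Python below is a minimal illustration that assumes the textbook state-vector picture rather than anything specific to D-Wave hardware: a single qubit is a pair of amplitudes whose squares give the odds of reading 0 or 1, and an entangled pair (the standard Bell state) always yields matching bits when measured. The function names are hypothetical, and real amplitudes are used for simplicity where physics allows complex ones.

```python
import math
import random

# Hypothetical helpers for illustration only.
# A single qubit is a pair of amplitudes (alpha, beta) for the outcomes 0 and 1,
# normalized so that alpha**2 + beta**2 == 1 (real amplitudes for simplicity).


def qubit(theta):
    """Return amplitudes (cos(theta/2), sin(theta/2)); theta=0 is 0, theta=pi is 1."""
    return (math.cos(theta / 2), math.sin(theta / 2))


def probabilities(state):
    """Probability of reading 0 or 1 when the qubit is measured."""
    alpha, beta = state
    return (abs(alpha) ** 2, abs(beta) ** 2)


def measure(state, rng):
    """Sample one classical bit: the superposition yields a single 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


# Two entangled qubits in the Bell state (|00> + |11>) / sqrt(2).
# Amplitudes are listed for the outcomes 00, 01, 10, 11.
BELL = (1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2))


def measure_pair(state, rng):
    """Sample a two-bit outcome; for BELL the two bits always agree."""
    outcomes = ("00", "01", "10", "11")
    weights = [abs(a) ** 2 for a in state]
    return rng.choices(outcomes, weights=weights)[0]
```

Because `theta` can take any value between 0 and pi, a single qubit has infinitely many possible states, yet each call to `measure` still returns one 0 or 1. For `BELL`, `measure_pair` only ever returns `"00"` or `"11"`, which is the lockstep behavior of entangled qubits.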


In a company video, D-Wave physicist Dominic Walliman says gate-model systems have the ability “to control and manipulate the evolution of that quantum state over time. Having that amount of control means that you can solve a bigger class of problems.”

Unfortunately, gate-model systems also “tend to be incredibly delicate to work with,” Walliman says. As a result, present-day gate-model machines are prone to errors that are exceptionally hard to correct, a problem Pakin calls “probably one of their biggest show-stoppers.”

D-Wave and Los Alamos have chosen a competing approach called quantum annealing. The name borrows from metallurgical annealing, in which metals are heated and then cooled slowly so that they crystallize, shedding as much energy as they can in the process.

Classical computers can also run a version of annealing, known as simulated annealing, in which “you’re trying to find the minimum value of some function,” Pakin says. Quantum annealers “begin with a comparatively easy-to-express classical problem” but then “use quantum effects to solve it more efficiently.”
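Pakin’s description of the classical version, searching for the minimum value of a function, matches the standard simulated annealing algorithm. The Python below is a minimal sketch under our own assumptions (a one-dimensional function, a geometric cooling schedule, uniform random steps, and a made-up `double_well` test function); it is not LANL’s or D-Wave’s code. It escapes shallow valleys by occasionally accepting uphill moves while the “temperature” is high, the classical counterpart of what quantum annealers attempt with quantum effects.

```python
import math
import random


def simulated_annealing(f, x0, steps=20000, t_start=2.0, t_end=1e-3,
                        step_size=0.5, seed=0):
    """Minimize a 1-D function f with classical simulated annealing.

    Illustrative sketch: the schedule, step size, and defaults are arbitrary
    choices, not tuned values from any particular source. Requires steps >= 2.
    """
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best_x, best_fx = x, fx
    for i in range(steps):
        # Geometric cooling from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (i / (steps - 1))
        candidate = x + rng.uniform(-step_size, step_size)
        fc = f(candidate)
        delta = fc - fx
        # Always accept downhill moves; accept uphill moves with
        # probability exp(-delta / t), which shrinks as t cools.
        if delta <= 0 or rng.random() < math.exp(-delta / t):
            x, fx = candidate, fc
            if fx < best_fx:
                best_x, best_fx = x, fx
    return best_x, best_fx


# Example landscape (our own choice): a double well with a shallow valley
# near x = +1 and a deeper one near x = -1.
def double_well(x):
    return (x ** 2 - 1) ** 2 + 0.3 * x
```

Starting in the shallow valley, `simulated_annealing(double_well, x0=1.0)` should report a best point in the deeper valley near x = -1, because the early high-temperature steps let it climb over the barrier between the two.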

The goal, as Walliman puts it, is to “harness the natural evolution of quantum states.” Unlike gate models, “you don’t have any control over that evolution. So you set up the problem at the beginning and let quantum physics do its natural evolution.”

For lay audiences, scientists use the analogy of raindrops falling on a mountain to describe what happens during the quantum phase.

“The droplets can roll down but then get stuck in a mountain valley that’s up pretty high,” Pakin says. Quantum annealing is like a drill that can pierce that valley so the droplets flow further downhill “to a better solution,” he explains.
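The first half of the raindrop analogy, a droplet getting stuck in a high valley, is easy to reproduce classically. In this Python sketch the terrain heights in `TERRAIN` are made-up numbers, and the hypothetical `roll_downhill` function simply moves the droplet to its lowest neighbor until no neighbor is lower. It illustrates the trap that quantum tunneling is meant to get around, but it does not model tunneling itself.

```python
# Hypothetical 1-D terrain profile: a deep valley at index 5 (height 0)
# and a higher valley at index 10 (height 4), separated by a ridge.
TERRAIN = [9, 7, 5, 3, 1, 0, 2, 6, 8, 6, 4, 5, 7, 9]


def roll_downhill(heights, start):
    """Follow the lowest neighbor until no neighbor is lower.

    The droplet stops in the first valley it reaches, which may not be
    the lowest valley on the terrain.
    """
    pos = start
    while True:
        neighbors = [p for p in (pos - 1, pos + 1) if 0 <= p < len(heights)]
        lowest = min(neighbors, key=lambda p: heights[p])
        if heights[lowest] >= heights[pos]:
            return pos
        pos = lowest
```

A droplet released at index 12 settles at index 10, the higher valley, while one released at index 0 reaches the true minimum at index 5. Only where the droplet starts decides which valley it finds.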

That drilling is technically known as quantum tunneling, the seemingly spooky ability of quantum particles to pass through barriers. In D-Wave chips, this tunneling happens as supercooled electric currents pass through SQUIDs (superconducting quantum interference devices).

Even D-Wave acknowledges that its machines have a narrower range of applications than gate-model quantum computers. D-Wave machines are best at optimization problems, which Pakin describes as “finding the best something”: everything from building the best house on a tight budget to selecting car routes that minimize traffic jams.
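D-Wave’s annealers take optimization problems in a specific form: a QUBO (quadratic unconstrained binary optimization), or the equivalent Ising model, whose lowest-energy bit string encodes the best answer. The Python below sketches how a toy version of the “best house on a tight budget” problem could be written that way, assuming a budget that covers exactly two of four upgrades with made-up values. The constraint is folded into the objective as a penalty term, and a brute-force search over all bit strings stands in for the annealer; no D-Wave software is used, and the function names are hypothetical.

```python
from itertools import product


def build_qubo(values, k, penalty):
    """QUBO for: pick exactly k items while maximizing total value.

    Energy = -sum(v_i * x_i) + penalty * (sum(x_i) - k)**2, expanded using
    x_i**2 == x_i for binary x_i. The constant penalty * k**2 is dropped.
    """
    n = len(values)
    Q = {}
    for i in range(n):
        # Linear terms live on the diagonal.
        Q[(i, i)] = -values[i] + penalty * (1 - 2 * k)
        for j in range(i + 1, n):
            # Each pair of chosen items adds 2 * penalty.
            Q[(i, j)] = 2 * penalty
    return Q


def energy(Q, x):
    """Evaluate the QUBO energy: sum of Q[i, j] * x_i * x_j."""
    return sum(coef * x[i] * x[j] for (i, j), coef in Q.items())


def brute_force_minimum(Q, n):
    """Check all 2**n bit strings; only feasible for tiny n."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))
```

With `values = [5, 3, 8, 4]`, `k = 2`, and `penalty = 20`, the lowest-energy bit string is `(1, 0, 1, 0)`, choosing the two most valuable upgrades. The penalty must outweigh any gain from breaking the constraint, and brute force is practical only for a handful of variables, which is where an annealer or a classical heuristic would take over.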

Pakin says “the jury is still out” on whether D-Wave machines will have fewer error problems than gate-model quantum devices. Another shortcoming of all quantum computers is the scarcity of quantum algorithms to guide their use, he adds. A recent count found only about 60, compared to an “astronomical number” of classical algorithms. The final results add another wrinkle: A quantum computation may “internally explore many possible solutions to the same problem, but only one single answer can be returned.”

Still, Pakin sees a future for this emerging field, because today’s classical supercomputers are getting hard to improve on. “We’re reliant on more and more trickery,” he says. Perhaps by the time HPC systems reach exascale and “problems get bigger and bigger and bigger,” there will be a “crossover” for “certain problems that quantum systems are good at.”

Small Los Alamos research teams have been exploring several scientific examples with a series of internally funded projects, each lasting just a few months. One probed scattered points within Earth’s ionosphere looking for “events of interest.” Another explored how terrorist networks form and change relationships over time. “We have the hammer,” Pakin says of his lab’s D-Wave. “Now we’re looking for some nails.”

Los Alamos National Laboratory, a multidisciplinary research institution engaged in strategic science on behalf of national security, is operated by Los Alamos National Security, LLC, a team composed of Bechtel National, the University of California, BWXT Government Group, and URS, an AECOM company, for the Department of Energy’s National Nuclear Security Administration.