This article is part of a series on a DOE-NCI project to apply big data and supercomputing to cancer research.

During World War II, the Allied military recruited mathematicians and engineers to help calculate the optimal defensive strategies for aerial combat. Using only rudimentary computational tools, those pioneers laid the mathematical foundation for optimizing outcomes when dealing with multiple unpredictable variables and for quantifying uncertainty. Today, those mathematical principles provide the underpinnings for a drastically different problem: defeating cancer.

A Department of Energy (DOE) collaboration with the National Cancer Institute (NCI) is using advanced computing to help deliver precision medicine for oncology. At its heart are models based on advanced statistical theory and machine-learning algorithms. Frank Alexander of DOE’s Los Alamos National Laboratory (LANL) is leading the drive to apply these principles to the effort’s other pilot projects.

“If you want to predict the most likely location of an enemy plane at some future time, you have to have a model of how it moves, and while you’re taking measurements of its path, the measurements aren’t perfect, and the weather patterns affect how it moves and those are constantly changing,” says Alexander, acting leader of LANL’s Computer, Computational and Statistical Sciences Division.

“The engineering community developed tools for taking all that data and coming up with a prediction of where the plane would be when you want to shoot it down. When you’re talking about cancer, the mathematical formulations are more complicated, but I believe we can still hit the target.”
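The plane-tracking problem Alexander describes is what engineers now call state estimation, and the textbook tool for it is the Kalman filter (1960), a descendant of wartime fire-control research. The sketch below is a minimal illustration, not code from the DOE-NCI project: it assumes a target moving along one axis at roughly constant velocity, with random accelerations standing in for the changing weather and noisy position measurements standing in for imperfect radar. The function name `kalman_track` and all noise values are illustrative assumptions.

```python
import numpy as np

def kalman_track(measurements, dt=1.0, meas_var=4.0, accel_var=0.1):
    """Estimate position and velocity from noisy position readings
    with a 1-D constant-velocity Kalman filter (illustrative sketch)."""
    F = np.array([[1.0, dt], [0.0, 1.0]])      # motion model: pos += vel * dt
    H = np.array([[1.0, 0.0]])                  # we only observe position
    G = np.array([[0.5 * dt**2], [dt]])         # effect of a random acceleration
    Q = accel_var * (G @ G.T)                   # process noise ("weather")
    R = np.array([[meas_var]])                  # measurement noise variance
    x = np.array([[measurements[0]], [0.0]])    # initial guess: first reading, zero velocity
    P = np.eye(2) * 100.0                       # large initial uncertainty
    estimates = []
    for z in measurements:
        # Predict: push the state and its uncertainty one step forward
        x = F @ x
        P = F @ P @ F.T + Q
        # Update: blend the prediction with the new, imperfect measurement
        y = np.array([[z]]) - H @ x             # innovation (surprise)
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)          # Kalman gain: how much to trust z
        x = x + K @ y
        P = (np.eye(2) - K @ H) @ P
        # Record position, velocity and position variance (our confidence)
        estimates.append((x[0, 0], x[1, 0], P[0, 0]))
    return estimates

# Usage: a target starting at 10 and moving 3 units per step, seen through noise
rng = np.random.default_rng(0)
true_pos = [10.0 + 3.0 * t for t in range(50)]
noisy = [p + rng.normal(0.0, 2.0) for p in true_pos]
est = kalman_track(noisy)
pos, vel, var = est[-1]
predicted_at_60 = pos + vel * (60 - 49)         # extrapolate to a future time
print(f"velocity ~ {vel:.2f}, predicted position at t=60 ~ {predicted_at_60:.1f}")
```

The filter returns not just a best guess but also its variance, which is the "quantifying our confidence" half of the problem.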

Predicting cancer’s trajectory and response to different treatments can be compared to the traveling salesman problem, a classic mathematical puzzle. A salesman must visit each of a number of cities once and return home, choosing the route that minimizes total distance or travel cost. As the number of cities grows, the number of possible routes grows factorially, making this a notoriously difficult optimization problem that has intrigued mathematicians and computer scientists for decades.
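To make the "routes quickly multiply" point concrete, here is a small Python sketch (an illustration, not part of the cancer project) that counts distinct round trips and solves a tiny instance by brute force. It assumes symmetric distances, so a tour and its reverse count as one route, giving (n − 1)!/2 tours for n cities. The function names are hypothetical.

```python
import itertools
import math

def distinct_tours(n):
    """Distinct round trips through n cities with symmetric distances:
    fix the start city, and count a tour and its reverse only once."""
    return math.factorial(n - 1) // 2

def brute_force_tsp(points):
    """Check every ordering of cities 1..n-1 (starting and ending at city 0).
    This examines (n-1)! orderings, so each tour is seen in both directions."""
    n = len(points)
    best_len, best_tour = math.inf, None
    for perm in itertools.permutations(range(1, n)):
        tour = (0,) + perm + (0,)
        length = sum(math.dist(points[a], points[b]) for a, b in zip(tour, tour[1:]))
        if length < best_len:
            best_len, best_tour = length, tour
    return best_len, best_tour

# Growth of the search space
for n in (5, 10, 20):
    print(n, "cities:", distinct_tours(n), "distinct tours")

# A trivial instance: four cities at the corners of a unit square
square = [(0, 0), (1, 0), (1, 1), (0, 1)]
print(brute_force_tsp(square))
```

Exhaustive search is fine for four cities but hopeless for twenty, which is why practical solvers rely on heuristics and bounds rather than enumeration.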

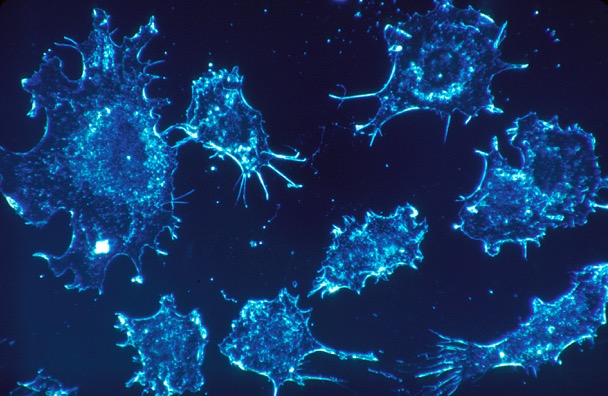

Similarly, the variables involved in predicting a cancer’s genesis, course and outcome quickly multiply into a daunting number of possibilities. Alexander focuses on building predictive models that will combine experimental and drug-response data with adaptive computational approaches. These machine-learning models are expected to integrate biological and chemical data, including structural information, and predict a given drug’s effect on a given tumor.


The relationship between the properties of drugs and those of tumors is nonlinear, Alexander says. Further, the problem’s size will require DOE’s most advanced high-performance computing resources. That scale stems from the problem’s parameters, which describe the chemical properties of a drug or compound (10⁶), a tumor’s molecular characteristics (10⁷) and drug/tumor screening results (10⁷).

Compared with battle scenarios, “biological systems are much more complex and much less is known about them,” Alexander says. “These are stochastic systems, and a lot more randomness occurs. They are also changing and evolving with time. We’re asking: How do you do optimal prediction under uncertainty? How do you take a lab-based system that is close to the system that you care about, do some experiments, and then extrapolate to the system (human health) that you can’t do experiments on?” He offers an example: “Can you do experiments on a cell line and then really accurately extrapolate to how a given human would respond?”

Although the cellular-response model has unique features, Alexander notes that the other project challenges (molecular-, cellular- and population-scale) all have common data management and data analysis needs. Crosscutting work to deal with uncertainty and large numbers of variables is expected to support the whole program.

“These projects require us to do simulations but also to support large-scale data analytics and machine learning,” Alexander says. “The vision we have for these systems is that they’re equally good at those three domains.”

Model-building is a long-term problem; the collaborators also are focused on interim goals.

Once an algorithm is in place, for instance, the research team plans to use it to help NCI figure out which experiments are most likely to help fill in missing data and reduce uncertainty in the models’ predictions.
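One common way to pick the experiment most likely to reduce a model’s uncertainty is ensemble-based uncertainty sampling (often called query by committee): train several models, ask each to predict the outcome of every candidate experiment, and run the experiments where they disagree most. The article does not say which method the team uses, so the Python sketch below is only an illustration of the general idea. The candidates (stand-ins for, say, drug doses) and the three toy "models" are hypothetical.

```python
import statistics

def pick_next_experiments(candidates, ensemble, k=2):
    """Rank candidate experiments by how strongly an ensemble of models
    disagrees about their outcome (population variance of predictions)
    and return the k most uncertain ones."""
    scored = []
    for c in candidates:
        preds = [model(c) for model in ensemble]
        scored.append((statistics.pvariance(preds), c))
    scored.sort(key=lambda s: s[0], reverse=True)   # most disagreement first
    return [c for _, c in scored[:k]]

# Hypothetical ensemble: three models that agree near 0 and diverge further out
ensemble = [
    lambda x: x,
    lambda x: x + 0.1 * x**2,
    lambda x: x - 0.1 * x**2,
]
candidates = [0.0, 1.0, 5.0, 2.0]
print(pick_next_experiments(candidates, ensemble))
```

Running the chosen experiments and retraining the models tends to shrink their disagreement where it was largest, which is the loop the research team describes in steering NCI’s data collection.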

It might seem unusual for experts from one of DOE’s three national security laboratories (the others are Sandia and Lawrence Livermore) to advise cancer researchers on how best to address a biological problem. But the weapons labs have long sought ways to model the extraordinary complexity of nuclear weapons systems to ensure the devices continue to perform as a deterrent.

“We have a lot of experience dealing with complex systems and quantifying our confidence in predicting what will happen under different real-world scenarios,” Alexander notes.

Five of the LANL computational scientists involved in the cancer project also are involved in the DOE National Nuclear Security Administration’s Advanced Simulation and Computing (ASC) program, which develops the tools and techniques used in large-scale physics simulations.

The computing requirements for both physics and biology are starting to converge on exascale computing optimized for big data and machine learning.

“This is the most exciting project I’ve ever worked on,” Alexander says. “This is a noble problem.”