For high-performance computing (HPC) systems to reach exascale – a billion billion calculations per second – hardware and software must cooperate, with orchestration by the operating system (OS).

But getting from today’s computing to exascale requires an adaptable OS – maybe more than one. Computer applications “will be composed of different components,” says Ron Brightwell, R&D manager for scalable systems software at Sandia National Laboratories.

“There may be a large simulation consuming lots of resources, and some may integrate visualization or multi-physics.” That is, applications might not use all of an exascale machine’s resources in the same way. Plus, an OS aimed at exascale also must deal with changing hardware. HPC “architecture is always evolving,” Brightwell says, often mixing different kinds of processors and memory components in heterogeneous designs.

As computer scientists consider scaling up hardware and software, there’s no easy answer for when an OS must change. “It depends on the application and what needs to be solved,” Brightwell explains. On top of that variability, he notes, “scaling down is much easier than scaling up.” So rather than trying to grow an OS from a laptop to an exascale platform, Brightwell takes the opposite approach. “We should try to provide an exascale OS and runtime environment on a smaller scale – starting with something that works at a higher scale and then scale down.”

To explore the needs of an OS and conditions to run software for exascale, Brightwell and his colleagues conducted a project called Hobbes, which involved scientists at four national labs – Oak Ridge (ORNL), Lawrence Berkeley, Los Alamos and Sandia – plus seven universities. To perform the research, Brightwell – with Terry Jones, an ORNL computer scientist, and Patrick Bridges, a University of New Mexico associate professor of computer science – earned an ASCR Leadership Computing Challenge allocation of 30 million processor hours on Titan, ORNL’s Cray XK7 supercomputer.

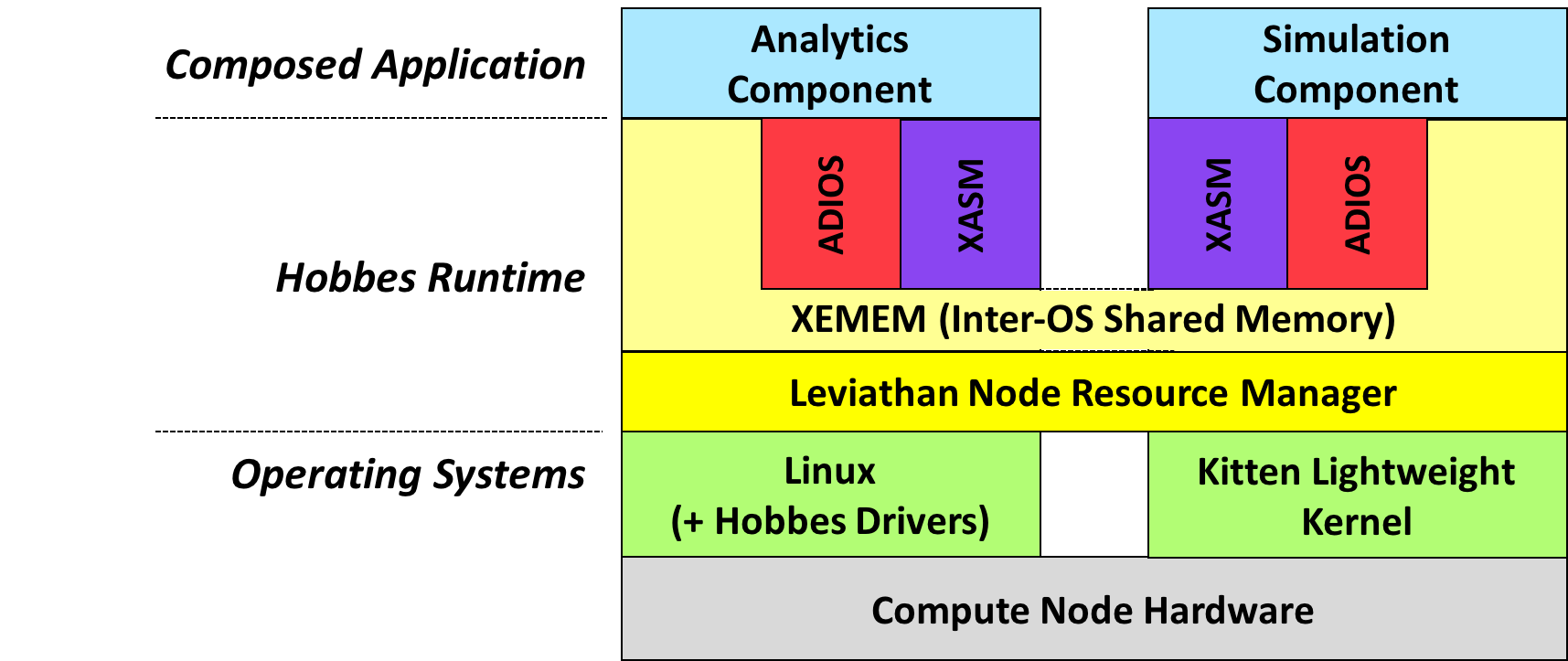

The Hobbes OS supports multiple software stacks working together, as indicated in this diagram of the Hobbes co-kernel software stack. Image courtesy of Ron Brightwell, Sandia National Laboratories.

Brightwell made a point of including the academic community in developing Hobbes. “If we want people in the future to do OS research from an HPC perspective, we need to engage the academic community to prepare the students and give them an idea of what we’re doing,” he explains. “Generally, OS research is focused on commercial things, so it’s a struggle to get a pipeline of students focusing on OS research in HPC systems.”

The Hobbes project involved a variety of components, but for the OS side, Brightwell describes it as trying to understand applications as they become more sophisticated. They may have more than one simulation running in a single OS environment. “We need to be flexible about what the system environment looks like,” he adds, so with Hobbes, the team explored using multiple OSs in applications running at extreme scale.

As an example, Brightwell notes that the Hobbes OS envisions multiple software stacks working together. The OS, he says, “embraces the diversity of the different stacks.” An exascale system might let data analytics run on multiple software stacks, but still provide the efficiency needed in HPC at extreme scales. This requires a computer infrastructure that supports simultaneous use of multiple, different stacks and provides extreme-scale mechanisms, such as reducing data movement.

Part of Hobbes also studied virtualization, which uses a subset of a larger machine to simulate a different computer and operating system. “Virtualization has not been used much at extreme scale,” Brightwell says, “but we wanted to explore it and the flexibility that it could provide.” Results from the Hobbes project indicate that virtualization for extreme scale can provide performance benefits at little cost.

Other HPC researchers besides Brightwell and his colleagues are exploring OS options for extreme-scale computing. For example, Pete Beckman, co-director of the Northwestern-Argonne Institute of Science and Engineering at Argonne National Laboratory, runs the Argo project.

A team of 25 collaborators from Argonne, Lawrence Livermore National Laboratory and Pacific Northwest National Laboratory, plus four universities, created Argo, an OS that starts with a single Linux-based OS and adapts it to extreme scale.

When comparing the Hobbes OS to Argo, Brightwell says, “we think that without getting in that Linux box, we have more freedom in what we do, other than design choices already made in Linux. Both of these OSs are likely trying to get to the same place but using different research vehicles to get there.” One distinction: The Hobbes project uses virtualization to explore the use of multiple OSs working on the same simulation at extreme scale.

As the scale of computation increases, an OS must also support new ways of managing a system’s resources. To explore some of those needs, Thomas Sterling, director of Indiana University’s Center for Research in Extreme Scale Technologies, developed ParalleX, an advanced execution model for computations. Brightwell leads a separate project called XPRESS to support the ParalleX execution model. Rather than computing’s traditional static methods, ParalleX implementations use dynamic adaptive techniques.

More work is always necessary as computation works toward extreme scales. “The important thing in going forward from a runtime and OS perspective is the ability to evaluate technologies that are developing in terms of applications,” Brightwell explains. “For high-end applications to pursue functionality at extreme scales, we need to build that capability.” That’s just what Hobbes and XPRESS – and the ongoing research that follows them – aim to do.