From climate-change predictions to models of the expanding universe, simulations help scientists understand complex physical phenomena. But simulations aren’t easy to deploy. Computational models comprise millions of lines of code and rely on many separate software packages. For the largest codes, configuring and linking these packages can require weeks of full-time effort.

Recently, a Lawrence Livermore National Laboratory (LLNL) team deployed a multiphysics code with 47 libraries – software packages that today’s HPC programs rely on – on Trinity, the Cray XC40 supercomputer being assembled at Los Alamos National Laboratory. A code that would have taken six weeks to deploy on a new machine required just a day and a half during an early-access period on part of Trinity, thanks to a new tool that automates the hardest parts of the process.

This leap in efficiency was achieved using the Spack package manager. Package management tools are used frequently to deploy web applications and desktop software, but they haven’t been widely used to deploy high-performance computing (HPC) applications. Few package managers handle the complexities of an HPC environment, so application developers frequently resort to building by hand. But as HPC systems and software become ever more complex, automation will be critical to keep things running smoothly on future exascale machines, which will be capable of one million trillion calculations per second and are expected to have an even more complicated software ecosystem.

“Spack is like an app store for HPC,” says Todd Gamblin, its creator and lead developer. “It’s a bit more complicated than that, but it simplifies life for users in a similar way. Spack allows users to easily find the packages they want, it automates the installation process, and it allows contributors to easily share their own build recipes with others.” Gamblin is a computer scientist in LLNL’s Center for Applied Scientific Computing and works with the Development Environment Group at Livermore Computing. Spack was developed with support from LLNL’s Advanced Simulation and Computing program.

Spack’s success relies on contributions from its burgeoning open-source community. To date, 71 scientists at more than 20 organizations are helping expand Spack’s growing repository of software packages, which number more than 500 so far. Besides LLNL, participating organizations include seven national laboratories – Argonne, Brookhaven, Fermilab, Lawrence Berkeley (through the National Energy Research Scientific Computing Center), Los Alamos, Oak Ridge and Sandia – plus NASA, CERN and many other institutions worldwide.

Spack is more than a repository for sharing applications. In the iPhone and Android app stores, users download pre-built programs that work out of the box. HPC applications, by contrast, often must be built directly on the supercomputer, which lets programmers customize them for maximum speed. “You get better performance when you can optimize for both the host operating system and the specific machine you’re running on,” Gamblin says. Spack automates the process of fine-tuning an application and its libraries over many iterations, allowing users to quickly build many custom versions of codes and rapidly converge on a fast one.

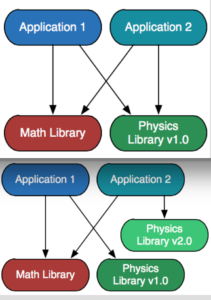

Applications can share libraries when the applications are compatible with the same versions of their libraries (top). But if one application is updated to require a newer library version and another is not, the two can no longer share that library. Spack (bottom) allows multiple versions to coexist on the same system; here, for example, it simply builds a new version of the physics library and installs it alongside the old one. Schematic courtesy of Lawrence Livermore National Laboratory.

Each new version of a large code may require rebuilding 70 or more libraries, also called dependencies. Traditional package managers typically allow installation of only one version of a package, to be shared by all installed software. This can be overly restrictive for HPC, where codes are constantly changed but must continue to work together. Picture two applications that share two dependencies: one for math and another for physics. They can share because the applications are compatible with the same versions of their dependencies. Suppose that application 2 is updated, and now requires version 2.0 of the physics library, but application 1 still only works with version 1.0. In a typical package manager, this would cause a conflict, because the two versions of the physics package cannot be installed at once. Spack allows multiple versions to coexist on the same system and simply builds a new version of the physics library and installs it alongside the old one.
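One way to picture side-by-side installation is to give every package version its own directory, so that nothing is overwritten. The Python sketch below illustrates only that idea. The `/opt/store` root, the directory naming and the function names are assumptions made for this example; they are not taken from Spack’s code.

```python
# Hypothetical requirements, following the two-application example in the text.
requirements = {
    "app1": {"math": "1.0", "physics": "1.0"},
    "app2": {"math": "1.0", "physics": "2.0"},
}


def install_prefix(root, name, version):
    """Return a separate directory for each (name, version) pair (assumed layout)."""
    return f"{root}/{name}-{version}"


def plan_installs(requirements, root="/opt/store"):
    """Work out which prefixes must exist and which prefix each application links to."""
    prefixes = set()
    links = {}
    for app, deps in requirements.items():
        links[app] = {}
        for name, version in deps.items():
            prefix = install_prefix(root, name, version)
            prefixes.add(prefix)          # identical versions collapse to one build
            links[app][name] = prefix     # each app points at the version it needs
    return prefixes, links


prefixes, links = plan_installs(requirements)
for prefix in sorted(prefixes):
    print(prefix)
```

Under these assumptions the plan contains three prefixes: one shared math library and two physics libraries. Application 1 keeps linking to physics 1.0 while application 2 links to physics 2.0.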

This four-package example is simple, Gamblin notes, but imagine a similar scenario with 70 packages, each with conflicting requirements. Most application users are concerned with generating scientific results, not with configuring software. With Spack, they needn’t have detailed knowledge of all packages and their versions, let alone where to find the optimal version of each, to begin the build. Instead, Spack handles the details behind the scenes and ensures that dependencies are built and linked with their proper relationships. It’s like selecting a CD player and finding it’s already connected to a compatible amplifier, speakers and headphones.

Gamblin and his colleagues call Spack’s dependency configuration process concretization – filling in “the details to make an abstract specification concrete,” Gamblin explains. “Most people, when they say they want to build something, they have a very abstract idea of what they want to build. The main complexity of building software is all the details that arise when you try to hook different packages together.”
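A toy version of that idea can be sketched in a few lines of Python. The package table, the rule of defaulting to the newest known version and the `concretize` function are all hypothetical simplifications chosen for illustration. Spack’s real concretizer weighs many more details, and this is not its algorithm.

```python
# Hypothetical package metadata: known versions (oldest first) and dependencies.
PACKAGES = {
    "app": {"versions": ["1.0", "2.0"], "depends_on": ["math", "physics"]},
    "math": {"versions": ["1.0"], "depends_on": []},
    "physics": {"versions": ["1.0", "2.0"], "depends_on": ["math"]},
}


def concretize(name, constraints=None, resolved=None):
    """Fill in every unspecified version, walking the dependency graph."""
    constraints = constraints or {}
    resolved = {} if resolved is None else resolved
    if name in resolved:                      # already decided earlier in the walk
        return resolved
    info = PACKAGES[name]
    # Assumed policy: honor the user's pin if given, else take the newest version.
    version = constraints.get(name, info["versions"][-1])
    if version not in info["versions"]:
        raise ValueError(f"{name} has no version {version}")
    resolved[name] = version
    for dep in info["depends_on"]:
        concretize(dep, constraints, resolved)
    return resolved


# Abstract request: "build app, but with physics 1.0"; everything else is left open.
concrete = concretize("app", {"physics": "1.0"})
print(concrete)
```

Here the user states only one preference, and the sketch supplies a version for every other package in the graph.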

During concretization, the package manager runs many checks, flagging inconsistencies among packages, such as conflicting versions. Spack also compares the user’s expectations against the properties of the actual codes and their versions, then calls out any mismatches and helps resolve them. These automated checks save untold hours of frustration by catching cases in which a package wouldn’t have run properly.
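A version-conflict check of this kind might look roughly like the Python sketch below. It assumes, for illustration, that each package in a single build states the exact dependency versions it needs. The data, the package names and the `find_conflicts` helper are hypothetical and are not part of Spack.

```python
from collections import defaultdict


def find_conflicts(requirements):
    """Report dependencies that different packages demand at different versions."""
    demanded = defaultdict(dict)  # dependency -> {version: [packages asking for it]}
    for requester, deps in requirements.items():
        for dep, version in deps.items():
            demanded[dep].setdefault(version, []).append(requester)
    # A dependency is in conflict when more than one version is demanded.
    return {dep: dict(by_version)
            for dep, by_version in demanded.items()
            if len(by_version) > 1}


# Hypothetical single build in which two components disagree about physics.
requirements = {
    "app": {"math": "1.0", "physics": "2.0"},
    "solver": {"math": "1.0", "physics": "1.0"},
}

conflicts = find_conflicts(requirements)
for dep, by_version in conflicts.items():
    for version, requesters in by_version.items():
        print(f"{dep}@{version} required by {', '.join(requesters)}")
```

Reporting which package asked for which version gives the user a starting point for fixing the mismatch before any time is spent compiling.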

The complexity of building modern HPC software leads some scientists to avoid using libraries in their codes. They opt instead to write complex algorithms themselves, Gamblin says. This is time-consuming and can lead to sub-optimal performance or incorrect implementations. Package management simplifies the process of sharing code, reducing redundant effort and increasing software reuse.

Most important, Spack enables users to focus on the science they set out to do. “Users really want to be able to install an application and get it working quickly,” Gamblin says. “They’re trying to do science, and Spack frees them from the meta-problem of building and configuring the code.”