Investigators at Brookhaven National Laboratory’s Computational Science Initiative (CSI) haven’t yet been called on to solve crimes, like their CSI television counterparts, but they’re cracking even more substantive data-driven puzzles.

Already an established group, CSI recently announced a significant expansion that will greatly increase its research and development capabilities. Its ambitious vision is to shift the handling of data collected on large scientific instruments from labor-intensive retrospective analysis to real-time, on-the-fly interpretation. The idea is to allow nimble fine-tuning of the information gathered while experiments are still running. But there are several major problems to solve first, and Brookhaven is amassing recruits and partners to take on the challenges.

CSI Director Kerstin Kleese van Dam, a computing industry leader in data infrastructure and management, is assembling teams to tackle three key areas: novel data structures and scalable algorithms designed for computer architectures such as those based on graphics processing units (GPUs); seamless data movement between instruments and computers; and computing models that bring scientists and engineers into the design and analysis process.

The expanded CSI group receives support from the state of New York, the Department of Energy and the lab itself. Academic partners from Columbia, Cornell, New York, Stony Brook and Yale universities will contribute, along with NVIDIA, Intel Corp. and IBM Research.

CSI is focusing first on large multi-user science facilities, such as Brookhaven’s Relativistic Heavy Ion Collider, National Synchrotron Light Source II (NSLS-II) and Center for Functional Nanomaterials (CFN); DOE’s Atmospheric Radiation Measurement Program; and the ATLAS experiment at Europe’s Large Hadron Collider. CSI aims to help scientists identify critical information in a data stream and enable them to steer their experiments to new discoveries.

Although coordinated through Brookhaven, the CSI group also seeks input from user facilities in DOE and beyond. To that end, Brookhaven began a hackathon series in late 2015. The first weeklong event gathered data scientists from the five DOE X-ray light and neutron-scattering sources. Such events can help foster collaborations that will be critical to creating open data structures that work across DOE’s approximately 240 shared scientific instruments, Kleese van Dam says.

Over the course of a week, scientists addressed crosscutting data challenges at their respective facilities and worked toward real-time streaming data analysis.

One project used machine-learning methods to cluster and categorize data generated at NSLS-II and combined them with a streaming visualization tool that highlighted decision-critical insights for the scientists, Kleese van Dam says. “That’s important because the volume of information from large scientific instruments can quickly become unwieldy.” For instance, she notes, there’s an instrument at CFN that produces 400 images per second. At that rate, data analysis and extraction are critical, as is working with users to identify the most scientifically interesting information.
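To make the idea of clustering a data stream concrete, here is a minimal sketch in Python. It is not the hackathon project's code, which the article does not describe in detail. It assumes each image has already been reduced to a short feature vector, and it uses sequential (online) k-means as a stand-in technique: every incoming point nudges its nearest cluster center and is then discarded, so memory use stays constant however long the stream runs. The names `StreamingKMeans` and `simulate_stream` are hypothetical.

```python
import math
import random


class StreamingKMeans:
    """Sequential (online) k-means: each point updates one centroid, then is dropped."""

    def __init__(self, k):
        self.k = k
        self.centroids = []  # one coordinate list per cluster
        self.counts = []     # number of points absorbed by each cluster

    def update(self, point):
        """Assign point to a cluster, update that cluster, and return its index."""
        # Seeding: the first k points simply become the initial centroids.
        if len(self.centroids) < self.k:
            self.centroids.append(list(point))
            self.counts.append(1)
            return len(self.centroids) - 1

        # Find the nearest centroid by Euclidean distance.
        i = min(range(self.k), key=lambda j: math.dist(self.centroids[j], point))

        # Running-mean update: move the centroid 1/n of the way toward the point.
        self.counts[i] += 1
        rate = 1.0 / self.counts[i]
        self.centroids[i] = [c + rate * (x - c) for c, x in zip(self.centroids[i], point)]
        return i


def simulate_stream(n, seed=0):
    """Hypothetical stand-in for instrument output: two alternating 2-D sources."""
    rng = random.Random(seed)
    for t in range(n):
        cx, cy = (0.0, 0.0) if t % 2 == 0 else (10.0, 10.0)
        yield (cx + rng.gauss(0, 0.5), cy + rng.gauss(0, 0.5))


model = StreamingKMeans(k=2)
for features in simulate_stream(400):
    label = model.update(features)  # label is available immediately, per point

print(model.counts)
print(model.centroids)
```

A production system would need far more than this, including better seeding and a way to handle clusters that drift or appear mid-experiment. The point of the sketch is only that a label is available the moment each measurement arrives, which is what allows a visualization to keep pace with the instrument.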

“It’s easy to find features in the data, but what’s of interest to the scientist may be a completely different matter,” Kleese van Dam says. Advanced manufacturing and materials-by-design, for instance, require engineers “to influence the process to control the outcome.”

Such time-sensitive process control will require devising experiment-steering algorithms for vast amounts of data. “Most of the algorithms that we have today just don’t scale to large enough data volumes,” Kleese van Dam says. “I get terabytes of data per minute. There is nothing out there at this time that can deal with this data flow.”

To address scalability, CSI created the Computer Science and Mathematics Group. It’s led by Barbara Chapman, a joint Brookhaven and Stony Brook appointee. Chapman was a pioneer in shared-memory parallel programming and is one of the principal developers of OpenMP, the industry-standard application programming interface for shared-memory multiprocessing. Her team will focus on the computer science and mathematical underpinnings for analyzing data from scientific instruments based on scientists’ hypotheses.

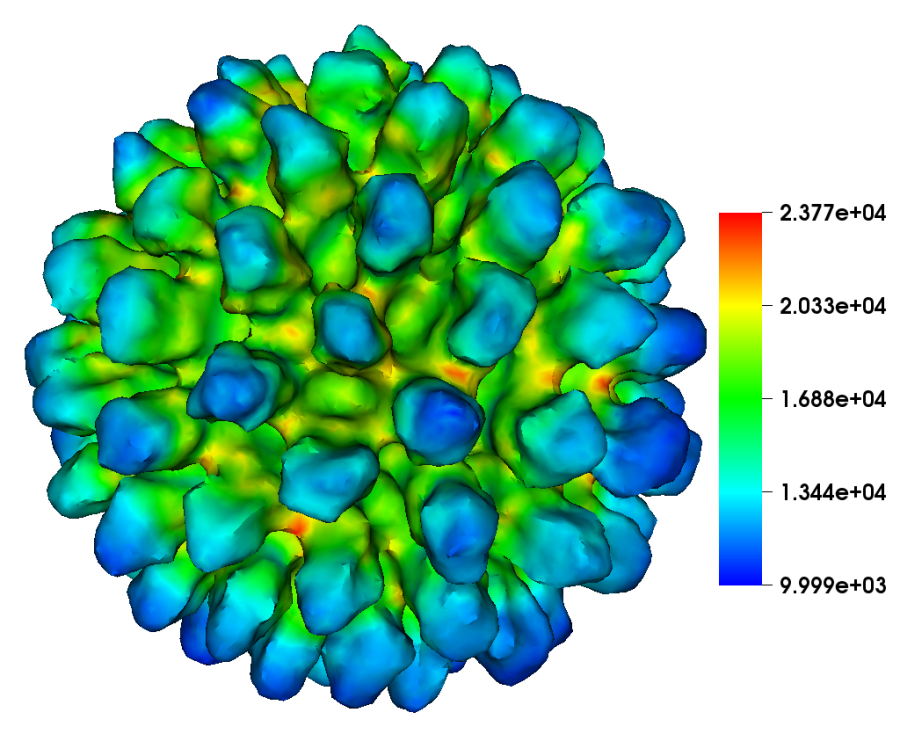

Brookhaven physicist Simon Billinge has demonstrated how streaming analysis might work for the most advanced, complex experiments – for example, studying battery life or industrial-catalyst potency under working conditions. Capturing the physical, chemical and biological processes across different experiments requires simulations that take into account the scientific theories behind the observations. Billinge has developed a novel method to combine data and theory in a “global optimizer.” Using nanomaterial design as a case study, the method combines experimental data from several instruments and extracts only the key information about a material’s promise and limits. Those results are further refined through numerical modeling.

Billinge, who has a joint appointment at Columbia’s School of Engineering and Applied Science, also is on a team looking to the future of materials science codes: the Center for Computational Design of Functional Strongly Correlated Materials and Theoretical Spectroscopy. The group, a joint project of Brookhaven and Rutgers University, has received $12 million over four years from the DOE Basic Energy Sciences program. Led by BNL’s Gabriel Kotliar (who has a joint appointment at Rutgers), the center works closely with materials scientists to develop codes that incorporate many of the advanced functions current models lack. Billinge, for example, hopes to improve the speed and accuracy of materials models so they can better inform analysis and interpretation of complex experiments.

CSI’s Computational Science Laboratory (CSL) helps the center and other teams maximize benefit from new computing architectures. For instance, one of the most computationally intensive models today uses quantum chromodynamics (QCD) theory to study how subatomic particles such as quarks and gluons interact. Physicists use QCD to predict particle behavior and then compare those predictions with experiments. The calculations require large-scale parallel computing and generate enormous volumes of data. As computer architectures evolve, CSL researchers versed in both QCD and computational science act as a bridge to help make decisions about how to change algorithms while maintaining scientific accuracy, Kleese van Dam says. Similarly, CSL is helping move Quantum ESPRESSO, a suite of open-source materials science codes, from CPU-based architectures to GPU-based architectures.

Kleese van Dam is leading an effort that underpins all of the above: updating data storage and archiving and exploring how to make archived data more useful.

“What we are looking toward is how we can bring archived data back into the scientific discovery process in an automated way so it becomes natural to use those data,” she says. With easily accessible archives, scientists could ask and answer questions that are impossible under current data structures, she adds. For example, if an algorithm flags a phenomenon in a set of experiments, accessing data from previous experiments would let users ask how common that phenomenon is and whether it has ever been seen before.
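The kind of question she describes can be sketched in a few lines of Python, assuming every archived run carries searchable metadata labels for the phenomena detected in it. This illustrates the idea only and is not Brookhaven's data service. The record type `ArchivedRun`, the function `phenomenon_history` and the labels in the toy archive are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ArchivedRun:
    """Hypothetical archive record: one experiment run plus its metadata labels."""
    run_id: str
    collected: date
    phenomena: frozenset  # labels attached when the run was archived


def phenomenon_history(archive, label):
    """Report how often a flagged phenomenon appears in the archive and when it first did."""
    hits = sorted(
        (run for run in archive if label in run.phenomena),
        key=lambda run: run.collected,
    )
    return {
        "occurrences": len(hits),
        "fraction": len(hits) / len(archive) if archive else 0.0,
        "first_seen": hits[0].collected if hits else None,
    }


# Toy archive with made-up labels, for illustration only.
archive = [
    ArchivedRun("run-001", date(2014, 3, 1), frozenset({"peak_splitting"})),
    ArchivedRun("run-002", date(2014, 9, 12), frozenset()),
    ArchivedRun("run-003", date(2015, 6, 30), frozenset({"peak_splitting", "phase_change"})),
    ArchivedRun("run-004", date(2015, 11, 5), frozenset({"phase_change"})),
]

print(phenomenon_history(archive, "peak_splitting"))
```

The lookup itself is trivial. What makes it possible is the metadata: without consistent labels attached at archive time, there is nothing to search on.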

“Making data accessible is important,” she says. “There is no point in collecting data if there is not sufficient good quality metadata” with them to make them searchable. But it’s just as important to “make the knowledge inherent in these data sets easily accessible for future reuse.” The new data services Brookhaven is creating will help scientists do both.

CSI is seeking “experts who can contribute to the complete ecosystem required to make data-driven discovery for science, national security and industry a normality rather than the exception,” she adds.

Cracking that code would open up technological possibilities any made-for-TV CSI could only dream about.