Few would be surprised today that power – the rate of energy consumption – looms as a key constraint in tomorrow’s high-performance computing (HPC) environment. But even as recently as 15 years ago “people weren’t that concerned about power, except in specific places,” says Hank Hoffmann, assistant professor of computer science at the University of Chicago.

That concern developed as HPC experts began to grasp how much power the fastest computers would require. For exascale computing – performing a billion billion computations a second – the commonly cited round number is about 20 megawatts (MW), a figure that creates two key stumbling blocks.

Problem No. 1 is simply providing that much power. As Hoffmann says, “That’s quite a load on the grid.” If a facility wanted to generate that power with, for instance, Honda’s biggest portable generators, it would take 2,000 of them. If designers aren’t careful, they could “build computers that consume more power than we could deliver to the facility,” Hoffmann explains. The question is: How do we handle that problem?

The simplest approach splits that power evenly among a computer’s components: divide 20 million watts by the number of components, then design each one to use that amount of power or less. This approach, though, “leaves lots of potential on the table,” Hoffmann says. “Some applications might need more of one resource than another.” So instead, the system should intelligently distribute power to optimize the applications the exascale computer is running. “The trick is that this is a really difficult problem.”
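To make the contrast concrete, here is a minimal Python sketch of the two strategies. It is not CALORIE: the subsystem names, their power demands, and the helpers `even_split`, `demand_split`, and `unmet_demand` are all hypothetical, and the sketch assumes each component's demand is known in advance, which a real runtime would have to measure or predict.

```python
"""Hypothetical sketch: dividing a fixed power cap among computer subsystems.

Not CALORIE. Subsystem names and demand figures are invented for illustration.
"""


def even_split(cap_watts, components):
    """Static approach: every component gets an identical share of the cap."""
    share = cap_watts / len(components)
    return {name: share for name in components}


def demand_split(cap_watts, demands):
    """Demand-aware approach: grant each component what it asks for, scaling
    all grants down proportionally if total demand exceeds the cap."""
    total = sum(demands.values())
    if total == 0:
        return {name: 0.0 for name in demands}
    scale = min(1.0, cap_watts / total)
    return {name: need * scale for name, need in demands.items()}


def unmet_demand(allocation, demands):
    """Total watts requested but not granted, summed over components."""
    return sum(max(0.0, demands[name] - allocation[name]) for name in demands)


if __name__ == "__main__":
    CAP = 20e6  # 20 MW facility budget
    # Invented demands for a compute-heavy phase of some application
    demands = {"cpu": 12e6, "memory": 5e6, "network": 2e6, "storage": 1e6}

    for label, alloc in [
        ("even", even_split(CAP, list(demands))),
        ("demand-aware", demand_split(CAP, demands)),
    ]:
        shortfall = unmet_demand(alloc, demands) / 1e6
        print(f"{label:>12}: unmet demand = {shortfall:.1f} MW")
```

With these made-up numbers, the even split gives every subsystem 5 MW, so the CPUs fall 7 MW short while 7 MW sits unused in the network and storage shares; the demand-aware split covers every request within the same 20 MW cap. Real power management is much harder, because it must optimize application performance rather than simply match watts to requests.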

Hoffmann received a Department of Energy Early Career Research Program award this year to explore what he calls CALORIE: a constraint language and optimizing runtime for exascale power management. In essence, Hoffmann envisions a technique that lets scientists focus on the application while CALORIE takes care of the power.

Under Hoffmann’s approach, scientists provide high-level information about what their applications do. Using an elementary programming language, a scientist would describe the simulation, and Hoffmann’s technique would extract information from that description and use it to configure the supercomputer.

This concept of adjusting a computer system on the fly connects to Hoffmann’s concept of self-aware computing, which Scientific American named one of 10 “World Changing Ideas” in 2011. “A computer runs on a model, and self-aware computing lets it manipulate that model,” explains Hoffmann, who developed the idea as a graduate student. “It can change its behavior as it is running.”

Likewise, CALORIE adjusts a supercomputer’s power management on the fly. To make this technique work across many applications, Hoffmann uses control theory, tracking how resource use corresponds to power consumption and letting the operating system make changes as the applications run.
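A control-theoretic runtime can be pictured as a feedback loop: measure power, compare it with the target, nudge a resource setting, and repeat. The Python sketch below is a generic integral controller acting on a made-up linear power model, not Hoffmann’s published controller. The constants `IDLE_W` and `SLOPE_W`, the 0-to-1 “knob” (think of a clock-frequency or active-core setting), the gain, and the function names are all assumptions for illustration.

```python
"""Hypothetical sketch of feedback (integral) control of power.

Assumed toy model: a node draws IDLE_W watts at the lowest setting and
IDLE_W + SLOPE_W at the highest; power rises linearly with a 0-to-1 knob.
"""

IDLE_W = 100.0   # assumed idle power per node, watts
SLOPE_W = 200.0  # assumed extra watts at the full knob setting


def measured_power(knob):
    """Stand-in for a real power-sensor reading."""
    return IDLE_W + SLOPE_W * knob


def track_power_cap(target_w, steps, gain=0.5, model_slope=SLOPE_W):
    """Integral controller: each step moves the knob in proportion to the
    power error, scaled by the controller's (possibly wrong) slope estimate."""
    knob = 0.0
    readings = []
    for _ in range(steps):
        power = measured_power(knob)
        readings.append(power)
        error = target_w - power
        knob += gain * error / model_slope
        knob = min(1.0, max(0.0, knob))  # the knob has physical limits
    return readings


if __name__ == "__main__":
    readings = track_power_cap(target_w=220.0, steps=20)
    print([round(p, 1) for p in readings[:5]], "...", round(readings[-1], 3))
```

If the controller’s slope estimate is off (try `model_slope=250.0`), the loop still settles on the target, just more slowly; tolerating model error in this way is a general property of feedback control that makes it attractive for runtime power management. If the target is out of reach, the knob simply saturates at its limit.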

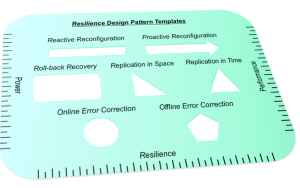

Resilience design patterns will provide templates for a wide range of advanced computing techniques. Image courtesy of Christian Engelmann.

Problem No. 1 contributes to problem No. 2: resilience. To limit overall power consumption, computer designers might use components that operate at lower voltages, and those parts might be less reliable. In addition, making components smaller can lead to more manufacturing defects or shorter life. On top of that, exascale software will be more complex than today’s codes. As Christian Engelmann, system software team task lead in the computer science research group at Oak Ridge National Laboratory, explains, “If you combine all of this, you must deal with more component failures and more software failures from the complexity.”

The computer must keep running despite these failures. That’s the goal of Engelmann’s resilience design patterns project, which also received a DOE Early Career Research Program award this year. Software engineers often use design patterns. “It’s something like a template,” Engelmann says, comparing it to a building’s architecture: “Every time an architect designs a new house, there will be a kitchen and bathroom and so on.” Likewise, code that solves similar types of computations contains similar components. “We design a template to deal with the problem,” Engelmann says. “My idea is to develop different design patterns for resilience in high-performance computing.”

For example, Engelmann and his colleagues are developing a resilient Monte Carlo method, in which many simulations with random variables approximate the likelihood of various outcomes. Engelmann’s technique keeps working after a failure, without restarting the simulation from an earlier point.
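Monte Carlo methods lend themselves to this kind of resilience because their samples are independent: as long as failures strike at random, losing some samples reduces precision but does not bias the answer. The Python sketch below illustrates that general idea with the textbook estimate of π, treating a failure as a lost batch of samples. It is an illustration under stated assumptions, not Engelmann’s method; `run_batch`, `resilient_pi`, and the simulated failure rate are hypothetical.

```python
"""Hypothetical sketch: a Monte Carlo estimate of pi that survives lost work.

Failures are simulated by randomly discarding whole batches. Because batches
are independent, the survivors still give a valid (if less precise) estimate,
so nothing has to be restarted from an earlier point.
"""
import math
import random


def run_batch(seed, n):
    """Count random points in the unit square that land inside the quarter circle."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def resilient_pi(num_batches, batch_size, failure_rate, seed=0):
    """Aggregate only the batches that complete; lost batches are skipped."""
    failure_rng = random.Random(seed)
    hits = completed = 0
    for b in range(num_batches):
        if failure_rng.random() < failure_rate:
            continue  # simulated node failure: this batch's result is lost
        hits += run_batch(f"batch-{seed}-{b}", batch_size)
        completed += 1
    if completed == 0:
        raise RuntimeError("every batch failed; no estimate possible")
    return 4.0 * hits / (completed * batch_size), completed


if __name__ == "__main__":
    estimate, completed = resilient_pi(100, 2_000, failure_rate=0.3)
    print(f"pi ~ {estimate:.4f} from {completed}/100 batches "
          f"(error {abs(estimate - math.pi):.4f})")
```

A real system would also need to detect failures and might reissue lost batches to recover precision; the sketch shows only that the computation can carry on without rolling back.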

Although Engelmann’s overall method will require multiple templates, he expects to need only a limited number. “There will be a variety of templates for specific mechanisms or techniques, but a number of techniques will fit in the same template.”

It’s taken some time to develop the idea, Engelmann says. Design patterns became popular in object-oriented software in the 1990s, and software engineers later started applying the concept to parallel computing. Then “I got the idea that we might extend that to resilience design patterns,” Engelmann says.

He acknowledges that his approach is relatively new and even a little risky. Nonetheless, he expects that his experience developing individual mechanisms to solve resilience problems can mature into developing design patterns. “I’ll build on top of what I’ve built in the past, but use the design-pattern concept.”

For Hoffmann and Engelmann, past experience promises to inform their projects on power and resilience for exascale computing. As Hoffmann predicts, “We are going to build computer systems that are so complicated that very few people can optimize them, so we need to make the machines intelligent to handle some of this, or we’ll have machines that are not useful.”