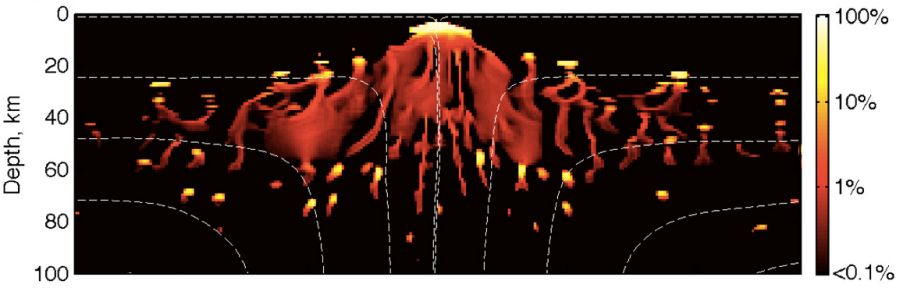

On September 4, 2010, enormous slabs of rock under New Zealand grated against each other, releasing pressures built up by one tectonic plate plowing into another. The resulting earthquake sent tremors throughout the nation’s South Island and the southern part of the North Island. Forty kilometers east of the epicenter, the city of Christchurch shook hard enough to topple chimneys and crumble masonry walls.

At an American Geophysical Union meeting four years later, a computer simulation revealed the quake’s subterranean dynamics.

“It was a very complex rupture with multiple faults,” says Brad Aagaard, a scientist at the United States Geological Survey (USGS) in Menlo Park, California, and leader of the simulation team. “When we develop our seismic hazard maps, we need to understand what kinds of earthquakes are possible. In what cases can faults link together? You have to look at everything in three dimensions and include the dynamic effects.”

The computer model was created using PyLith, a code written and distributed by a team that included Aagaard. PyLith is just one of dozens of computer codes that have vaulted from concept to application using software from Argonne National Laboratory’s PETSc (Portable Extensible Toolkit for Scientific Computation), an open-source library of ready-to-use software for high-performance computing (HPC) systems.


In March, the team that developed PETSc will receive the SIAM/ACM Prize in Computational Science and Engineering from the Society for Industrial and Applied Mathematics (SIAM) and the Association for Computing Machinery (ACM) at a SIAM conference in Salt Lake City. It is only the latest honor in a string that includes an R&D 100 Award from R&D Magazine. Primary funding for PETSc has come from the Department of Energy’s Office of Science through its Advanced Scientific Computing Research program. The library has attracted users from a range of disciplines.

“Our primary long-term goal is to support the development of sophisticated simulation applications by scientists and engineers in a wide variety of fields,” says Argonne’s Barry Smith, lead PETSc developer. “PETSc users have demonstrated that sophisticated scalable simulations do not have to be written from scratch but rather can be customized from carefully designed, open-source, general-purpose libraries rapidly, at low cost, and with high quality.”

PETSc offers software for solving equations that mathematically capture change over time and for relating those equations to one another. The integration of these partial differential equations (PDEs) through PETSc has enabled simulation of a variety of scientific phenomena, from earthquakes to acoustics to water flow.

The software is designed specifically for extreme-scale computing, incorporating ways to decompose problems into pieces that multiple processors can work on simultaneously. “Extreme-scale” refers to the largest machines available for science, each one roughly equivalent to millions of laptops working concurrently.
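To make the idea of decomposing a problem into pieces concrete, here is a minimal sketch in plain Python. It is an illustration only, not PETSc code or PETSc's API: it uses the classic alternating Schwarz method, one of several domain decomposition techniques, on a toy one-dimensional problem (-u'' = 1 with u = 0 at both ends). The function names, grid size and overlap width are all assumptions chosen for the example.

```python
def solve_tridiagonal(n, h, left, right, f=1.0):
    """Solve -u'' = f on n interior points (spacing h) with fixed end values.

    Uses the Thomas algorithm on the standard second-difference stencil.
    """
    rhs = [f * h * h] * n
    rhs[0] += left      # known value just outside the left end
    rhs[-1] += right    # known value just outside the right end
    diag = [2.0] * n
    for i in range(1, n):                 # forward elimination
        w = -1.0 / diag[i - 1]
        diag[i] += w
        rhs[i] -= w * rhs[i - 1]
    u = [0.0] * n
    u[-1] = rhs[-1] / diag[-1]
    for i in range(n - 2, -1, -1):        # back substitution
        u[i] = (rhs[i] + u[i + 1]) / diag[i]
    return u


def schwarz(n=39, overlap=4, sweeps=50):
    """Alternate between two overlapping subdomains until they agree."""
    h = 1.0 / (n + 1)
    u = [0.0] * (n + 2)                   # grid values, including both ends
    mid = (n + 1) // 2
    hi1 = mid + overlap                   # last interior node of the left piece
    lo2 = mid - overlap                   # first interior node of the right piece
    for _ in range(sweeps):
        # Left piece: nodes 1..hi1, using the current value at node hi1 + 1.
        u[1:hi1 + 1] = solve_tridiagonal(hi1, h, u[0], u[hi1 + 1])
        # Right piece: nodes lo2..n, using the updated value at node lo2 - 1.
        u[lo2:n + 1] = solve_tridiagonal(n - lo2 + 1, h, u[lo2 - 1], u[n + 1])
    return u, h


u, h = schwarz()
exact = [(i * h) * (1.0 - i * h) / 2.0 for i in range(len(u))]
error = max(abs(a - b) for a, b in zip(u, exact))
```

Each sweep solves two small problems instead of one large one, passing information only through the overlap region. This sketch visits the pieces one after the other; in the additive variant of the method the subdomain solves are independent of each other, which is what lets separate processors work on them at the same time.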

The software library began in 1991 as a collection of codes for domain decomposition, the art of breaking large, continuous problems into pieces for individual solution. The goal was to organize software according to the problems the codes could solve instead of the nuts and bolts of how they had been written.

That effort, initiated by Smith and William Gropp (formerly at Argonne, now at the University of Illinois at Urbana-Champaign), grew quickly. Today it’s a comprehensive and expanding library of more than 50 distinct equation solvers and integrators that programmers, working in standard computational-science languages, can compose into thousands of combinations. The team grew rapidly as well, and more than 50 outside contributors have also provided software to the library.
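How a modest number of building blocks multiplies into many combinations can be sketched in a few lines of plain Python. The example below is an assumption-laden toy, not PETSc's interface: it pairs two hypothetical iterative solvers with two hypothetical preconditioners, so that any solver can be combined with any preconditioner by name. The registry names (`SOLVERS`, `PRECONDITIONERS`) and the small test matrix are invented for illustration.

```python
def matvec(A, x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def identity_preconditioner(A):
    return lambda r: list(r)              # leaves the residual unchanged


def jacobi_preconditioner(A):
    inv_diag = [1.0 / A[i][i] for i in range(len(A))]
    return lambda r: [d * ri for d, ri in zip(inv_diag, r)]


def steepest_descent(A, b, apply_pc, tol=1e-10, max_it=10000):
    x = [0.0] * len(b)
    for it in range(max_it):
        r = [bi - ai for bi, ai in zip(b, matvec(A, x))]
        if dot(r, r) ** 0.5 < tol:
            return x, it
        z = apply_pc(r)                   # preconditioned search direction
        alpha = dot(r, z) / dot(z, matvec(A, z))
        x = [xi + alpha * zi for xi, zi in zip(x, z)]
    return x, max_it


def conjugate_gradient(A, b, apply_pc, tol=1e-10, max_it=10000):
    x = [0.0] * len(b)
    r = list(b)
    z = apply_pc(r)
    p = list(z)
    rz = dot(r, z)
    for it in range(max_it):
        if dot(r, r) ** 0.5 < tol:
            return x, it
        Ap = matvec(A, p)
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        z = apply_pc(r)
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x, max_it


# Hypothetical registries: every solver works with every preconditioner.
SOLVERS = {"steepest_descent": steepest_descent, "cg": conjugate_gradient}
PRECONDITIONERS = {"none": identity_preconditioner, "jacobi": jacobi_preconditioner}


def solve(A, b, solver="cg", preconditioner="jacobi"):
    apply_pc = PRECONDITIONERS[preconditioner](A)
    return SOLVERS[solver](A, b, apply_pc)


# Small symmetric positive-definite test system (made up for this sketch).
n = 8
A = [[(2.0 + i) if i == j else (-1.0 if abs(i - j) == 1 else 0.0)
      for j in range(n)] for i in range(n)]
b = [1.0] * n
results = {(s, p): solve(A, b, s, p) for s in SOLVERS for p in PRECONDITIONERS}
```

Two solvers and two preconditioners already give four combinations, and each new component works with all the existing ones. Because every solver depends only on the shared `apply_pc` callable, adding a component never requires touching the others, which is the kind of design that lets a library of dozens of pieces yield thousands of pairings.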

The team’s goal of supporting others as they develop simulations is especially important now. Scientists in virtually every discipline are scrambling to create software and programming models that will work on rapidly evolving HPC hardware to simulate increasingly complex phenomena.

“Simulations are no longer typically just a single physics or model but rather the interactions among multiple physics or models in a complicated environment,” says Argonne’s Lois Curfman McInnes, a member of the PETSc core development team that also includes Smith, Gropp and Argonne researchers Matthew Knepley, Satish Balay, Jed Brown and Hong Zhang. “For example, heart simulation involves blood flow, muscle, electrical nerve conduction that drives the muscle contractions, etc. Moreover, simulations are not run just for their own sake. They are run in order to enable decision-making, which often requires running many related simulations to converge to optimal designs.”

“PETSc permits application scientists to focus on their problems without writing low-level parallel code,” says Knepley, who works out of the Computation Institute, a joint initiative between Argonne and the University of Chicago. “Numerous PETSc users have commented that they have saved literally years of development time because they do not need to write low-level message-passing code, but rather have been able to work at higher levels of algorithmic abstraction.”

The New Zealand earthquake simulation illustrates PETSc’s success and promise. The PyLith code, developed through the National Science Foundation’s Computational Infrastructure for Geodynamics project, incorporates PETSc’s solvers for nonlinear systems of equations and uses its sophisticated algorithmic infrastructure designed for parallel computing. Like Aagaard, Knepley was a member of the PyLith code-writing team, along with Charles Williams of New Zealand’s GNS Science (Institute of Geological and Nuclear Sciences Limited).

“PETSc gives us a suite of numerical methods, and we can choose which ones will work best,” Aagaard says. “We don’t have to worry about developing specific numerical methods. It has allowed us to focus on the physics.”