Building a computer that solves scientific problems a thousand times faster than the best ones available today is harder than just making it bigger.

Nearly every core component will have to change to create a practical exascale computer – one capable of a million trillion, or 10¹⁸, scientific calculations per second. The operating systems and underlying algorithms also must be revamped to run well on these huge machines and take advantage of their computational power.

It’d be easy to identify dozens of such obstacles to exascale computing, says Robert Lucas, deputy director of the Information Sciences Institute at the University of Southern California. But earlier this year a panel he led identified just the top 10 in a report to the U.S. Department of Energy.

A bill directing the DOE to pursue exascale computing passed the U.S. House of Representatives before the fall recess. The American Supercomputing Leadership Act of 2014 now awaits Senate action.

And this month, leading up to a meeting at the SC14 supercomputing conference in New Orleans, the DOE’s Advanced Scientific Computing Advisory Committee (ASCAC) is releasing what it’s calling a “preliminary conceptual design” for an Exascale Computing Initiative (ECI) to achieve exascale computing by 2023.


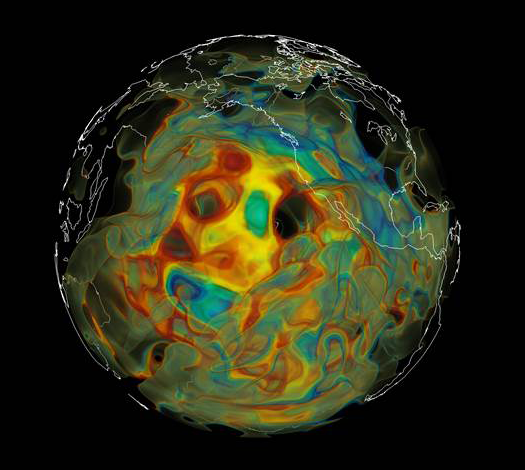

For decades, DOE has led the United States in developing and using the most advanced high-performance computing (HPC) technology, from the megaflops (millions of floating point operations per second) machines of the 1970s to today’s supercomputers, such as Titan, that are capable of petaflops (quadrillions of operations per second). HPC has helped DOE address a range of missions: developing new energy technologies, tracking climate change, ensuring the safety, security and effectiveness of the nation’s nuclear weapons, and more.

Continued progress, however, depends on even greater computational power. “If we don’t have exascale machines, or perhaps zettascale (10²¹ operations per second) further down the road, then there will be engineering problems you won’t be able to solve,” Lucas says.

Patricia Dehmer, acting director of the DOE Office of Science, directed ASCAC to identify the top 10 exascale challenges. Lucas headed a subcommittee of 23 HPC experts in hardware, algorithms and programming that examined the question and wrote the report. According to the report, the top 10 list, now a centerpiece of the nascent exascale initiative design, is grouped into categories and is not in rank order. The challenges include:

Energy efficiency: It would take as much energy as a small city consumes and cost millions of dollars a month to operate and cool an exascale computer built with today’s technology. DOE’s goal of holding power consumption to a maximum of 20 megawatts will require advances in energy efficiency, power delivery, architecture and cooling.
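The 20-megawatt cap implies a steep efficiency target. Dividing one exaflop (10¹⁸ operations per second) by 20 megawatts gives the sustained efficiency the whole machine would need, and inverting the ratio gives its energy budget per operation. This is back-of-the-envelope arithmetic based only on the two figures above, not a number quoted from the report:

$$
\frac{10^{18}\ \text{operations/s}}{20 \times 10^{6}\ \text{J/s}} = 5 \times 10^{10}\ \text{operations per joule} = 50\ \text{gigaflops per watt}
$$

$$
\frac{20 \times 10^{6}\ \text{J/s}}{10^{18}\ \text{operations/s}} = 2 \times 10^{-11}\ \text{J} = 20\ \text{picojoules per operation}
$$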

Interconnect technology: Systems that allow processors to talk to each other and to memory quickly and efficiently are vital, the report’s authors say. “Without a high-performance, energy-efficient interconnect, an exascale system would be more like the millions of individual computers in a data center, rather than a supercomputer.”

Memory technology: Researchers must evaluate new memory technologies for exascale use and focus on energy efficiency, minimizing data movement and holding down cost.

Exascale algorithms: Established science and engineering programs must be adapted to run on exascale machines. In some cases the underlying algorithms will have to be redesigned or reinvented.

Algorithms for discovery, design and decision: Methods and software will be needed to determine how much faith to put in an exascale simulation’s results and to find the best solutions to problems under given constraints.

Resilience and correctness: Exascale computers must supply correct and reproducible results, even as they recover from frequent component failures. “Getting the wrong answer really fast is of little value to the scientist,” the report says.
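Why component failures become frequent at this scale can be sketched with simple arithmetic. The Python snippet below is a minimal illustration, not taken from the report: it assumes components fail independently with exponentially distributed lifetimes, so their failure rates add and the system's mean time between failures (MTBF) is the per-component MTBF divided by the component count. The 10-year per-node MTBF and the node counts are hypothetical values chosen only for illustration.

```python
# Hypothetical illustration: system MTBF shrinks as the component count grows.
# Assumes independent, exponentially distributed failures, so failure rates add.

HOURS_PER_YEAR = 24 * 365


def system_mtbf_hours(component_mtbf_years: float, num_components: int) -> float:
    """Return the expected hours between failures anywhere in the system.

    Each component fails at rate 1 / component_mtbf; with num_components
    independent components the system fails at num_components / component_mtbf,
    so the system MTBF is component_mtbf / num_components.
    """
    if component_mtbf_years <= 0 or num_components <= 0:
        raise ValueError("MTBF and component count must be positive")
    return component_mtbf_years * HOURS_PER_YEAR / num_components


if __name__ == "__main__":
    # Hypothetical per-node MTBF of 10 years, at increasing machine sizes.
    for nodes in (1_000, 10_000, 100_000):
        mtbf = system_mtbf_hours(10, nodes)
        print(f"{nodes:>7} nodes -> system MTBF ~ {mtbf:.2f} hours")
```

Under these assumed numbers, a 100,000-node machine would see a failure somewhere roughly every 53 minutes, which is why recovery has to be routine rather than exceptional.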

Scientific productivity: New software engineering tools and programming environments are needed so computational scientists can use an exascale machine productively. Otherwise, they may spend months preparing to run an application for just a few hours or days.

The report recommends research directions to overcome each of these challenges, but warns that a revolutionary approach is needed – not an evolutionary strategy of incremental advancement.

DOE also must invest in research for the long run, including supporting exascale hardware and algorithm development to meet the missions of DOE and other federal agencies, the subcommittee says. And the department should promote training to ensure computational scientists are available to effectively use the new machines.

The report recommends that DOE create an Open Exascale System Design Framework to coordinate development and foster collaboration across disciplinary boundaries. The approach should follow co-design principles, encouraging collaboration between system and software designers and the scientists who will use the new machines for discovery.

“The system vendors, the software vendors and DOE scientists (should) all discuss the goals ahead of time,” Lucas says. “If there was an open environment, it would facilitate that.” The result is likely to be supercomputers more closely tailored for specific purposes, such as climate modeling, rather than for general use.