Fast in supercomputing used to be so simple. The fastest supercomputer was the one that could perform the most flops, perhaps landing the machine a coveted spot on the TOP500 listing of the world’s supercomputers.

Now scientists doing high-performance computing are slowing down and taking a new look at what fast really means with today’s supercomputers and the new problems they’re trying to solve.

Two ASCR-supported Department of Energy Early Career Research Award recipients are at the leading edge of this transformation to supercomputing that can do more with far less energy, and that can mix speed and scale to mine genetic data for meaningful links as no computer has done before.

Early Career Research awardee Peter Lindstrom of Lawrence Livermore National Laboratory (LLNL) wants to head off “a huge energy problem when it comes to next-generation computers. Today when you get time on a supercomputer, you’re typically charged in terms of the amount of time spent, and that’s going to change because power’s really going to be the key factor.”

It’s estimated that a next-generation exascale supercomputer (capable of a million trillion flops) envisioned for LLNL will consume about 50 megawatts of electricity – the same amount as the entire city of Livermore, Calif., population 80,000. A power-guzzling supercomputer doesn’t just raise costs; it slows computing. There are concerns that during warmer months a next-generation supercomputer would run at only half speed, given the challenges of keeping it cool enough to function. Already this balance between energy use, computational speed and actual usability has resulted in the creation of the Green500, a ranking of the world’s most energy-efficient supercomputers.

“We’re going to have to rewrite our codes to be more power efficient,” Lindstrom says. “And one of the key aspects of this is the power used in data movement. On next-generation supercomputers the power cost of moving data is really the critical metric for software.”

Lindstrom takes a three-pronged approach to minimizing data movement – and thus maximizing speed and energy efficiency – in supercomputing applications: data compression, streaming analysis and improved data organization.

Data compression is familiar to personal computer users in the form of JPEG image and PDF document files. In many cases, Lindstrom says, it’s computationally faster to focus more on data compression and decompression – the upstream end of the computing – to minimize the processor time and energy required for data movement. Lindstrom also is taking a cue from Netflix and other Internet music and video streaming services by developing a form of cost-efficient supercomputer parallel stream processing.
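The trade described above, spending some processor time on compression to cut the number of bytes that have to move, can be illustrated with a small sketch. This is not Lindstrom's method: the article does not describe his compressors, so the standard-library `zlib` codec stands in for them, and the function names `bytes_moved` and `stream_mean` are hypothetical. The second function shows the streaming idea in its simplest form, consuming data chunk by chunk instead of holding it all in memory.

```python
import zlib


def bytes_moved(payload: bytes, compress: bool) -> int:
    """Return how many bytes would cross a (hypothetical) link."""
    # zlib is only a stand-in for a purpose-built scientific compressor.
    wire = zlib.compress(payload, 6) if compress else payload
    return len(wire)


def stream_mean(chunks):
    """Streaming analysis: visit each chunk once, keep only running totals."""
    total = 0.0
    count = 0
    for chunk in chunks:
        total += sum(chunk)
        count += len(chunk)
    return total / count if count else 0.0


# Repetitive data (an assumed stand-in for smooth simulation output).
field = bytes(i % 16 for i in range(100_000))
raw = bytes_moved(field, compress=False)
packed = bytes_moved(field, compress=True)
print(f"raw bytes: {raw}, compressed bytes: {packed}")
print("streamed mean:", stream_mean([[1, 2], [3]]))
```

How much compression helps depends entirely on the data; highly regular data like this toy field shrinks a great deal, while noisy data may barely shrink at all.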

At the heart of Lindstrom’s research is improved data organization and management. A supercomputer with thousands of processors resembles a growing corporation in which efficient communication becomes more and more important to the organization’s overall efficiency and success. Without good overall communication, workers – in this case processors – aren’t performing at full potential.

“It doesn’t matter how much computation you have if there’s no data there to actually process,” Lindstrom notes. “In that case all those expensive processors are just sitting there idling, spinning, waiting for something to do.”

Lindstrom already has developed a new movement-minimizing algorithm for ghost data – the information available to a processor about its neighboring processor’s work, something particularly critical in visualizations.
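To make the idea of ghost data concrete, here is a minimal sketch, assuming a one-dimensional array split among a few "processors" (plain Python lists here). Each piece carries one extra value from each neighbor so that a three-point average can be computed locally. The function names are hypothetical, and this shows only the basic ghost-cell pattern, not Lindstrom's movement-minimizing algorithm, which the article does not detail.

```python
def split_with_ghosts(data, parts):
    """Split data into `parts` chunks, each padded with one ghost value per side."""
    size = len(data) // parts
    chunks = []
    for p in range(parts):
        lo = p * size
        hi = (p + 1) * size if p < parts - 1 else len(data)
        # Ghost values are copies of the neighbor's edge values
        # (or of the chunk's own edge value at the domain boundary).
        left = data[lo - 1] if lo > 0 else data[lo]
        right = data[hi] if hi < len(data) else data[hi - 1]
        chunks.append([left] + data[lo:hi] + [right])
    return chunks


def smooth(chunk):
    """Three-point average over the interior of a ghost-padded chunk."""
    return [(chunk[i - 1] + chunk[i] + chunk[i + 1]) / 3
            for i in range(1, len(chunk) - 1)]


data = [float(x * x) for x in range(12)]

# "Parallel" version: each chunk is smoothed using only its own ghost-padded copy.
parallel = [v for chunk in split_with_ghosts(data, 3) for v in smooth(chunk)]

# Serial reference: pad the whole array once and smooth it in one pass.
serial = smooth([data[0]] + data + [data[-1]])

print(parallel == serial)
```

Every ghost value is a byte that had to be moved from a neighbor, which is why reducing or reorganizing that traffic matters at scale.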

“There’s almost limitless improvement that you can achieve by better data organization,” Lindstrom says. “If you start looking at the way hardware works today, accessing a single byte on disk might take over a million times longer than it might take to access that byte if it sits very close, right on the processor.”

In practice, he says, the combination of his three data movement-minimizing techniques could readily achieve improvements in computational speed of between 10 and 100 times – taking the heat off future supercomputers and enabling them to reach full potential.

A dozen years after the completion of the Human Genome Project, the promise of a genetic revolution in medicine and biology is mired in a data deluge. The problem: It’s proven far easier to amass genetic information than to analyze it. Today, sequencers can pump out the billion or so letters of a full genome in less than an hour. Yet it’s proving much more computationally difficult to find the meaningful links between genes, proteins and physical traits, such as diseases.

“What is really amazing to me is that biology is now starting to dictate the development in data-intensive computing,” says Ananth Kalyanaraman, an assistant professor in the School of Electrical Engineering and Computer Science at Washington State University.

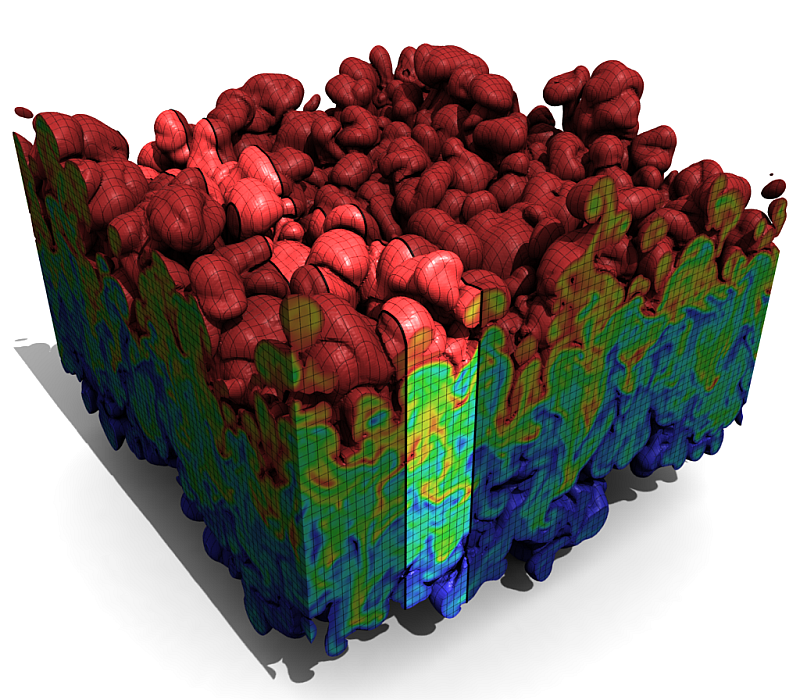

Data-intensive computing is a fundamentally different type of parallel computing than the physics simulations for which supercomputers are famous. In data-intensive computing, the emphasis isn’t on simulations but instead on processing huge volumes of data – typically terabytes or petabytes – and focusing on the data’s movement, storage and organization.

To help biologists tease out the hidden links between genes, proteins and physical traits from this Big Data, Kalyanaraman is developing graph-theory-based parallel algorithms. In a graph, data elements are represented as nodes and the relationships between them as links, or edges; finding groups of nodes that are densely linked to one another is a process called community detection. With the Early Career Research Award, he’s looking at extending community detection methods across a range of parallel supercomputing platforms.

“Within these large graphs we’re trying to group data elements based on highly shared properties,” Kalyanaraman explains. He’s already implemented simpler clustering functions for supercomputers to build and analyze draft assemblies of multiple plant genomes, including the apple and corn.
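As a rough illustration of grouping graph elements by shared properties, the sketch below clusters nodes by merging any two that are joined by a sufficiently strong link (single-linkage clustering with a union-find structure). This is a deliberately simple stand-in, not a full community detection method and not Kalyanaraman's code; the node names, similarity scores and threshold are invented for the example.

```python
def cluster(nodes, weighted_edges, threshold):
    """Group nodes connected by edges whose weight is at least `threshold`."""
    parent = {n: n for n in nodes}

    def find(x):
        # Follow parent pointers to the group's representative,
        # shortening the path along the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b, weight in weighted_edges:
        if weight >= threshold:
            root_a, root_b = find(a), find(b)
            if root_a != root_b:
                parent[root_b] = root_a  # merge the two groups

    groups = {}
    for n in nodes:
        groups.setdefault(find(n), []).append(n)
    return sorted(sorted(g) for g in groups.values())


# Hypothetical proteins with made-up pairwise similarity scores.
proteins = ["p1", "p2", "p3", "p4", "p5"]
similarities = [
    ("p1", "p2", 0.90),
    ("p2", "p3", 0.80),
    ("p3", "p4", 0.20),  # weak link: ignored at threshold 0.5
    ("p4", "p5", 0.95),
]
print(cluster(proteins, similarities, threshold=0.5))
```

Real community detection methods weigh the density of links inside a group against links leaving it, so they can split groups that this simple merging rule would lump together.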

“What we hope to achieve are new parallel methods for clustering graphs suited for different supercomputing architectures,” Kalyanaraman says.

This is a major challenge. Graph-based data-intensive computing requires a new way of thinking about supercomputing speed. Supercomputer architectures that are well suited to physics simulations can be slowpokes when it comes to parsing data-intensive graph analysis. Only one supercomputer makes the top 10 of both the TOP500 supercomputers and the Graph 500, a website that ranks machines based on their speed in running graph algorithms.

Kalyanaraman recently developed a graph-based parallel algorithm that shows the technique will significantly improve scientists’ ability to model, mine and compare vast biological databases. These include protein family inventories and metagenomics data – enormous amounts of genetic and protein data collected from a single environment, such as a termite’s gut.

The algorithm uses what’s known as a MapReduce method, “a simpler way to write graph analysis code for a parallel machine,” he says. In a proof-of-concept trial, the MapReduce parallelization technique was almost 400 times faster than existing codes – locating the links between 8 million points of protein data in the same time it takes an existing serial code to analyze just 20,000 proteins.
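The MapReduce pattern itself is easy to sketch, even though the article does not describe Kalyanaraman's algorithm in detail. The example below is a single-process imitation, under the assumption that the input is a list of links between proteins: a map step emits key-value pairs, a shuffle step groups them by key, and a reduce step summarizes each group, here into a neighbor list per protein. All function names and data are hypothetical; on a real parallel machine the map and reduce steps would run on many processors at once.

```python
from collections import defaultdict


def map_phase(edge):
    """Map: for one link, emit a (node, neighbor) pair in each direction."""
    a, b = edge
    yield a, b
    yield b, a


def shuffle(pairs):
    """Shuffle: gather all values that share the same key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: collapse one node's values into a sorted, duplicate-free list."""
    return key, sorted(set(values))


def run(edges):
    pairs = (kv for edge in edges for kv in map_phase(edge))
    return dict(reduce_phase(k, vs) for k, vs in shuffle(pairs).items())


# Made-up links between hypothetical proteins; one link appears twice.
links = [("a", "b"), ("b", "c"), ("a", "b")]
print(run(links))
```

The appeal of the pattern is that each map call and each reduce call is independent of the others, so the framework, not the programmer, decides how to spread them across processors.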

Similarly, working with DOE Pacific Northwest National Laboratory computational biologist William Cannon, Kalyanaraman is developing parallel algorithms that will accelerate community detection of amino acid sequence similarities within a library of proteins.

Says Kalyanaraman: “Supercomputers are designed not only to solve problems faster. They should also scale to solve much larger problem sizes faster. That’s what’s required in data-intensive biological computing and the aspect that differentiates this work.”