Part of the Science at the Exascale series.

After decades of neglect, the nuclear energy industry is playing catch-up.

The Three Mile Island nuclear plant accident in 1979 and Chernobyl reactor explosion in 1986 led many utilities and governments to nearly abandon research into fission power. The earthquake and tsunami in Japan earlier this year that triggered the Fukushima nuclear disaster, plus lesser scares in the United States from a flood and a recent freak East Coast earthquake, reopened debate and scrutiny worldwide.

Yet concerns about climate change, energy security and dwindling fossil-fuel resources are fueling a persistent look at nuclear energy. Engineers are researching and designing new reactors and looking for ways to increase the life and efficiency of existing plants.

Many of the industry’s computational models behind those designs, however, “don’t have much predictive science,” says Robert Rosner, a senior fellow in the Computation Institute, a joint venture between Argonne National Laboratory and the University of Chicago, where Rosner is also a professor in the departments of physics and of astronomy and astrophysics. The models are largely based on empirical data gathered from early experiments and decades of reactor operation.

With the hiatus in nuclear energy research ending, a new generation of predictive computer models and tools is coming on line, enabled by high-performance computers. Besides historical data, these models rely on what researchers call “first principles” physics and chemistry, advanced numerical algorithms and high-fidelity experimental results to simulate the intricacies of fission and fluid flow. This basic behavior transpires at both tiny and enormous space and time scales but drives overall reactor operation. Capturing this broad expanse of scales and physics is a formidable computational task.

Petaflops-capable computers, which can execute a quadrillion floating-point operations per second (flops), have only recently provided sufficient power to model parts of a single nuclear fuel assembly. To simulate aspects of whole reactor cores containing hundreds of fuel assemblies will require computers capable of an exaflops – a million trillion calculations per second. An exascale machine will be about 1,000 times as powerful as today’s petaflops machines.
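The factor of 1,000 is simply the ratio of the metric prefixes peta (10^15) and exa (10^18):

```latex
\begin{aligned}
1~\text{petaflops} &= 10^{15}~\text{floating-point operations per second} \\
1~\text{exaflops}  &= 10^{18}~\text{floating-point operations per second} \\
\frac{10^{18}}{10^{15}} &= 10^{3} = 1{,}000
\end{aligned}
```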

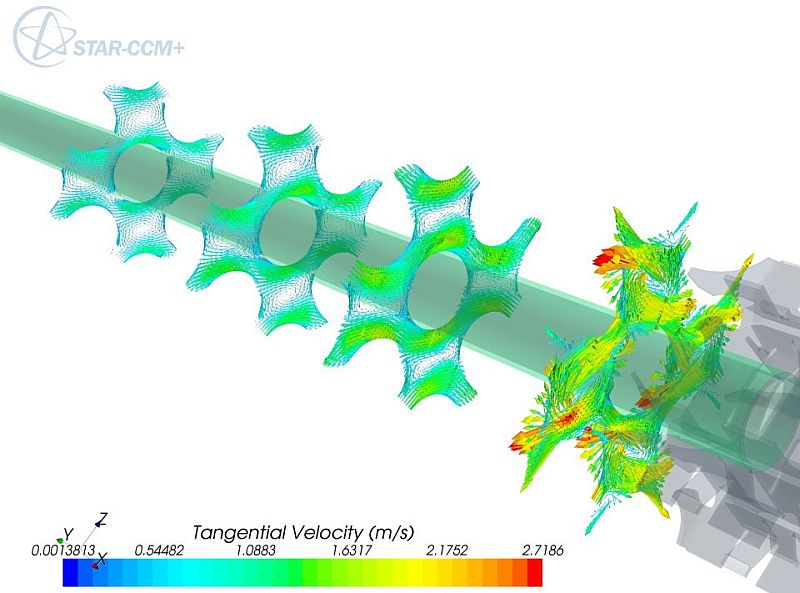

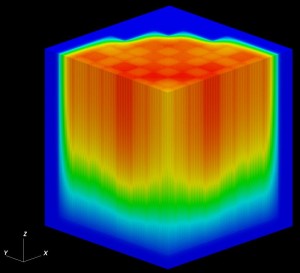

Using Denovo, a multiscale neutron transport code, Oak Ridge National Laboratory researchers simulated a quarter of a nuclear reactor core in three dimensions. The visualization shows fast neutron flux density (a measure of neutron radiation intensity) in a cut through the core midplane and in the lower region of the core. Red regions have high fast neutron flux density; blue regions have low fast neutron flux density. The simulations were performed on the Jaguar computer system in the ORNL Leadership Computing Facility. Andrew Godfrey (CASL Advanced Modeling Applications), Josh Jarrell, Greg Davidson, Tom Evans (Denovo Team), ORNL.

Exascale computing power is expected within the decade. Nuclear engineers and computational and computer scientists already are pondering the technical obstacles and the problems such machines could tackle. Rosner, a former director of Argonne National Laboratory near Chicago, was co-chairman of a 2009 workshop to consider the issues. The Department of Energy’s offices of Nuclear Engineering and Advanced Scientific Computing Research sponsored the meeting, one in a series that considered exascale computing’s impact on science.

Exascale computing can potentially help make existing and future reactors safer, more economical and more efficient, says Kord Smith, chief scientist for Studsvik Scandpower.

“We haven’t effectively used petascale yet, but it’s easy to see that many of our most challenging problems couldn’t be solved” on those computers, says Smith, who has helped design much of the software Studsvik sells to analyze and optimize a plant’s nuclear fuel use. “That next factor of a thousand is huge in terms of being able to get over the hump.”

A nuclear reactor splits atoms of an element – usually uranium – held in thousands of long fuel pins grouped into bundles called assemblies. The splitting atoms release neutrons that split more atoms and generate heat in a controlled chain reaction. A liquid coolant, usually water, circulates around the hundreds of fuel assemblies in the reactor core, carrying away heat to make steam that turns an electrical generator.
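The idea of a “controlled” chain reaction is often summarized, in a deliberately simplified textbook picture, by a multiplication factor k: the ratio of neutrons in one generation to those in the generation before. The sketch below assumes that picture and nothing more (it ignores delayed neutrons, geometry and temperature feedback; the function name and k values are hypothetical). It shows why control matters: below k = 1 the neutron population dies away, at k = 1 it holds steady, and above k = 1 it grows geometrically.

```python
def neutron_population(k: float, generations: int, n0: float = 1000.0) -> list[float]:
    """Toy point model of a chain reaction (hypothetical, for illustration).

    Each generation's neutron count is the previous count multiplied by k.
    Returns the population for generation 0 through `generations`.
    """
    populations = [n0]
    for _ in range(generations):
        populations.append(populations[-1] * k)
    return populations


if __name__ == "__main__":
    for k in (0.95, 1.0, 1.05):
        final = neutron_population(k, generations=50)[-1]
        print(f"k = {k:.2f}: {final:,.0f} neutrons after 50 generations")
```

In this simplified view, a reactor running at steady power is held at k = 1 (critical), and control systems nudge k slightly above or below that value to raise or lower power.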


The basic technology has been around since the 1950s – and that’s one of the issues, Rosner says. The costs and risks of innovation have been too great for utilities to bear. “Everything was done extremely conservatively, so the safety margins are huge,” he adds. “If you look at what’s being built today, they’re basically variants of designs that have been around for 30 to 40 years,” with incremental improvements in safety and efficiency. “There has not been a revolution in what is actually built.”

Today’s models typically make meticulous calculations of local processes, then propagate those results through a global representation, says Smith, who helped found Studsvik’s U.S. branch 27 years ago. It’s impossible to make existing models work directly with fine resolution. “It’s a dated approximation that we’ve pushed as far as we can with 30-year-old methods.”

More precise models are needed to characterize reactor processes like neutron transport (the emission, motion and absorption of fission neutrons through the core), changes in the fissionable material and complex coolant behavior. Engineers also want to predict when nucleate boiling will start or when fuel cladding will fail.
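Neutron transport, as defined above, can be illustrated with a deliberately tiny Monte Carlo sketch. Everything here is an assumption for illustration: a one-dimensional slab, a single neutron energy, neutrons that move only forward or backward, and made-up cross sections (`sigma_t` for the total collision rate per centimeter, `sigma_a` for absorption). The function name `slab_transmission` is hypothetical, and this stochastic approach is not presented as the method used by the production codes named in this article, which must resolve full three-dimensional geometry, energy and direction.

```python
import math
import random


def slab_transmission(sigma_t: float, sigma_a: float, thickness: float,
                      n_neutrons: int = 100_000, seed: int = 1) -> float:
    """Estimate the fraction of neutrons that pass through a 1-D slab.

    Toy "rod model": neutrons travel only in the +x or -x direction, fly an
    exponentially distributed distance between collisions, and at each
    collision are absorbed with probability sigma_a / sigma_t; otherwise they
    scatter into a randomly chosen direction. Units: 1/cm and cm (hypothetical).
    """
    rng = random.Random(seed)
    transmitted = 0
    for _ in range(n_neutrons):
        x, direction = 0.0, 1.0  # enter at the left face, heading right
        while True:
            # Free-flight distance: exponential with mean 1 / sigma_t.
            # 1.0 - rng.random() lies in (0, 1], so the logarithm is defined.
            x += direction * (-math.log(1.0 - rng.random()) / sigma_t)
            if x >= thickness:
                transmitted += 1  # escaped through the right face
                break
            if x < 0.0:
                break  # leaked back out through the left face
            if rng.random() < sigma_a / sigma_t:
                break  # absorbed at the collision site
            direction = rng.choice((-1.0, 1.0))  # isotropic scatter in 1-D
    return transmitted / n_neutrons


if __name__ == "__main__":
    absorber = slab_transmission(sigma_t=1.0, sigma_a=1.0, thickness=1.0)
    scatterer = slab_transmission(sigma_t=1.0, sigma_a=0.2, thickness=1.0)
    print(f"pure absorber: {absorber:.3f} (analytic exp(-1) = {math.exp(-1):.3f})")
    print(f"mostly scattering: {scatterer:.3f}")
```

For a pure absorber (`sigma_a` equal to `sigma_t`) the estimate should approach the analytic value exp(-sigma_t × thickness), which makes a convenient self-check; adding scattering lets some collided neutrons still reach the far face, so transmission rises.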

Models could move to that predictive-science base with petascale and exascale computing. That could mean replacing crude safety margins with “conservative safety margins derived from a better-educated design, a science-based design,” Rosner says. “You’re not reducing any aspect of safety. You’re using a better understanding of how things work to replace overdesign.” He likens it to the auto industry, which once relied on heavy steel to make cars safe. Today’s vehicles are lighter and more efficient, yet safer, thanks to new systems and a better understanding of materials and forces.

Predictive models also could help cut the time – and cost – to prototype and build new reactor designs, Rosner says. For example, engineers must test new fuel rod designs or fuel compositions to see how they hold up as the fuel is consumed, a process that takes years. The data are used to fine-tune models. “It’s extremely expensive to do those experiments,” Smith says. With predictive modeling, “we’re on the verge of being able to compute those kinds of things. The fuel designers are looking at this and salivating.”

Modeling also can help devise new, less-expensive modular reactors, with parts manufactured in a single facility and transported to a site for installation. Taken together, Rosner says, these efforts can help “break the cost curve” that makes new nuclear plants hugely expensive.

Besides enabling revolutionary designs, predictive models can help existing nuclear plants continue operating longer and at higher power. “We’re in the midst of a major program of life extension,” Rosner says, raising questions about the durability of major plant components like containment and pressure vessels. With the right models, researchers can gauge how materials withstand reactor conditions.

Scientists already are building models that can calculate many nuclear plant processes and properties, including fluid flow and neutron transport in a fuel assembly. “That’s huge, because you now have ways of looking inside the pin bundle that have never been possible before,” Rosner says. Researchers could computationally test designs before prototyping.

DOE’s Consortium for Advanced Simulation of Light Water Reactors (CASL), based at Oak Ridge National Laboratory (ORNL), is designed to build simulation capabilities that help utilities get the most out of existing reactors. CASL researchers plan to do that by moving fundamental reactor models from approximations to high-fidelity, coupled-physics representations, says Jess Gehin, a CASL researcher and ORNL senior nuclear research and development manager.

The petascale computers now available give researchers much of the capacity needed to do that, CASL leader Doug Kothe says. “Exascale opens up a lot of interesting possibilities. An operational core model is going to be memory intensive – it’s not just flops. More and more memory allows you to use more and more complex physical models and fewer assumptions, hence, at least potentially, less error.”

The ideal model, Smith says, would capture all the coolant flow behavior within a reactor pressure vessel about 15 meters high and five meters across and housing tens of thousands of fuel pins. It also would portray the interaction physics that drives neutron transport. That’s a tremendous challenge, says ORNL’s Tom Evans, a CASL researcher and leader of a project to build Denovo, a multiscale neutron transport code.

It will take years to even come close to building a true high-fidelity reactor core model, Smith says. To get there, applied mathematicians and computer scientists will need algorithms that cope with huge spans of time and space. Their codes also must tackle combinations of physical processes, including the behavior of two phases of matter – liquids and gases – and the transition of one to the other.

DOE is pursuing these mid-term goals through CASL and other programs. Looking further ahead, Rosner and others also are launching an exascale co-design center for nuclear energy. Center researchers will collaborate to ensure that the exascale computers and algorithms that engineers and mathematicians develop are suited to nuclear energy challenges.

Although computer models can direct experiments, they’ll never fully replace them, Rosner says. They’re needed for verification and validation – ensuring simulations solve the right equations in the right ways. Nuclear energy regulators also require uncertainty quantification (UQ) for codes used to model and design reactor operations.
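One common flavor of verification checks that a numerical method converges to a known exact answer at its theoretically expected rate as resolution increases. The sketch below assumes that approach and uses a simple finite-difference derivative as a hypothetical stand-in for a reactor code’s discretization: because the central-difference error should shrink roughly fourfold each time the step size is halved, the observed order of accuracy should come out close to 2. The function names are illustrative only.

```python
import math


def central_difference(f, x: float, h: float) -> float:
    """Second-order central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def observed_order(f, dfdx, x: float, h: float) -> float:
    """Estimate the order of accuracy from the errors at step sizes h and h/2.

    If error ~ C * h**p, then error(h) / error(h/2) ~ 2**p, so
    p = log(error(h) / error(h/2)) / log(2).
    """
    exact = dfdx(x)
    err_coarse = abs(central_difference(f, x, h) - exact)
    err_fine = abs(central_difference(f, x, h / 2.0) - exact)
    return math.log(err_coarse / err_fine) / math.log(2.0)


if __name__ == "__main__":
    # sin has the exactly known derivative cos, so the true error is computable.
    p = observed_order(math.sin, math.cos, x=1.0, h=0.1)
    print(f"observed order = {p:.3f} (theory predicts 2)")
```

An observed order well below the theoretical one would point to a coding or discretization error, which is the kind of problem verification is meant to catch before validation against experiments begins.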

“It’s going to be absolutely necessary to quantify the uncertainty” if engineers want to rely on modeling, Gehin says. But UQ also can tell researchers which physics and parameters most influence model outcomes. “Those are the areas where exascale may pay off most: not relying on intuition but actually using simulation to help us figure out where we need to focus our attention.”
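A simple sampling-based form of UQ, assumed here purely for illustration, draws the uncertain inputs from assumed probability distributions, runs the model on each draw and looks at the spread of the outputs. The same samples give a rough sensitivity ranking of the kind Gehin describes; this sketch uses the absolute correlation between each input and the output, which is only a reasonable measure for nearly linear models. `toy_model`, its coefficients and the input distributions are all hypothetical stand-ins for a real reactor simulation.

```python
import random
import statistics


def toy_model(a: float, b: float, c: float) -> float:
    """Hypothetical stand-in for a reactor simulation output."""
    return 3.0 * a + 0.5 * b + 0.1 * c


def pearson(xs, ys) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def propagate_and_rank(n_samples: int = 20_000, seed: int = 42):
    """Propagate input uncertainty through toy_model by Monte Carlo sampling.

    Returns the output mean, the output standard deviation, and the input
    names ranked by |correlation with the output|, most influential first.
    """
    rng = random.Random(seed)
    names = ("a", "b", "c")
    # Hypothetical uncertainties: every input ~ Normal(mean=1.0, sd=0.1).
    draws = {name: [rng.gauss(1.0, 0.1) for _ in range(n_samples)] for name in names}
    outputs = [toy_model(*point) for point in zip(*(draws[n] for n in names))]
    ranking = sorted(names, key=lambda n: abs(pearson(draws[n], outputs)), reverse=True)
    return statistics.fmean(outputs), statistics.stdev(outputs), ranking


if __name__ == "__main__":
    mean, sd, ranking = propagate_and_rank()
    print(f"output = {mean:.3f} +/- {sd:.3f}; inputs by influence: {ranking}")
```

Here input `a` dominates the output’s spread, so in this toy setting narrowing the uncertainty in `a` would pay off far more than refining `c`, which is the sense in which UQ can tell researchers where to focus their attention.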