Part of the Science at the Exascale series.

Researchers building and programming potent computers of the future face many of the same obstacles they’ve surmounted to create and use today’s most powerful machines.

Only more so. Much more so.

Computer designers, applied mathematicians, programmers and hardware vendors already are considering how to overcome these barriers on the road from petaflops (a quadrillion math operations per second) to an exaflops (a thousand petaflops).

But if exascale computing is to realize its potential, there must be an unprecedented level of collaboration between these experts and researchers who will use it to tackle fundamental questions in biology, like the best ways to convert woody materials into fuel; in fusion energy, which creates small versions of the sun’s power here on earth; in astrophysics and particle physics, such as the interactions of immense galaxies and tiny elements of matter; in basic energy sciences, like the properties of materials to capture renewable energy; and in other fields.

The level of collaboration must be “huge” if exascale is to succeed for science, says Jacqueline Chen, a distinguished member of the technical staff at Sandia National Laboratories and a top combustion researcher.

“We’re at an important crossroads in high-performance computing,” says Chen, leader of one of three new co-design research centers. An exaflops computer’s size and the constraints on its power consumption and cost mean “it’s important that we revisit everything in the stack,” from applications to hardware to systems software.

This close partnership is the essence of “co-design” – involving researchers from multiple applications in planning, resulting in exascale computers suitable for general purposes. It’s not an entirely new concept, says Daniel Hitchcock, acting associate director of the Department of Energy’s Office of Science for Advanced Scientific Computing Research, but until now co-design has been largely informal. “That’s been OK in the past,” he says, “but the systems have gotten complicated enough now that that won’t work anymore.”

Co-design has emerged as a major theme in crosscutting issues for exascale computing, says Paul Messina, director of science for the Leadership Computing Facility at Argonne National Laboratory, who was co-chairman of a DOE scientific grand challenges workshop on the subject. With every science domain facing similar obstacles to exascale, “we very much wanted to help them all instead of having a custom solution” for each. Co-design involving different science communities can help meet that goal.

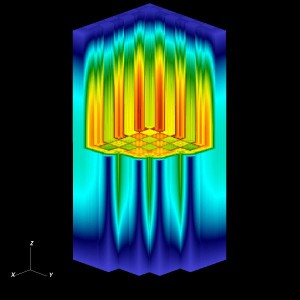

Simulation of fuel pin power distribution in a quarter of a nuclear reactor core using DENOVO, a multiscale neutron transport code developed at Oak Ridge National Laboratory (ORNL). Credit: Andrew Godfrey (CASL Advanced Modeling Applications), Josh Jarrell, Greg Davidson, Tom Evans (DENOVO Team), ORNL.

Involving the larger computational science community in drafting exascale plans is key, says Pete Beckman, director of the Exascale Technology and Computing Institute at Argonne. If systems software – the operating system, libraries of basic functions and other core programs – or programming models must be changed, “we really don’t want that to be done by two or three people who are representing their favorite applications.” Instead, scientists from many different disciplines must be included.

Many scientific computing codes with decades of development behind them will need to be revamped to run on exascale, says Michael Heroux, distinguished member of the technical staff in the Computing Research Center at Sandia. “It’s not an easy thing to do. It often requires a ubiquitous change because we’ve assumed a certain kind of compute model, a certain kind of design of the algorithms and software. We built the applications to this set of assumptions.”

Nonetheless, Heroux is excited, partly because fundamental changes necessary to run exascale computers also will empower new, faster multiple-processor laptop and desk-side machines. “I try to imagine what unleashing this kind of computational power can mean in all these different settings.”

Today’s petascale computers divide huge problems into parts and distribute them among thousands of chips, many with multiple processing cores, for solution in parallel. Some machines also use graphics processing units (GPUs), originally designed for video game consoles, to accelerate computation. But something will have to change if machines are to hit exaflops.

First, processors are no longer increasing in speed, as they did for decades. Even if they were, faster processors also consume more power; to hit an exaflops, a computer would need more than 100 times as many CPUs as are in today’s 2-petaflops machines. Those processors would have to be almost five times as fast as those currently available and would consume an estimated 220 megawatts of electricity – as much as tens of thousands of homes.

Electric power for such a machine would cost $200 million to $300 million a year, “and that’s just not sustainable,” Hitchcock says. “Our goal is to figure out how you can bring an exascale computer in around 20 megawatts.” If that’s feasible, it also means a system like Oak Ridge National Laboratory’s Jaguar, a 2-petaflops Cray computer, “becomes a system you could imagine having in your office and plugging into a wall. That means you revolutionize the whole (information technology) industry.”
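The cost figure can be checked with simple arithmetic. The sketch below is illustrative only: the function name and the utility rates of $0.10 and $0.15 per kilowatt-hour are assumptions, not numbers from the workshop or from Hitchcock.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; leap years ignored


def annual_power_cost(megawatts, dollars_per_kwh):
    """Yearly electricity bill, in dollars, for a machine drawing constant power."""
    kilowatt_hours = megawatts * 1_000 * HOURS_PER_YEAR
    return kilowatt_hours * dollars_per_kwh


# Assumed utility rates in dollars per kWh; not figures from the article.
for rate in (0.10, 0.15):
    for megawatts in (220, 20):
        cost = annual_power_cost(megawatts, rate)
        print(f"{megawatts:>3} MW at ${rate:.2f}/kWh: ${cost / 1e6:,.0f} million a year")
```

At those assumed rates, a 220-megawatt machine would run up a bill of roughly $190 million to $290 million a year, in line with the range quoted above, while a 20-megawatt machine would cost on the order of $20 million.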

The processor speed and power factors mean the jump to exascale speed mostly will come from employing more processor cores – as many as a billion – instead of using ones that calculate more swiftly. “If you have a job, you need to work faster or you can add more people. But at some point you can’t work any faster,” Beckman says. “That’s sort of where we are with clock speed. We can’t make the workers work any faster, so the only way to get improvement is to add more of them and arrange them differently.”

This hardware concurrency, as computer scientists call it, will place many cores on each node, a grouping of processors that share memory. “How you program the node is a critical question,” Heroux says. With perhaps thousands of cores on each node, parallel-processing algorithms must take a fine-grained approach that is the focus of much research today.

For best performance, a huge number of cores usually requires a correspondingly huge amount of memory, but memory is expensive and power-hungry. Jaguar, Oak Ridge National Laboratory’s petaflops computer, has about 1.3 gigabytes of memory per core; an exaflops machine may have less than half that to rein in costs and electricity consumption. Yet unless memory increases in proportion with computing power, exaflops “will not translate into a 1,000-fold increase in capability,” according to the grand challenges workshop report.

Moving data to and from memory demands even more energy; an exascale computer’s memory system could consume between 200 and 300 megawatts.

To hold down demand for memory and minimize data movement, “we have to face doing a lot more arithmetic operations per access to memory,” Messina says. Applied mathematicians may have to rearrange their numerical algorithms or recast the equations that drive them. “People will indeed be really rethinking their approach, as opposed to just reimplementing their software using the same approach.”
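One rough way to picture “arithmetic operations per access to memory” is to count floating-point operations per byte moved. The sketch below compares two textbook kernels under simplifying assumptions: 8-byte numbers, and a matrix multiply in which each matrix crosses the memory system only once, which real machines only approximate. The function names are illustrative.

```python
BYTES_PER_DOUBLE = 8  # assumes double-precision numbers


def vector_update_intensity(n):
    """y = a*x + y on length-n vectors: 2n flops; read x and y, write y."""
    flops = 2 * n
    bytes_moved = 3 * n * BYTES_PER_DOUBLE
    return flops / bytes_moved


def matrix_multiply_intensity(n):
    """C = A*B on n-by-n matrices: 2n^3 flops; idealized one pass per matrix."""
    flops = 2 * n**3
    bytes_moved = 3 * n**2 * BYTES_PER_DOUBLE
    return flops / bytes_moved


print(vector_update_intensity(1_000_000))  # stays near 1/12 flop per byte at any size
print(matrix_multiply_intensity(1_200))    # grows in proportion to n
```

The vector update does the same small amount of arithmetic per byte no matter how large the problem, while the matrix multiply does more work per byte as it grows. Algorithms of the second kind are the ones that make better use of scarce memory traffic.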

That includes system software, says Beckman. Over decades, researchers have developed and improved these core programs. For exascale, “not all of them have to be redesigned from scratch, but some of them will.”

Executing parallel processing on millions of cores also will magnify many of the issues researchers face when using today’s most powerful computers.

For example, petascale machines struggle with latency – a lag in retrieving data. To hide latency, processors are programmed to call for a new piece of data before they work on information they have. But with exaflops capacity and limited memory, the old techniques may not function so well. “You have to work really hard to keep the processors busy because they can go really fast,” Messina says. “Keeping them fed has always been an issue but now it’s much more of an issue.”
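The latency-hiding idea Messina describes, asking for the next piece of data before working on the current one, can be sketched in a few lines. This is a toy model, not how any particular machine does it: `fetch` stands in for a slow memory or network access, and a background thread plays the role of the hardware that retrieves data while the processor computes.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(i):
    """Hypothetical slow data access; the sleep stands in for latency."""
    time.sleep(0.01)
    return list(range(4 * i, 4 * i + 4))


def process(chunk):
    """Stand-in for the real computation on one chunk."""
    return sum(x * x for x in chunk)


def run_prefetched(n_chunks):
    results = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = pool.submit(fetch, 0)
        for i in range(n_chunks):
            chunk = pending.result()  # wait only if the data is not here yet
            if i + 1 < n_chunks:
                pending = pool.submit(fetch, i + 1)  # request the next chunk now
            results.append(process(chunk))  # compute while the fetch is in flight
    return results


print(run_prefetched(3))
```

The trick works only while there is room to hold the chunk being fetched alongside the one being processed, which is part of why less memory per core makes latency harder to hide.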

Load imbalance – inefficient allocation of work to processors, leading some to sit idle while others labor to finish – also is expected to worsen on exascale computers. Software tools must reallocate work on the fly, Messina says.
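A small simulation shows why reallocating work on the fly matters. The sketch below assumes made-up task costs and just two workers; it compares handing out fixed blocks of tasks up front with giving each task to whichever worker frees up first. Both function names are illustrative.

```python
import heapq


def static_makespan(costs, n_workers):
    """Finish time when tasks are split into fixed contiguous blocks up front."""
    block = -(-len(costs) // n_workers)  # ceiling division
    return max(sum(costs[i:i + block]) for i in range(0, len(costs), block))


def dynamic_makespan(costs, n_workers):
    """Finish time when each task goes to the worker that becomes free first."""
    free_at = [0] * n_workers
    heapq.heapify(free_at)
    for cost in costs:
        earliest = heapq.heappop(free_at)
        heapq.heappush(free_at, earliest + cost)
    return max(free_at)


costs = [8, 8, 8, 8, 1, 1, 1, 1]  # hypothetical task costs, in arbitrary time units
print(static_makespan(costs, 2))   # one worker gets all the expensive tasks
print(dynamic_makespan(costs, 2))  # work is spread as workers free up
```

With these numbers the fixed split takes 32 time units because one worker sits nearly idle, while the on-the-fly version finishes in 18, the best two workers can do with 36 units of work.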

Similarly, exascale computers also must dynamically compensate for faults – a near certainty when running complex programs on machines with millions of cores and petabytes of memory on disk drives and solid-state circuits. Operating systems and tools must be able to recognize and report failures, and to stop, move or restart processes to minimize disruptions.
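One long-standing way to stop and restart a process after a fault is checkpointing: saving progress periodically so a restarted job resumes from the last save instead of from the beginning. The sketch below is a minimal single-process illustration with a simulated failure. The file name, checkpoint interval and the `run` and `demo` functions are all assumptions for the example, not a description of any exascale system software.

```python
import json
import os
import tempfile


def run(steps, ckpt_path, fail_at=None, every=10):
    """Sum 0..steps-1, saving progress every `every` steps and resuming if possible."""
    state = {"step": 0, "total": 0}
    if os.path.exists(ckpt_path):
        with open(ckpt_path) as f:
            state = json.load(f)  # pick up where the last checkpoint left off
    while state["step"] < steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated node failure")
        state["total"] += state["step"]
        state["step"] += 1
        if state["step"] % every == 0:
            tmp_path = ckpt_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, ckpt_path)  # swap in the new checkpoint in one step
    return state["total"]


def demo():
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "checkpoint.json")
        try:
            run(100, path, fail_at=25)  # fails after checkpoints at steps 10 and 20
        except RuntimeError:
            pass
        return run(100, path)  # restarts from step 20, not from step 0


print(demo())
```

The restarted run repeats only the five steps done since the last checkpoint and still arrives at the same answer as an uninterrupted run.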

Finally, there’s the issue of moving, storing and analyzing the data that exascale computers generate. Traditionally, data are stored on servers and then analyzed with visualization and other tools. But moving and depositing data from exascale simulations will be expensive – in computation and energy. To cut the cost, algorithms may incorporate in-situ analysis: Instead of writing results to disk, researchers would analyze them as the work is done. “That will require some rethinking and restructuring, but it may be a good payoff,” Messina says.
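A simple flavor of in-situ analysis is computing summary statistics as values are produced, so the raw values never have to be stored. The sketch below uses Welford's running mean and variance method as one example of such a streaming calculation; the class and function names are illustrative, and a real simulation would feed in its own output instead of the short list shown here.

```python
class RunningStats:
    """Mean and variance updated one value at a time (Welford's method)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    @property
    def variance(self):
        """Population variance of the values seen so far."""
        return self.m2 / self.n if self.n else 0.0


def analyze_in_situ(values):
    """Consume values as they arrive; nothing is written to disk or kept in a list."""
    stats = RunningStats()
    for value in values:
        stats.update(value)
    return stats


stats = analyze_in_situ(iter([2, 4, 4, 4, 5, 5, 7, 9]))  # stand-in for simulation output
print(stats.n, stats.mean, stats.variance)
```

The memory needed is three numbers regardless of how many values stream past, which is the appeal when writing every value to disk is the expensive part.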

Other payoffs are possible, Heroux says. For applications that run with sufficient precision to solve a given problem on current computers, researchers can use exascale’s extra capability to provide an optimal solution and calculate the degree of possible error. These optimization and uncertainty quantification formulations can easily grow to a thousand times the work of the original problem.

Co-design is necessary to address these challenges, Beckman says. Hardware vendors generally don’t write core programs like highly parallel file systems, so they must work with the software and application developers who do, getting their input on how chip or system architecture can help them. Co-design allows scientists, programmers, hardware vendors and others to understand the tradeoffs between software and hardware. It lets teams set targets and share responsibility.

Scientists often think of hardware, system software and applications as distinct pieces, modifying each while regarding the others as unchanging, Heroux says. Co-design integrates the parts and considers how a change in one affects the others.

With the drive to exascale, Beckman adds, the scientific computing community has realized that all three parts are interdependent. “Those three parties are all sitting at the table all the time in designing these new platforms. That’s really the exciting new way to look at co-design.”