Part of the Science at the Exascale series.

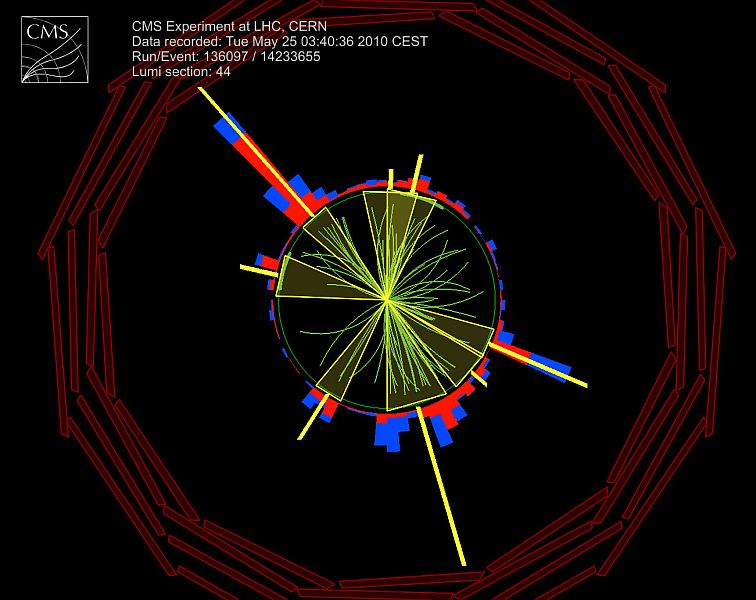

After years of planning, construction and delays, the Large Hadron Collider (LHC) is at last crashing particles into each other in its circular tunnel, 17 miles in circumference, on the border between France and Switzerland. Detectors gather information about particles created in these collisions, providing physicists a feast of data to study matter’s most fundamental components and forces.

At its peak, the LHC will be seven times more energetic than the previous most powerful accelerator. Such leaps come only about once every 20 years, says Frank Würthwein, a physics professor at the University of California, San Diego, who participates in an LHC experiment. “That change in energy allows us to probe a whole new region of nature.”

But mountains of data from the experiment will get little or no scrutiny without sufficiently powerful computers, Würthwein says. That means machines capable of an exaflops, 10^18 calculations per second (10^18 is a 1 followed by 18 zeroes), or of storing and handling an exabyte (10^18 bytes) of data. Exascale computers are about a thousand times more powerful than today’s best machines.

“My data samples are growing so large that in the near future, if I don’t get exascale computing, I’m going to be in trouble,” Würthwein says.

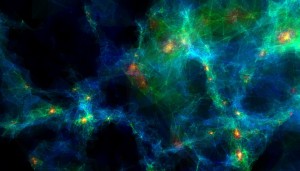

Delaunay-based volume rendering of an N-body simulation is used to study the formation of galaxy filaments and walls. Delaunay rendering triangulates a set of points so that no point in the set lies inside the circumcircle of any triangle. It interpolates across space to produce a real-time pseudo-rendering of the simulation volume. The program assigns color and transparency to individual triangles as a function of the density at their three vertices. Image by Miguel Aragon-Calvo, Johns Hopkins University.
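The defining property of a Delaunay triangulation is the "empty circumcircle" rule: the circle through a triangle's three corners contains no other point of the set. A minimal Python sketch of that test follows, written in two dimensions for simplicity (a 3D simulation volume uses tetrahedra and circumspheres instead). The function names and the four-point example are hypothetical illustrations, not the code behind the rendering above, and the brute-force check is only meant to show the rule, not how production triangulators are built.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Triangle = Tuple[int, int, int]


def orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc: positive if a, b, c run counterclockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def in_circumcircle(a: Point, b: Point, c: Point, d: Point) -> bool:
    """True if d lies strictly inside the circle passing through a, b and c."""
    # Translate so d sits at the origin; each row is (x, y, x^2 + y^2).
    rows = []
    for p in (a, b, c):
        dx, dy = p[0] - d[0], p[1] - d[1]
        rows.append((dx, dy, dx * dx + dy * dy))
    (a1, a2, a3), (b1, b2, b3), (c1, c2, c3) = rows
    # 3x3 determinant expanded along the first row.
    det = (a1 * (b2 * c3 - b3 * c2)
           - a2 * (b1 * c3 - b3 * c1)
           + a3 * (b1 * c2 - b2 * c1))
    # A positive determinant means "inside" only when abc is counterclockwise.
    if orient(a, b, c) < 0:
        det = -det
    return det > 0


def is_delaunay(points: Sequence[Point], triangles: List[Triangle]) -> bool:
    """Brute-force check that no point falls inside any triangle's circumcircle."""
    for tri in triangles:
        a, b, c = (points[i] for i in tri)
        for j, p in enumerate(points):
            if j not in tri and in_circumcircle(a, b, c, p):
                return False
    return True


# Four hypothetical points: the same quadrilateral split along either diagonal.
pts = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (0.9, 0.9)]
print(is_delaunay(pts, [(0, 1, 2), (1, 3, 2)]))  # False: (0.9, 0.9) is inside the first circle
print(is_delaunay(pts, [(0, 1, 3), (0, 3, 2)]))  # True
```

Only one of the two diagonals satisfies the rule, which is why a Delaunay triangulation of points in general position is unique: it avoids long, thin triangles, which suits interpolating density between particles.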

The same may be true of other areas of high energy physics (HEP), which focuses on the quantum world of neutrinos, muons and other constituents of matter. Besides earth-bound experiments like the LHC, HEP includes explorations of cosmic phenomena like black holes and computer models of things as large as the universe and as tiny as the quarks that make up neutrons and protons.

Supercomputers perform these simulations, store and analyze experimental results and help scrutinize data from space- and ground-based instruments.

Combining supercomputing with experimental and observational science has generated a deeper understanding of the universe and of the Standard Model that describes matter’s elementary components and the forces governing them. Yet “to date, the computational capacity has barely been able to keep up with the experimental and theoretical research programs,” says a report on “Scientific Challenges for Understanding the Quantum Universe and the Role of Computing at the Extreme Scale.”

“There is considerable evidence that the gap between scientific aspiration and the availability of computing resources is now widening,” participants at the December 2008 meeting wrote. It was one of several workshops the Department of Energy (DOE) Office of Advanced Scientific Computing Research helped sponsor on how exascale computers can address scientific challenges.

“People are a little bit fuzzy about what exascale means,” says Roger Blandford, a workshop co-chair. “Does it mean exaflops or does it mean exabytes (of data) or does it mean something else?”

Regardless, the question facing the workshop was, “Do you need that?” says Blandford, director of the Kavli Institute of Particle Astrophysics and Cosmology at Stanford University and a physics professor at the SLAC National Accelerator Laboratory. Participants decided “it would be crucially enabling,” he says.

Simulation is the dominant research tool in theoretical astrophysics and cosmology, the report says. Computing power has been similarly critical in probing high energy theoretical physics, designing large-scale HEP experiments and storing and mining data from simulations and experiments.

“It’s fair to say that high energy physicists have been all along pushing the envelope in terms of high-performance computing, but now astronomers and astrophysicists have caught up,” Blandford says.

Alex Szalay, the Alumni Centennial professor of astronomy at Johns Hopkins University, agrees: “We always max out whatever computational capabilities we can lay our hands on.”

Szalay studies how billions of galaxies are distributed through the universe and what that tells us about its early years. But rather than observing the night sky, he now spends most of his time analyzing data – including simulation results and sky surveys. That emphasis on data represents a dramatic change in scientific computation, he says.

Whereas astrophysics increasingly deals with data, theoretical high energy physics relies on successively more precise simulations of how subatomic particles interact, with each improvement requiring a huge increase in computer capability. A main focus is lattice quantum chromodynamics (QCD), the numerical study of the strong interaction within the Standard Model: the force that binds quarks into particles like protons and neutrons.

Researchers use lattice QCD to calculate many of the properties of quarks and gluons (the particles that transmit the strong force), including particle masses, decay rates and even the masses of quarks themselves, says Norman Christ, Ephraim Gildor professor of computational theoretical physics at Columbia University. Lattice QCD represents space and time as a four-dimensional grid of points, breaking the problem into pieces that parallel processing computers can work on simultaneously, says Christ, the workshop’s other co-chair.
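Lattice QCD programs commonly parallelize by domain decomposition: the four-dimensional lattice is cut into equal sub-blocks, one per processor, and neighboring processors exchange the sites on their shared faces. A minimal Python sketch of that bookkeeping follows, assuming a nearest-neighbor stencil and periodic boundaries. The lattice size, processor grid and function names are hypothetical, not taken from any particular lattice QCD code.

```python
from math import prod
from typing import Tuple

Dims = Tuple[int, ...]


def local_block(global_dims: Dims, proc_grid: Dims) -> Dims:
    """Extent of the sub-lattice each processor owns, one entry per dimension."""
    if len(global_dims) != len(proc_grid):
        raise ValueError("lattice and processor grid need the same number of dimensions")
    for extent, procs in zip(global_dims, proc_grid):
        if extent % procs:
            raise ValueError(f"{procs} processors cannot evenly split extent {extent}")
    return tuple(extent // procs for extent, procs in zip(global_dims, proc_grid))


def owner(site: Dims, global_dims: Dims, proc_grid: Dims) -> Dims:
    """Processor-grid coordinates of the processor holding a given lattice site."""
    block = local_block(global_dims, proc_grid)
    return tuple(x // b for x, b in zip(site, block))


def halo_sites(global_dims: Dims, proc_grid: Dims) -> int:
    """Sites one processor sends per nearest-neighbor exchange.

    In each split dimension, both faces of the local block go to the
    neighboring processor(s) in that direction; a face holds volume / extent
    sites. Unsplit dimensions wrap around locally and need no messages.
    """
    block = local_block(global_dims, proc_grid)
    volume = prod(block)
    return sum(2 * volume // b for b, procs in zip(block, proc_grid) if procs > 1)


lattice = (32, 32, 32, 64)  # hypothetical x, y, z, t extents
grid = (2, 2, 2, 4)         # hypothetical 64-processor grid
print(local_block(lattice, grid))            # (16, 16, 16, 16)
print(owner((17, 0, 0, 63), lattice, grid))  # (1, 0, 0, 3)
print(halo_sites(lattice, grid))             # 32768
```

Work grows with a block's volume while communication grows with its surface, so smaller blocks spend proportionally more time exchanging data: in this example each 16^4 block (65,536 sites) sends 32,768 sites per exchange, half as many as it holds.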

Würthwein, Szalay and Christ represent just three slivers of HEP research. Each uses available computers as effectively as possible, Blandford says, but “all of this research is throwing up major computational challenges.”

It will take more than one kind of exascale computer to meet the challenges the workshop laid out, Blandford says. And there are obstacles to developing those computers: taming their huge appetites for electricity, formulating algorithms to take advantage of their speed and training personnel to work with them.

The potential payoff, however, is huge.

“It’s easy to say these sorts of things,” Blandford says, “but I really believe it, that in astrophysics, cosmology, high energy physics, the discoveries that have been made over the last few decades and what is likely to happen over the next few, are science for the ages.”