For Warren Washington, climate and computers have gone together for more than 50 years. In 1958, as a master’s degree student at Oregon State College (now Oregon State University), he used a first-generation vacuum-tube machine to model a single cap cloud over an isolated mountain peak.

Washington now has more computer power at his disposal than he could possibly have dreamed of in the ’50s. As a senior scientist at the National Center for Atmospheric Research (NCAR) in Boulder, Colo., he works with colleagues to develop revolutionary models of Earth as a living planet, running them on some of the world’s most powerful computers: those of the U.S. Department of Energy.

When Washington accepts the National Medal of Science from President Obama today, the honor will highlight a remarkable three-decade relationship. Over that time, Washington and his colleagues have merged climate science with DOE high-performance computers to help change how the world understands climate and how climate scientists use computers.

The only concern most Americans had about fossil fuels in the mid-1970s was avoiding gas station lines and spiking prices. But among atmospheric scientists, fossil fuels already were at the heart of another problem: man-made climate change.

The National Academy of Sciences’ groundbreaking 1975 report “Understanding Climatic Change” began with language that still sounds fresh: “The increasing realization that man’s activities may be changing the climate … have brought new interest and concern to the problem of climatic variation. Our response to these concerns is the proposal of a major new program of research designed to increase our understanding of climatic change and to lay the foundation for its prediction.”


Washington, a member of the NAS committee that produced the report, already was on the job. Within months of joining NCAR in 1963, the ink barely dry on his doctoral thesis, he’d started to co-develop an atmospheric general circulation model. He and NCAR atmospheric scientist Akira Kasahara met daily with a small team of the center’s computer programmers to refine their simulation.

The team was thrilled a couple of years later, when their model could “generate more realistic storm systems, and we were even able to display them on a cathode ray tube device that was filmed by a camera,” Washington says.

So in 1978, Washington was a natural pick when DOE sought an NCAR researcher who could help examine the potential climate impacts of carbon dioxide emissions from burning fossil fuels. It has proved to be an enormously successful partnership, spurring one of the world’s leading climate-modeling collaborations and involving more than $40 million in DOE support, primarily from the Office of Biological and Environmental Research (BER).

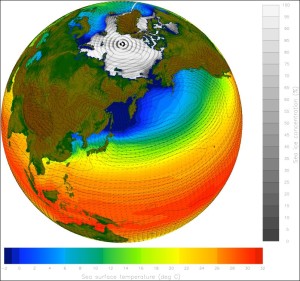

This visualization of a Community Climate System Model simulation shows several aspects of Earth’s climate. The two color scales show sea surface temperatures and sea ice concentrations. It also shows sea-level pressure and low-level winds, including warmer air moving north on the eastern side of low-pressure regions and colder air moving south on the western side of the lows. Image from the National Center for Atmospheric Research/University Corporation for Atmospheric Research.

In the early 1990s, it became clear just how pivotal a choice DOE had made. The department was pioneering the use of massively parallel high-performance computers (HPCs). Until then, most HPCs had used a vector architecture, in which a relatively small number of powerful processors each applied the same operation to long arrays of data at once. On parallel machines, computations are divided among thousands of individual processors, and their partial results are then combined.
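The divide, compute locally, then combine pattern of parallel machines can be sketched in a few lines of Python. This is a conceptual toy, not climate code: the per-point "physics" is just a sum of squares, and the names `split_domain`, `local_work`, `vector_style` and `parallel_style` are hypothetical. `vector_style` simply sweeps one operation over the whole array, standing in for a single processor working through a long array, while threads stand in for what, on a real parallel supercomputer, would be separate processors exchanging messages (typically via MPI). Because of Python's global interpreter lock, the threaded version is not actually faster here; only the structure is the point.

```python
from concurrent.futures import ThreadPoolExecutor


def split_domain(values, n_workers):
    """Divide a list into n_workers contiguous chunks of nearly equal size."""
    base, extra = divmod(len(values), n_workers)
    chunks, start = [], 0
    for rank in range(n_workers):
        # The first `extra` workers take one leftover item each.
        size = base + (1 if rank < extra else 0)
        chunks.append(values[start:start + size])
        start += size
    return chunks


def local_work(chunk):
    """Stand-in for per-processor physics: here just a sum of squares."""
    return sum(x * x for x in chunk)


def vector_style(values):
    """One worker sweeps the same operation over the entire array."""
    return local_work(values)


def parallel_style(values, n_workers):
    """Each worker handles only its own chunk; partial results are then combined."""
    chunks = split_domain(values, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partials = list(pool.map(local_work, chunks))
    # "Integrate" step: combine the partial results into one answer.
    return sum(partials)


values = list(range(1_000))
print(vector_style(values) == parallel_style(values, 8))  # True
```

Both routes give the same answer; what changes is who does the work. The hard part in practice, which this toy skips, is that climate-model chunks are not independent and must exchange boundary data every time step.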

Most climate modelers balked at the change – they’d invested years in creating algorithms, codes and models that ran on vector machines.

Washington, however, took up the challenge. He led a team to create the DOE-NCAR Parallel Climate Model – one of the world’s first advanced models for parallel supercomputers.

“Warren was a visionary in the area of parallel climate modeling,” says Andrew Weaver, the Canada Research Chair in Climate Modeling and Analysis at the University of Victoria. “While the rest of the world maintained its trajectory to run these models on [vector] serial processors, Warren and his colleagues had the vision to move towards parallelization, and now everyone does it.”

Weaver notes that this computational leadership came at a critical time.

“Moving from serial to parallel codes opened up a whole new realm of possibilities. It allowed for better representations of the physics to be incorporated, higher resolution models to be run, and for those same models to be run for longer times. Parallelization was revolutionary for climate science.”

The Parallel Climate Model paved the way for the development of the much more comprehensive Community Climate System Model, now in its fourth and fifth versions (CCSM4 and the Community Earth System Model [CESM] version 1).

These models are major improvements in two main ways: They integrate the climatic dynamics of not only the atmosphere and oceans but also land surface and sea ice; and they involve a broader collaboration between NCAR, DOE and university climate and computational scientists, drawing on greater scientific expertise and data. In this spirit of collaboration, the models are open source; anyone can download them from the Internet.

The work has had global impact.

Says Washington, “For the last U.N. Intergovernmental Panel on Climate Change (IPCC) assessment report in 2007, our group carried out the largest number of simulations of any of the groups in the world.”

Members of his group, particularly NCAR colleague Gerald Meehl, a lead author of the 2007 IPCC report, were among the scientists whose work was recognized when the IPCC shared the 2007 Nobel Peace Prize with former Vice President Al Gore.

The CCSM and CESM models were run on DOE supercomputer systems at Oak Ridge National Laboratory’s National Center for Computational Sciences (ORNL-NCCS) and DOE’s National Energy Research Scientific Computing Center (NERSC) at Lawrence Berkeley National Laboratory.

“It would have been impossible to run the simulations in time for the next IPCC assessment without these DOE HPC resources,” Washington says.


At age 74, Washington says he has no plans for retirement, quipping that his wife doesn’t want him underfoot at home. In fact, after five decades of modeling he still plays a lead role in pushing the boundaries of both climate science and computational modeling in collaboration with DOE scientists and resources.

Today, this includes finding what he calls “the computational sweet spot” for climate models on ORNL-NCCS’ Jaguar supercomputer, the nation’s most powerful. That spot, Washington explains, “is the optimal intersection of real-time integration rate and computational efficiency. If the execution rate of the computer program does not increase past a certain number of processors, then it doesn’t make sense to use more than that number of processors.”
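Washington's "sweet spot" can be illustrated with a simple performance model. The sketch below is an assumption, not a measurement of any real climate code: it treats run time on p processors as a serial part that never speeds up (in the spirit of Amdahl's law), plus a parallel part that shrinks as 1/p, plus a hypothetical communication cost that grows with log2(p). The parameter values and function names are made up for illustration. Execution rate is the reciprocal of run time, and the sweet spot is the processor count at which that rate stops increasing.

```python
import math


def run_time(p, serial_fraction, comm_cost):
    """Modeled wall-clock time on p processors, normalized so one processor takes 1.0."""
    serial = serial_fraction                   # never speeds up
    parallel = (1.0 - serial_fraction) / p     # divides evenly among processors
    communication = comm_cost * math.log2(p)   # hypothetical overhead that grows with p
    return serial + parallel + communication


def execution_rate(p, serial_fraction, comm_cost):
    """Work completed per unit of wall-clock time."""
    return 1.0 / run_time(p, serial_fraction, comm_cost)


def sweet_spot(serial_fraction, comm_cost, max_procs):
    """Power-of-two processor count (up to max_procs) with the highest execution rate."""
    best_p = 1
    best_rate = execution_rate(1, serial_fraction, comm_cost)
    p = 2
    while p <= max_procs:
        rate = execution_rate(p, serial_fraction, comm_cost)
        if rate > best_rate:
            best_p, best_rate = p, rate
        p *= 2
    return best_p


# Made-up parameters: 0.1% serial work, small per-doubling communication cost.
print(sweet_spot(serial_fraction=0.001, comm_cost=0.0005, max_procs=131_072))  # 1024
```

With these invented parameters the rate peaks at 1,024 processors; doubling to 2,048 makes the program slightly slower, so throwing a machine with more than 100,000 processors at this hypothetical model would mostly waste its allocation.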

This is a critical issue for the efficient use of Jaguar, which has more than 100,000 processors, because most climate models were designed to run on fewer processors.

To maximize efficiency on Jaguar and other DOE supercomputers, Washington’s group is working with DOE researchers, led by ORNL’s David Bader, to develop and use frameworks like HOMME, the High Order Method Modeling Environment. Sandia National Laboratories’ Mark Taylor has been extensively involved in developing HOMME, along with researchers at ORNL, NCAR and other DOE laboratories.

“For our standard version of the CCSM3 you can’t put 100,000 processors on the problem,” Washington says. “You don’t get a higher degree of efficiency.”

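HOMME, however, turns the surface of the Earth into the equivalent of an immensely detailed jigsaw puzzle of tens of thousands of pieces. Each piece can be computed largely independently on its own processor, exchanging information only with its neighbors along their shared edges, and the results are then integrated with the rest.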
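The "jigsaw puzzle" can be made concrete. HOMME is built on a cubed-sphere mesh: the globe is projected onto the six faces of a cube, and each face is tiled into ne × ne elements, giving 6·ne² pieces in all. The Python sketch below counts those pieces and deals them out to processors in contiguous, nearly equal blocks. That block partitioning is a hypothetical simplification chosen for brevity, not HOMME's actual partitioner, and the function names are illustrative only.

```python
def cubed_sphere_elements(ne):
    """Element count for a cubed-sphere mesh with ne x ne elements on each of 6 faces."""
    return 6 * ne * ne


def partition(n_elements, n_procs):
    """Deal element indices to processors in contiguous, nearly equal blocks (hypothetical scheme)."""
    base, extra = divmod(n_elements, n_procs)
    blocks, start = [], 0
    for rank in range(n_procs):
        # The first `extra` processors take one leftover element each.
        size = base + (1 if rank < extra else 0)
        blocks.append(range(start, start + size))
        start += size
    return blocks


def load_summary(ne, n_procs):
    """Return (largest number of elements on any processor, number of idle processors)."""
    sizes = [len(block) for block in partition(cubed_sphere_elements(ne), n_procs)]
    return max(sizes), sizes.count(0)


print(cubed_sphere_elements(120))   # 86400
print(load_summary(120, 100_000))   # (1, 13600)
```

With ne = 120 the mesh has 86,400 pieces, so under this scheme 13,600 of 100,000 processors would receive no work at all. The number of pieces caps how many processors a decomposition like this can keep busy, which is one reason finer meshes and scalable frameworks matter on machines of Jaguar's size.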

“With HOMME, our models will run very efficiently on Jaguar,” he says.

The extended NCAR-DOE-university collaboration has been awarded 70 million processor hours on Jaguar for the current year, along with 30 million hours at NERSC and 40 million hours on Argonne National Laboratory’s Blue Gene/P supercomputer. As a result, Washington says, “we’re on track to provide the current IPCC assessment with one of the largest groups of simulations.”
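For a sense of scale, the allocations can be added up and the Jaguar share converted into time on the whole machine. The second line assumes, purely for round numbers, that Jaguar's "more than 100,000 processors" means exactly 100,000; with more processors, the full-machine time would be proportionally shorter.

```latex
\begin{aligned}
70 + 30 + 40 &= 140\ \text{million processor-hours in total}\\
\frac{70 \times 10^{6}\ \text{processor-hours}}{1 \times 10^{5}\ \text{processors}} &= 700\ \text{hours} \approx 29\ \text{days of the entire machine}
\end{aligned}
```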

In his autobiography, Odyssey in Climate Modeling, Global Warming, and Advising Five Presidents, Washington asks whether increasingly better climate models will help the public and policymakers understand climate change.

“I believe the answer is yes,” he writes (emphasis his).

Where others have turned away from the public debate in despair or frustration, Washington continues to work – “selflessly,” Canadian climate modeler Weaver says – for greater understanding.

In 2008 Washington led a workshop on “Challenges in Climate Change Science and the Role of Computing at the Extreme Scale,” sponsored by BER and DOE’s Office of Advanced Scientific Computing Research (ASCR). The event brought together about 100 researchers, divided between climate scientists and computing experts.

“In the past decade, DOE has especially tried to tie these two communities together – and I think it’s worked out well,” Washington says.

So well, in fact, that he’s working with others to create exascale climate models – ones that will run on computers capable of a million trillion operations per second – once again redefining the field.
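The arithmetic behind "exascale" is straightforward. Taking a petascale machine of Jaguar's class as roughly 10^15 operations per second (an order-of-magnitude assumption, not a benchmark figure), an exascale system represents the thousandfold jump Washington anticipates:

```latex
\underbrace{10^{6}}_{\text{million}} \times \underbrace{10^{12}}_{\text{trillion}} = 10^{18}\ \text{operations per second},
\qquad
\frac{10^{18}}{10^{15}} = 10^{3}
```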

“With the promise of exascale computing,” Washington says, “we’re in the midst of a conversion from climate models to Earth system models, ones that include not just physical processes but biological ones as well. I envisage Earth system models that incorporate virtually all of the processes that interplay in the climate and Earth system and that we’ll execute such models on computer systems with more than a thousand times the present capability.”

A statement all the more impressive when spoken by a man whose first computer model, five decades ago, was of a single cap cloud over an isolated mountaintop.