As the Great Long Island Hurricane of 1938 ripped apart houses and turned towns into islands, meteorologists recorded crucial data – the sea-level pressure leading up to the terrible event. Alone near the South Pole in 1934, Admiral Richard Byrd documented his ice-bound despair. He also dutifully recorded the surface air pressure every hour.

Storms and blizzards made headlines throughout the late 19th and 20th centuries. But it’s the humble records of pressure lows and highs during those unforgettable events that scientists are harvesting now. They hope a computationally intense reanalysis of weather over the past 150 years will tell them whether today’s storms are more extreme and whether climate prediction models can be trusted to capture such extremes.

“It’s that connection to our scientific forbears that makes this project so exciting,” says Gil Compo, who leads the 20th Century Reanalysis Project for the National Oceanic and Atmospheric Administration (NOAA).

“These guys went out at tremendous risk to life and limb” to collect the data. “Now, in ways they never could have imagined, we are going to use data from those dedicated meteorologists to figure out how much weather and climate have changed over the past 150 years.”

Compo and 28 international collaborators hope to gather pressure readings from the surface and from sea level on five continents for every six-hour period going back to 1850. They have cadged records from Jesuit monks, polar explorers, independent observers and trained meteorologists.

“We sent out the call: ‘We need more surface data. Do you have it?’” to universities and weather bureaus. Colleagues combed the records of Google and Microsoft, Compo says.


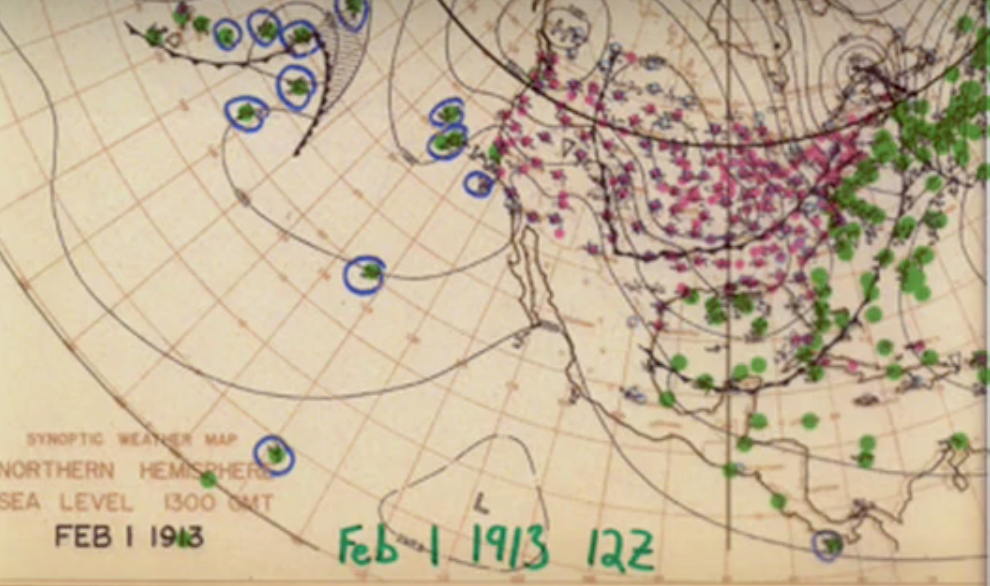

Before airplanes and weather balloons, meteorologists had just surface temperatures, wind speeds and barometric pressures to make forecasts.

The Reanalysis Project aims to use just one of those old tools, surface air pressure, to map the weather over 150 years, and in so doing to determine whether models of past and future climate reproduce the kinds of events that actually happened.

For example, the hurricane known as the Long Island Express roared inland from the Atlantic in 1938, killing up to 800 people and damaging or destroying 57,000 homes in New York, Connecticut, Rhode Island, Massachusetts and New Hampshire.

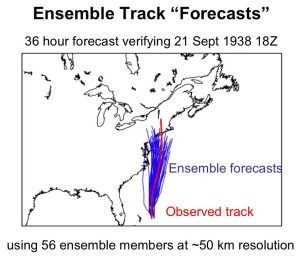

Can air-pressure readings from 1938, by themselves, indicate that a hurricane was inevitable and that it would be one for the ages? Can those readings help show that recent hurricanes are more vicious than even the worst ones of the early 20th century? It’s a daunting challenge.

Compo and his principal collaborators, Jeffrey Whitaker of NOAA and Prashant Sardeshmukh of the Climate Diagnostic Center at the University of Colorado’s Cooperative Institute for Research in the Environmental Sciences, believe new data-assimilation methods can generate highly reliable estimates of conditions high in the air from surface pressure readings alone. If that kind of analysis can be done for today’s weather, why not for historical weather, provided there are enough ground-level readings?

Simulated possible tracks (blue) of the New England Hurricane of 1938, based on air-pressure readings. Seventy-one years ago this month, the so-called Long Island Express killed 800 people and left a swath of destruction through New York, Connecticut, Rhode Island and Massachusetts. The model shows good agreement with the hurricane’s actual path (red).

Version one of the project, covering 1908 to 1958, took the equivalent of 33,000 processors running nonstop for three months, Compo says. The model calculated four weather maps a day, on a grid with points 200 kilometers apart around the world. Computing each year took the equivalent of 224 processors on Bassi, the IBM Power5, and Seaborg, the IBM Power3 (since decommissioned), at the Department of Energy’s National Energy Research Scientific Computing Center (NERSC), based at Lawrence Berkeley National Laboratory.
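For a sense of scale, the short sketch below estimates how many 200-kilometer grid columns cover the globe and how many maps a year four maps a day implies. It treats each cell as a 200 × 200 km square on a spherical Earth of radius 6,371 km; that is a simplifying assumption for back-of-the-envelope arithmetic, not a description of the project’s actual model grid.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; treating Earth as a sphere is an approximation


def grid_columns(spacing_km: float) -> int:
    """Approximate number of square cells of side `spacing_km` covering the sphere."""
    surface_area_km2 = 4.0 * math.pi * EARTH_RADIUS_KM ** 2
    return round(surface_area_km2 / spacing_km ** 2)


def maps_per_year(maps_per_day: int = 4, days: float = 365.25) -> int:
    """Number of six-hourly analysis times in an average year."""
    return round(maps_per_day * days)


columns = grid_columns(200.0)  # roughly 12,750 columns at 200-km spacing
maps = maps_per_year()
print(f"{columns} grid columns, {maps} maps per year")
```

Because the count scales with the inverse square of the spacing, the same function shows that a 50-kilometer grid would need about 16 times as many columns.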

Compo’s inspirations for the project were the weathermen and meteorologists of the late 1930s. To support the Allied war effort, they gathered data from the previous 40 years to make predictions about where and when shipping lanes would likely ice over.

They used punch cards filled with data and drew by hand the highs and lows on weather maps, one per day going back four decades.

“That was the first retrospective analysis,” he says.

A generation before that, some early pilots spiraled up into the atmosphere to record temperatures and air pressures. In the 1930s, weather balloons also became an important meteorological tool, recording changing air pressure and temperature at different elevations.

The reanalysis project scientists, using only their surface air-pressure data, tried to predict what the weather balloons should have recorded on those days in the 1930s, 1940s and 1950s.

An analysis shows an astonishing 0.97 correlation between the predictions and what the balloons actually recorded. If such close correlations hold up in new versions of the project, scientists will have a database going back 150 years that compares apples to apples.
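The article does not say which statistic produced the 0.97 figure; assuming it is the common Pearson correlation coefficient, the sketch below shows how such a number is computed from paired series. The reconstructed and balloon values are invented for illustration, not project data.

```python
import math


def pearson_r(predicted: list[float], observed: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(predicted) != len(observed) or len(predicted) < 2:
        raise ValueError("need two series of equal length, at least 2 values each")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_o = sum(observed) / n
    # Co-variation of the two series around their means.
    cov = sum((p - mean_p) * (o - mean_o) for p, o in zip(predicted, observed))
    spread_p = math.sqrt(sum((p - mean_p) ** 2 for p in predicted))
    spread_o = math.sqrt(sum((o - mean_o) ** 2 for o in observed))
    return cov / (spread_p * spread_o)


# Hypothetical numbers only: upper-air heights reconstructed from surface
# pressure versus what a weather balloon might have recorded.
reconstructed = [5520.0, 5580.0, 5490.0, 5610.0, 5550.0]
balloon = [5530.0, 5570.0, 5500.0, 5600.0, 5560.0]
print(round(pearson_r(reconstructed, balloon), 3))
```

A value of 1 means the two series rise and fall in perfect lockstep, so 0.97 indicates the reconstruction tracks the balloon record very closely.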

With that, they can learn two more things: First, are there more extreme weather events now – more incidents of extreme low pressure, for example? “We can compare the intensity and frequency of storms in the 19th century to the late 20th century,” Compo says. And, second, do today’s best climate models capture big storms of the past, and do they correlate with actual historical events? If so, scientists will have more confidence in those models, which predict accelerated global warming in the coming decades. If not, they will have to tweak the models to more accurately predict future climate.
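One simple way to frame the “intensity and frequency” comparison is to count how often pressure falls below some extreme threshold in each era and normalize by the era’s length. The sketch below does that; the 970 hPa threshold, the period boundaries and the storm list are all hypothetical choices for illustration, not the project’s definitions or data.

```python
# Hypothetical threshold for an "extreme" low; the article does not define one.
EXTREME_LOW_HPA = 970.0


def extreme_low_rate(records, periods, threshold=EXTREME_LOW_HPA):
    """Extreme lows per year in each named period.

    records: iterable of (year, minimum_pressure_hPa) pairs, e.g. one per storm.
    periods: mapping of label -> (first_year, last_year), both inclusive.
    Dividing by period length keeps periods of different lengths comparable.
    """
    counts = {label: 0 for label in periods}
    for year, pressure in records:
        if pressure >= threshold:
            continue  # not deep enough to count as extreme
        for label, (first, last) in periods.items():
            if first <= year <= last:
                counts[label] += 1
    return {label: counts[label] / (last - first + 1)
            for label, (first, last) in periods.items()}


# Made-up storm minima for illustration only.
storms = [(1875, 965.0), (1888, 972.0), (1893, 960.0),
          (1985, 958.0), (1991, 968.0), (1999, 962.0)]
periods = {"late 19th century": (1871, 1900), "late 20th century": (1971, 2000)}
print(extreme_low_rate(storms, periods))
```

A real comparison would also have to account for the sparser 19th-century observing network, which is part of why a consistent reanalysis matters.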

The researchers also hope to learn the cause of important 20th-century climate events, how today’s climate events compare with those of the past, the role of human industry in recent weather events and how the 1930s drought compares with later droughts.

With the data-crunching prowess of supercomputers, the scientists analyze the highs and lows from each day in history, from each weather-reporting station, for each six-hour period.

On a March day two generations ago in the Kansas City area, for example, there may have been a low over Parkville, but a high over Liberty, and some more lows around Excelsior Springs, Olathe and Independence.


What will that map look like in six hours?

It’s anyone’s guess, but the fastest guesses are made by a supercomputer, in this case Franklin, the Cray XT4 at NERSC.

By then, Compo says, “maybe this low moved over here a bit and that one moved way over there. Maybe a really low pressure system developed that was different than our prediction of just a moderate, mediocre anomaly.”

If the scientists want to create weather maps for, say, Jan. 1, 1891, they start on Nov. 1, 1889. They incorporate weather maps generated in 56 simulations of Nov. 1, Compo says, containing all the data needed to run the numerical weather prediction model: winds, temperatures, humidity, ozone and pressure throughout the troposphere and stratosphere.

The 56 maps will diverge greatly from each other and from actual observations of surface and sea level pressure from midnight on Nov. 1, 1889.

Slowly, meticulously, the computer narrows the gap between each simulated map and what the surface pressure readings indicate the weather actually was.

The scientists use a mathematical technique called the Kalman filter to combine the observations and the maps and make new maps that are much closer to what actually happened on Nov. 1, 1889, at midnight, 6 a.m., noon and so forth.

But the maps are still a long way from reality.

“We combine these guesses with the actual pressure observations” from the early morning hours of Nov. 1, “to make the 56 maps that are closest to what actually happened,” Compo says.
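Because the method works on a set of 56 maps, the natural form of the Kalman filter here is an ensemble version. The sketch below assumes an ensemble square-root Kalman filter and shows the update for a single observation. Each hypothetical member holds just two numbers, a surface pressure and an upper-air value; a surface reading nudges both, because the ensemble itself supplies the statistical link between them. This is an illustration under those assumptions, not the project’s code.

```python
import math
from statistics import mean, variance


def covariance(a, b):
    """Sample covariance (n - 1 denominator) of two equal-length sequences."""
    ma, mb = mean(a), mean(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def ensrf_update(ensemble, obs, obs_var, obs_index=0):
    """Assimilate one observation of state component `obs_index`.

    ensemble: list of members, each a list of floats (one state vector).
    obs: observed value; obs_var: observation-error variance R.
    Returns a new ensemble and leaves the input untouched.
    """
    n_vars = len(ensemble[0])
    columns = [[member[k] for member in ensemble] for k in range(n_vars)]
    hx = columns[obs_index]          # each member's estimate of the observed value
    hx_mean = mean(hx)
    hph = variance(hx)               # ensemble spread of the observed quantity
    # Kalman gain per state component: cov(x_k, Hx) / (HPH^T + R).
    gain = [covariance(col, hx) / (hph + obs_var) for col in columns]
    # Square-root factor: shrinks the spread by the right amount without
    # adding random noise to the observation.
    alpha = 1.0 / (1.0 + math.sqrt(obs_var / (hph + obs_var)))
    innovation = obs - hx_mean       # how far the observation is from the guess
    means = [mean(col) for col in columns]
    updated = []
    for member, hxi in zip(ensemble, hx):
        new_member = []
        for k in range(n_vars):
            new_mean = means[k] + gain[k] * innovation
            deviation = (member[k] - means[k]) - alpha * gain[k] * (hxi - hx_mean)
            new_member.append(new_mean + deviation)
        updated.append(new_member)
    return updated


# 56 hypothetical members: [surface pressure (hPa), an upper-air value that
# tends to rise and fall with it]. Made-up numbers, not historical data.
prior = [[1000.0 + 0.5 * (i - 27.5), 5500.0 + 4.0 * (i - 27.5) + (i % 3)]
         for i in range(56)]
posterior = ensrf_update(prior, obs=990.0, obs_var=4.0)
print(mean(m[0] for m in posterior), mean(m[1] for m in posterior))
```

After the update, the members cluster near the observed pressure, their spread shrinks, and the unobserved upper-air value shifts in the same direction.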

The model then carries these maps forward six hours, and the resulting guesses are compared with the actual readings taken at that time.

“Instead of just a random set of possibilities, the guesses evolve closer to what really happened,” Compo says. “We change our model from the guess. That gives us our weather map. We keep repeating this process for the next 14 months.”
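The repeat-every-six-hours cycle can be illustrated with a single number instead of a whole weather map. The sketch below is a toy scalar Kalman filter, an assumed simplification: each step forecasts six hours ahead (forecast uncertainty grows), then blends in that time’s observation (uncertainty shrinks). The first guess, the error variances and the steady “true” pressure are hypothetical. The cycle count covers the 14 months from Nov. 1, 1889, to Jan. 1, 1891.

```python
from datetime import datetime, timedelta

SIX_HOURS = timedelta(hours=6)


def count_cycles(start: datetime, end: datetime) -> int:
    """Number of six-hourly analysis cycles from `start` up to (not including) `end`."""
    return int((end - start) / SIX_HOURS)


def run_cycles(observations, obs_var, first_guess, first_var,
               model_coeff=1.0, model_var=1.0):
    """Toy scalar forecast/analysis loop.

    Each step forecasts with x -> model_coeff * x, then blends the forecast
    with that time's observation using the Kalman gain.
    Returns a list of (analysis, analysis_variance) pairs.
    """
    x, p = first_guess, first_var
    history = []
    for y in observations:
        x_f = model_coeff * x                   # six-hour forecast
        p_f = model_coeff ** 2 * p + model_var  # forecast uncertainty grows
        gain = p_f / (p_f + obs_var)            # weight given to the observation
        x = x_f + gain * (y - x_f)              # analysis
        p = (1.0 - gain) * p_f                  # analysis uncertainty shrinks
        history.append((x, p))
    return history


n = count_cycles(datetime(1889, 11, 1), datetime(1891, 1, 1))  # the 14-month spin-up
# Hypothetical setup: a badly wrong first guess and a steady "true" pressure.
history = run_cycles([1000.0] * n, obs_var=4.0, first_guess=1030.0, first_var=400.0)
print(n, history[-1])
```

Within a few dozen cycles the wrong first guess has been forgotten, which is the point of the running start.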

By Jan. 1, 1891, “the maps are very good, and closely reflect what actually happened at that time.”

The reanalysis restarts every half-decade, each time going back 14 months to get a running start at winnowing the guesses.
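The calendar arithmetic behind these restarts is simple to sketch. The code below computes the date 14 months before the first map a stream keeps (Jan. 1, 1891, maps need a start on Nov. 1, 1889) and lays out one stream every five years. The assumption that each stream keeps maps from Jan. 1 of a year on a five-year boundary is hypothetical; the article gives only the 1891 example and the half-decade spacing.

```python
from datetime import date

SPINUP_MONTHS = 14  # the running start described in the article


def months_before(d: date, months: int) -> date:
    """The date `months` calendar months before `d`, keeping the day of month.

    Assumes that day exists in the target month (always true for day 1).
    """
    total = d.year * 12 + (d.month - 1) - months
    return date(total // 12, total % 12 + 1, d.day)


def stream_schedule(first_year: int, last_year: int, step_years: int = 5):
    """(spin-up start, first kept map) for each stream.

    Hypothetical layout: a new stream every `step_years` years, each keeping
    maps from Jan. 1 of its start year and spinning up 14 months earlier.
    """
    return [(months_before(date(year, 1, 1), SPINUP_MONTHS), date(year, 1, 1))
            for year in range(first_year, last_year + 1, step_years)]


for spinup, kept in stream_schedule(1891, 1901):
    print(f"spin up from {spinup}, keep maps from {kept}")
```

Because each stream starts independently, the streams can also run side by side on different processors rather than one after another.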

To see if predictions matched reality, the scientists drew on a storehouse of great meteorological events.

From the 1920s to the 1940s, for example, there was a dramatic warming in the Arctic. Was that caused by human production of carbon dioxide? Most scientists say no, that it was a natural fluctuation that lasted a couple of decades.

Over the past 20 years, the Arctic has lost a tremendous amount of sea ice, most likely attributable to human-caused global warming.

How do the events differ? What explanations do the surface-level pressure readings hold for why one event probably was a natural fluctuation, the other human-caused?

The researchers are also eager to comb the air pressure data for clues to a dramatic 1895 cold-air outbreak that froze the southern orange crop and gave a boost to Miami, which lies far enough south to weather even the coldest snaps.

And they’ll examine the air pressures leading up to the Tri-State Tornado of 1925. “We can’t get a tornado” on the weather maps because the grid points are 200 kilometers apart, Compo says. “But we can reconstruct the large-scale conditions, see if it was moister than usual, for example.”

The DOE’s INCITE program (for Innovative and Novel Computational Impact on Theory and Experiment) provided the project with 2.8 million processor hours at NERSC in 2008 and with 1.1 million hours at Oak Ridge National Laboratory for 2009. NOAA’s Climate Program Office also has provided support.

Rob Allan, who manages the Atmospheric Circulation Reconstruction over the Earth (ACRE) initiative of the United Kingdom’s Met Office, is a collaborator. Through ACRE, the researchers are recovering data from the South Pole expeditions of Scott, Ross, Byrd, Shackleton, Amundsen and others.

For the Arctic region, they are using data recorded by explorers who tried to find the lost Franklin expedition. “They took pressure and temperature readings,” Compo says. “We’ll take their pressure observations from wherever they reported themselves as being. We’ll combine those with all the others taken that day within six hours and we’ll get the model.”
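Folding scattered explorer readings into the six-hourly maps means deciding which analysis time each observation belongs to. The sketch below assumes a simple rule, not necessarily the project’s: assign each reading to the nearest of 00, 06, 12 or 18 o’clock, so every reading falls within three hours of its map time. The readings themselves are invented.

```python
from datetime import datetime, timedelta

SIX_HOURS = timedelta(hours=6)


def nearest_analysis_time(obs_time: datetime) -> datetime:
    """Round an observation time to the nearest 00/06/12/18 analysis time.

    Readings exactly three hours from two analysis times go to the later one.
    """
    midnight = obs_time.replace(hour=0, minute=0, second=0, microsecond=0)
    offset = obs_time - midnight
    slot = int((offset + SIX_HOURS / 2) / SIX_HOURS)
    return midnight + slot * SIX_HOURS  # slot 4 rolls over to the next midnight


def bin_observations(observations):
    """Group (time, pressure_hPa) pairs by their nearest analysis time."""
    bins = {}
    for obs_time, pressure in observations:
        bins.setdefault(nearest_analysis_time(obs_time), []).append(pressure)
    return bins


# Hypothetical readings from one expedition log (made-up values).
readings = [
    (datetime(1850, 3, 1, 4, 0), 1008.2),
    (datetime(1850, 3, 1, 7, 30), 1006.9),
    (datetime(1850, 3, 1, 21, 30), 1001.4),
]
for when, values in sorted(bin_observations(readings).items()):
    print(when, values)
```

Each bin then supplies the observations that the filter combines with that time’s ensemble of maps.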

Version two of the model is in production and will cover 1871 to 2009. It runs on Franklin and on Jaguar, the Cray XT5 at Oak Ridge. Version two also draws on extra inputs, such as solar variability, carbon dioxide concentrations and volcanic aerosols, to help reconstruct the most accurate historical weather maps.

A Surface Input Reanalysis for Climate Applications will begin in 2011 and cover the years 1850 to 2011. It also will run on Jaguar and produce maps at 50-kilometer intervals rather than 200.

“To double the resolution we need 10 times the computer power,” Compo says. “And we want to quadruple the resolution. We’ll need tens of thousands of processors.”
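Compo’s “10 times” for doubling the resolution is in line with a common rule of thumb, which the sketch below assumes: halving the grid spacing quadruples the number of horizontal grid points and, for numerical stability, roughly halves the time step, so cost grows as the cube of the resolution factor. Holding vertical levels, ensemble size and input/output fixed is a simplification; adding any of them pushes the multiplier higher.

```python
def relative_cost(resolution_factor: float, exponent: int = 3) -> float:
    """Rough compute multiplier when grid spacing shrinks by `resolution_factor`.

    Assumes cost ~ (horizontal grid points, two dimensions) x (time steps),
    i.e. resolution_factor ** 3. Vertical levels, ensemble size and I/O are
    held fixed, which is a simplification.
    """
    return resolution_factor ** exponent


for factor in (2, 4):
    print(f"{factor}x finer grid -> about {relative_cost(factor):.0f}x the computing")
```

Under this assumption, doubling costs about 8 times as much, close to Compo’s estimate, and going from 200-kilometer to 50-kilometer spacing (a factor of 4) costs about 64 times as much, which is why tens of thousands of processors come into play.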

To test the higher-resolution project the researchers will start with the 1938 hurricane.

An ambitious version-three reanalysis should be done by 2012.

“This will give us the ability to compare how our state-of-the-art model correlates with historical weather data,” Compo says. “It should give us confidence in our understanding of how our weather will change in future decades.”