Turbulence has confounded physicists for ages. Richard Feynman called turbulence “the most important unsolved problem in classical Newtonian physics.” Werner Heisenberg was once asked if he had any questions for God. He replied, “Why relativity? And why turbulence?”

Turbulence describes fluid flow dominated by eddies – patches of swirl that can range in size from the tiny vortices around a hummingbird’s wings to a raging hurricane.

Combustion is a particularly perplexing form of turbulence, says George Catalano, a mechanical engineering professor at the State University of New York (SUNY) at Binghamton. “We have no exact mathematical solution to even the simplest of turbulent flows, and combustion represents some of the most complicated turbulent flows,” says Catalano, an expert in fluid mechanics.

It should be no surprise, then, that simulating combustion takes massive computational power, supplied largely through DOE’s INCITE program (Innovative and Novel Computational Impact on Theory and Experiment) – and by any other means necessary.

Jacqueline Chen and Joe Oefelein say their teams at Sandia National Laboratories’ Combustion Research Facility (CRF) in Livermore, Calif., used an unprecedented amount of computer time – 30 million processor hours on Jaguar, a Cray XT computer at Oak Ridge National Laboratory (ORNL) that has been rated the world’s most powerful for open science. With more than 181,000 AMD Opteron processor cores, Jaguar is capable of 1.64 quadrillion mathematical calculations a second.

“CRF’s focus has been on one-of-a-kind flame simulations using one-of-a-kind supercomputers that are not available to the wider research community,” says aerospace engineering professor Suresh Menon, a Sandia collaborator who directs the Georgia Institute of Technology Computational Combustion Laboratory in Atlanta.

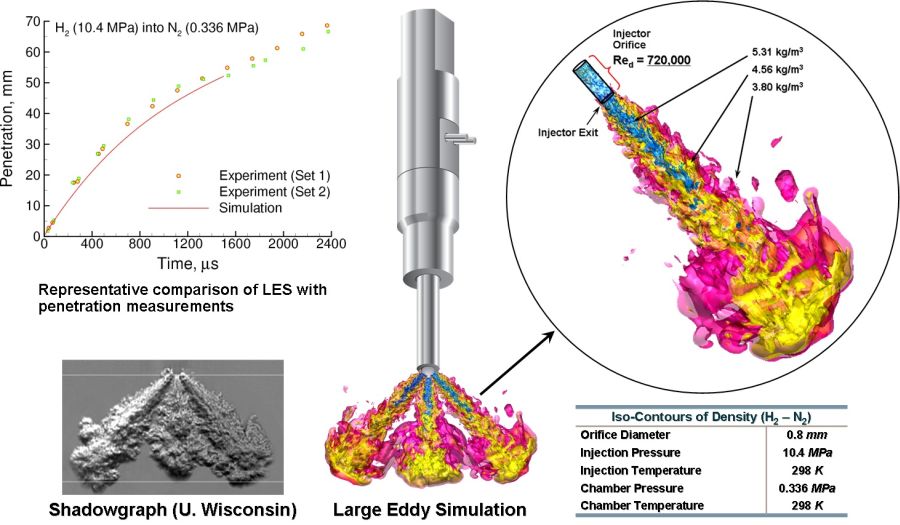

This representative large eddy simulation (LES) shows a high-pressure injector used for a hydrogen-fueled internal combustion engine under study at the Combustion Research Facility at Sandia National Laboratories. The graph at the top left compares penetration measurements made in experiments with predicted results. At bottom left is a shadowgraph image showing the transient structure of the turbulent jets. Compare that with the LES simulation at the center showing the complete computational domain – an actual three-orifice injector used in the engine. At the right is an enlargement of one of the jets at an instant in time showing the turbulent structure in both the injector orifice and the ambient environment.

Chen, Oefelein and Ramanan Sankaran at ORNL are running direct numerical simulations (DNS) and large eddy simulations (LES) on Jaguar to model the complex air, heat and chemical interactions typically encountered in internal combustion engines.

Chen and her team study how controlling pressure and ignition can stabilize different flames burning a range of fuels. “We are using DNS on Jaguar to model a jet flame at elevated pressures,” she says. “In the past, we have modeled hydrogen and ethylene-air flames and a flame fueled by n-heptane, a diesel surrogate. In the future, we will model dimethyl ether and ethanol flames.”

Oefelein’s team uses LES to investigate turbulent flow in an actual internal combustion engine under real operating conditions.

“Large eddy simulation has been a major scientific breakthrough over the past decade in the combustion community,” he says. “It provides the mathematical formalism to treat turbulent flow over multiple dimensions in a computationally feasible manner.”

Together, LES and DNS provide a more complete picture of internal combustion. “DNS and LES are complementary,” Oefelein says. “LES captures large-scale combustion processes, whereas DNS captures fine, small-scale fuel mixing and chemistry.”

Applied to a fluid, Newton’s second law – that the force on an object is equal to its mass times its acceleration – becomes the Navier-Stokes equation, which describes the flow of liquids and gases subject to viscosity and pressure.
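For reference, one common textbook form of the equation, written for an incompressible fluid of constant density ρ and constant viscosity μ, is sketched below. This simplified form is an illustration only and is an assumption here: combustion codes generally solve compressible, reacting versions with additional equations for energy and chemical species, which the article does not spell out.

```latex
\rho \left( \frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u} \cdot \nabla)\,\mathbf{u} \right) = -\nabla p + \mu \nabla^{2} \mathbf{u}, \qquad \nabla \cdot \mathbf{u} = 0
```

The left side is mass per unit volume times the acceleration of a fluid parcel with velocity u; the right side collects the pressure and viscous forces acting on it.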

Direct numerical simulation, Chen explains, “is a high-accuracy, high-fidelity approach to solving the Navier-Stokes equation” at small scales where turbulence isn’t much of a factor.

In the flows that govern combustion, “the fuel and the oxidizer (oxygen from air) have to be molecularly mixed before they can react,” Chen says. To get a complete picture, the simulation must consider this so-called “fine-scale mixing,” a DNS specialty that “requires tens of millions of hours on a petascale computer and generates hundreds of terabytes of data.”

Desktop computers typically move kilobyte- and megabyte-sized information – roughly 1,000 and 1 million bytes, respectively. But Jaguar can handle bytes by the trillions (tera-) and quadrillions (peta-).

“The supercomputers available to DOE are at the leading edge,” Menon says, and it takes leading-edge software to run them. Chen’s team used S3D, a software code developed at Sandia, to achieve what she calls “an unprecedented level of quantitative detail” simulating a so-called lifted flame.

Most people have probably seen a lifted flame, which is produced when cold fuel and hot air mix and ignite in a high-speed jet. Lifted flames are keystones of advanced combustion technology and float just above the burner, reducing thermal stress on the nozzle and, in theory, burning fuel cleanly enough that emissions are mostly absent.

But lifted flames also can easily blow out if the turbulent mixing of fuel and air isn’t just right. Modeling a variety of burning fuels under varying conditions is a first step toward stabilizing a lifted flame and achieving something of an oxymoron: stabilized turbulence.

Chen and her team started by modeling lifted flames burning ethylene, a simple, two-carbon molecule considered a precursor to simulating the more complicated hydrocarbons in most transportation fuels. Using a three-dimensional grid with more than a billion points, each spaced 15 microns apart, the simulation generated more than 120 terabytes of data, 10 times more than the Library of Congress holds.
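To get a feel for those figures, the rough arithmetic below works out how large such a grid would be and how much data it implies per grid point. It is only a back-of-the-envelope sketch: it assumes a cubic grid of exactly one billion points and decimal terabytes, neither of which the article states.

```python
# Back-of-the-envelope sketch; the cubic-grid shape is an assumption.
grid_points = 1.0e9      # "more than a billion points"
spacing_m = 15e-6        # 15 microns between neighbouring points
data_bytes = 120e12      # "more than 120 terabytes", taken as decimal TB

# Points along one edge if the grid were a perfect cube.
points_per_side = round(grid_points ** (1 / 3))

# Physical edge length of that cube, in millimetres.
side_length_mm = points_per_side * spacing_m * 1e3

# Stored data per grid point, summed over all saved time steps and variables.
bytes_per_point = data_bytes / grid_points

print(points_per_side, side_length_mm, bytes_per_point)
```

Under these assumptions the simulated volume is only about 15 millimetres on a side, which illustrates why resolving the finest scales of a flame is so expensive.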

Such high-level data storage is a constant challenge, so Chen’s team stored their model on the Oak Ridge Lustre system, a 284-terabyte bank. They also will use Lens, a cluster of Linux-operating processors holding a maximum 677 terabytes, and the High-Performance Storage System, which holds about 3 quadrillion bytes.

DNS simulations generate boatloads of remarkably precise data, but eventually break down as a dimensionless ratio called the Reynolds number rises.

The Reynolds number compares the inertia that keeps a fluid moving with the fluid’s thickness, or viscosity, which resists that motion. Turbulence and Reynolds number are directly related. Thick pancake syrup typically flows at a low Reynolds number, with an equally low likelihood of turbulence. Smoke, on the other hand, typically flows at a high Reynolds number, with a high likelihood of turbulence.
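The standard definition is Re = ρUL/μ, where ρ is density, U a characteristic speed, L a characteristic length and μ the dynamic viscosity. The short sketch below applies it to a syrup-like and an air-like fluid; the property values, speed and length are rough, illustrative assumptions, not figures from the researchers.

```python
def reynolds_number(density, speed, length, viscosity):
    """Return Re = density * speed * length / dynamic viscosity (SI units)."""
    return density * speed * length / viscosity

# Hypothetical flow: 1 m/s past a 0.1 m object.
speed = 1.0    # m/s
length = 0.1   # m

# Rough, illustrative fluid properties (assumptions).
re_syrup = reynolds_number(density=1300.0, speed=speed, length=length, viscosity=2.0)
re_air = reynolds_number(density=1.2, speed=speed, length=length, viscosity=1.8e-5)

print(f"syrup-like fluid: Re ~ {re_syrup:.0f}")
print(f"air-like fluid:   Re ~ {re_air:.0f}")
```

With these assumed values, the air-like flow has a Reynolds number roughly a hundred times that of the syrup-like flow at the same speed and size.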

The more turbulent a gas or fluid, the more complicated its flow and the harder it is to simulate, all of which “makes it prohibitive for DNS to model high Reynolds number flows in the foreseeable future,” Chen says.

DNS also fares poorly at the so-called device level – the level of the average automobile engine, where macroscopic phenomena predominate and turbulence is certain.

Devices, Oefelein explains, present a “wide range of time and length scales which, from the smallest to the largest, typically span a factor of more than 100,000 that must be considered in a calculation.”

To develop a picture of “realistic internal combustion engine-type flows, DNS would probably require trillions of individual grid points,” says Georgia Tech’s Menon, who co-authored a paper on combustion with Oefelein last year. “It wouldn’t be feasible, even on a petascale machine.”

Large eddy simulations, on the other hand, “treat the problem with considerably fewer grid points,” Menon explains. “Given current supercomputer capabilities, LES can study flows in more complex geometries, such as internal combustion engines.”

Formulated in the late 1960s to model atmospheric air currents, LES divides turbulence into two phenomena: large eddies and small eddies. According to the theory of self-similarity, large eddies are dependent on flow geometry, whereas small eddies are independent and pretty much all alike.

Considering but not solving for the effect of small eddies on a turbulent current, LES simulates only the large eddies, considerably simplifying the turbulent motion of fluids.
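The idea of splitting a flow into large and small scales can be illustrated with a toy one-dimensional example. The sketch below is not RAPTOR's method or any production LES scheme: it simply applies a moving-average (box) filter to a made-up velocity signal, and all names and values in it are hypothetical. A real LES applies the filter to the governing equations and uses a model for the effect of the unresolved small eddies.

```python
import math

def box_filter(signal, half_width):
    """Periodic moving average over 2 * half_width + 1 neighbouring samples."""
    n = len(signal)
    width = 2 * half_width + 1
    return [
        sum(signal[(i + k) % n] for k in range(-half_width, half_width + 1)) / width
        for i in range(n)
    ]

# Made-up velocity: one large "eddy" plus a small, fast ripple.
n = 400
x = [2 * math.pi * i / n for i in range(n)]
large = [math.sin(xi) for xi in x]
small = [0.2 * math.sin(20 * xi) for xi in x]
velocity = [a + b for a, b in zip(large, small)]

# Filtering keeps the large scale; the remainder is the small-scale part.
resolved = box_filter(velocity, half_width=10)
residual = [u - r for u, r in zip(velocity, resolved)]

worst_large = max(abs(r - l) for r, l in zip(resolved, large))
worst_small = max(abs(r - s) for r, s in zip(residual, small))
print(f"max |resolved - large| = {worst_large:.3f}")
print(f"max |residual - small| = {worst_small:.3f}")
```

The filter width is chosen here to span about one ripple wavelength, so the filtered signal tracks the large eddy closely while the ripple ends up in the residual.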

Because it doesn’t time-average the effects of reactive-species concentrations, LES also can predict instantaneous flow characteristics. Averaged concentrations fail to consider localized areas, where the amount of a chemical or fuel may be high enough to react or combust.
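A small numerical illustration of why averaging can mislead: in the sketch below, a fuel concentration sampled at one location over eight instants has a time average well under a reaction threshold, yet one instant exceeds it. The sample values and the threshold are invented for illustration and carry no physical meaning.

```python
# Hypothetical fuel fraction at one location, sampled at eight instants.
samples = [0.01, 0.02, 0.01, 0.30, 0.02, 0.01, 0.02, 0.01]
reaction_threshold = 0.10  # hypothetical level needed for reaction

# A time-averaged model sees only the mean.
mean_concentration = sum(samples) / len(samples)
average_predicts_reaction = mean_concentration >= reaction_threshold

# An instantaneous view checks every sample.
reacting_instants = [i for i, c in enumerate(samples) if c >= reaction_threshold]

print(f"mean = {mean_concentration:.3f}, reacts on average: {average_predicts_reaction}")
print(f"instants above threshold: {reacting_instants}")
```

The averaged view predicts no reaction, while the instantaneous view flags the one sample where the local concentration is high enough.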

Where Chen and her team used S3D for their DNS simulations, Oefelein’s team uses RAPTOR, a software code designed specifically for application of LES to turbulent flows. Developed by Oefelein, RAPTOR solves the conservation equations of mass, momentum and energy for a chemically reacting gas or liquid flow system in complex geometries.

RAPTOR is versatile enough to account for chemistry and thermodynamics at the molecular level. But it’s also new, so Oefelein says he spent “significant man hours” in 2008 collaborating with Sankaran at ORNL, assuring that RAPTOR would run on Jaguar.

They’ve steadily optimized RAPTOR since then and have been using LES to study two next-generation engines under characteristic high-pressure, low-temperature conditions: the hydrogen-fueled internal combustion engine (H2-ICE) and the homogeneous-charge compression-ignition (HCCI) engine.

HCCI offers “high, diesel-like efficiencies with low emissions,” Oefelein says. “It burns lean.”

To perfect HCCI technology, researchers are seeking better ignition controls, higher limits on the rate of heat released from combustion, robust cold start capability and the ability to use alternative fuels.

Meeting these challenges depends on “developing an improved understanding of highly turbulent combustion processes at high pressures,” Oefelein says. Inside these next-gen engines, “LES calculations will provide the most resolved, high-fidelity picture of combustion processes to date. Results from these calculations will provide a clear understanding of fuel efficiency, emissions, turbulent mixing and turbulence-chemistry interactions.”