It’s 1973. Major oil-producing countries have united to raise prices. Arab nations have cut off oil shipments to much of the West. American drivers are struggling through long gas lines and shortages.

It would forever change the way Americans think about energy – and alter the path of scientific research.

“We all got up one morning in 1973 and there were lines at the gas pumps,” recalls Alvin Trivelpiece, who served as assistant director for research in the Controlled Thermonuclear Research (CTR) Division of the Atomic Energy Commission (AEC).

Alvin Trivelpiece

His boss, AEC Chair Dixy Lee Ray, responded with a proposed $10 billion program named Project Independence.

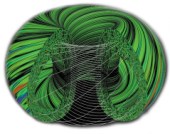

Scientists submitted a torrent of proposals for research on alternative energy sources – including fusion, which could produce abundant, clean power by fusing the nuclei of hydrogen isotopes in a hot, ionized gas called a plasma.

“It was an opportunity to make the case for greatly expanding the fusion program,” Trivelpiece says. “It wouldn’t have happened without the oil embargo.”

The budget for fusion research went from $20 million to $70 million in a couple of years and eventually reached about $500 million in 1980. “High-performance computing was the beneficiary of these circumstances,” Trivelpiece adds.

Trivelpiece wanted to give fusion scientists more powerful computing resources. He envisioned a centralized computer that remote users could reach over telecommunications links, a vision that helped change the way research is done.

The odyssey began with the Controlled Thermonuclear Research Computer Center (CTRCC), which later became the National Magnetic Fusion Energy Computer Center (NMFECC), then the National Energy Research Supercomputer Center (NERSC), and finally the National Energy Research Scientific Computing Center, the state-of-the-art national facility that serves government, industry, and academic users today.

In 1973, Trivelpiece was on leave from his post as a University of Maryland physics professor when he came to the AEC. He soon formed a study group to assess the CTR Division’s computing needs.

The group identified several areas that required special attention in order to develop expertise in predicting and understanding plasma behavior in large-scale thermonuclear confinement systems and fusion reactors. One suggestion was the creation of a CTR computer center with “its own special-purpose dedicated computer,” Trivelpiece says.

The CTR Standing Committee, an advisory group for the fusion program, recommended an independent review of the size and scope of computational activity in CTR. The Ad Hoc Panel on the Application of Computers to Controlled Thermonuclear Research, led by Bennett Miller of the AEC, was formed in May 1973 and delivered its report, Application of Computers to Controlled Thermonuclear Research, in July of that year.

The panel's report said large computers were needed to analyze the properties of magnetically confined plasmas and to simulate the characteristics of fusion reactors. It concluded that CTR would need to scale up immediately to two computers of the most powerful class in the world – a Control Data Corporation (CDC) 7600, an IBM 360/195 or a Texas Instruments ASC – each of which delivered about 10 megaflops of compute power. (A megaflops is one million floating-point calculations per second.)

The report would lead to the creation of the National CTR Computer Center at Lawrence Livermore National Laboratory in California. However, before that could happen, Trivelpiece had a lot of political work to do to convince his colleagues such a center was needed.

What Trivelpiece proposed was locating a powerful computer at one lab and connecting other labs through a telecommunications link.

“Experts in the fusion computing business told me that such a scheme wouldn’t work, but it’s likely that they wanted their own computer at their institution and were worried this approach would reduce research dollars,” he adds.

“At the time, the idea of doing this was pretty foreign,” he says. The total computing power available to CTR was the equivalent of one CDC 6600 – a computer with about one megabyte of memory and a peak performance of less than one megaflops. To put that into perspective, today’s personal computers perform in the tens or hundreds of megaflops and routinely contain at least a gigabyte of memory – roughly 1,000 times that of the CDC 6600.

“The minimum needed was the equivalent power of about two CDCs,” Trivelpiece says.

From his own experience as an experimental physicist, Trivelpiece knew that “it was impossible to compare two experiments because (the researchers) were all using different codes,” or computer programs. “We needed one transparent code that everyone could work on,” he says.

The fusion community also needed “unfettered access to the largest high-performance computers,” he adds.

The CTR Center was scheduled to begin operating in 1975, but obtaining the computer proved a challenge. The General Services Administration (GSA), the federal government’s acquisition agency, suggested that the fusion program use 10 existing GSA computers at locations around the country whenever they were available. Trivelpiece found this arrangement unacceptable.

Since the center would be located at Livermore Lab, Trivelpiece decided the lab should buy the mainframe, the remote-site computers and the telecommunications equipment. He argued that Livermore routinely made purchases of this scope, while it would be difficult for AEC headquarters to make them quickly.

“I fell on my sword on this matter and prevailed,” Trivelpiece says. The CTR Center began operating a CDC 7600, with 50-kilobit-per-second links initially connecting remote users at other major labs. That is roughly what a dial-up connection provides today, but it was lightning fast 30 years ago.

Trivelpiece gives the most credit for the creation of the CTR Center to Johnny Abbadessa, the AEC comptroller at the time.

“There wouldn’t have been a CTR or MFE Center without him,” Trivelpiece says. Abbadessa was intrigued by the idea of a central computer connected to multiple sites as a way to make better computing resources available to more scientists and engineers throughout the weapons lab complex.

In 1976 the CTR Computer Center was renamed the National Magnetic Fusion Energy Computer Center (NMFECC), and steps were taken to acquire a Cray-1, a supercomputer four times as fast as the CDC 7600 and packed with more memory.

Additional remote sites were added at the University of California–Los Angeles and the University of California–Berkeley. This was also the start of the Magnetic Fusion Energy Network (MFEnet), a satellite network connecting Livermore to a handful of key national laboratories. Numerous tail circuits linked those labs to other fusion energy sites.

The much-anticipated Cray-1 arrived in May 1978. Because software for the CDC 7600 was homegrown, the NMFECC undertook a major project to convert the 7600 operating system (called the Livermore Time Sharing System, or LTSS) and other codes to run on the new machine.

The resulting Cray Time Sharing System (CTSS) provided 24-hour reliability. It allowed interactive use of the Cray-1, and six other computer centers adopted it.

By then, 1,000 researchers were using the center. In 1979 it moved to a new building which housed four major computers and network equipment valued at $25 million.

The NMFECC continued expanding in the 1980s. Trivelpiece was named director of the DOE Office of Energy Research and again had a hand in defining the department’s computing resources.

The center’s major players – Los Alamos, Lawrence Livermore and Oak Ridge national laboratories, General Atomics and the Princeton Plasma Physics Lab – used 64% of the resources, with the remainder going to other labs.

After surveying a number of disciplines, Trivelpiece decided the NMFECC should also serve researchers in other fields.

Trivelpiece also recommended that MFEnet be combined with a similar network supporting high-energy physics research. The resulting Energy Sciences Network, or ESnet, was intended to serve as a single general-purpose scientific network for the energy research community. Staffers at the NMFECC were responsible for operating the new network. (Communications speeds, only 56 kilobits per second in the late 1980s when ESnet came into being, now exceed 600 megabits per second, more than 10,000 times faster.)

By the middle of the decade, nearly 3,500 users were taking advantage of NMFECC resources. The center added a Cray X-MP as well as the first Cray-2 supercomputer. The four-processor Cray-2 and the two-processor X-MP allowed codes to run on multiple processors at once, greatly increasing the computing speed available to users. The X-MP had a theoretical peak speed of 400 million calculations per second (400 megaflops); the Cray-2, with a theoretical peak speed of nearly 2 billion calculations per second (2 gigaflops), was twelve times as fast as the Cray-1 acquired in 1978.

With the dawn of a new decade, the NMFECC’s name was changed to the National Energy Research Supercomputer Center (NERSC) to reflect its broader mission. What had its roots in weapons code, fusion research and boxes of switches cobbled together to produce computing power at what now seem like excruciatingly slow speeds became a world-class supercomputing effort.

Today, NERSC at Lawrence Berkeley National Laboratory is home to seven supercomputers used by more than 2,000 researchers at national laboratories, universities, and industry. The most powerful of these is still being installed – a 100-teraflops Cray XT-4.

Many individuals contributed their knowledge and insight to the technical and administrative management of these machines, enabling growth from a peak performance of about 1 million calculations per second in 1974 to today’s machines, which can reach up to 100 trillion calculations per second.

“It was a privilege to have had the opportunity to help this effort along at a critical time, but there are many gifted people who really made it all work. They are the ones that deserve the most credit,” Trivelpiece says.

The bottom line for many, he adds, was “to create conditions that allow science to get done.”