In a project that harks back to the days of computer pioneer John von Neumann, scientists at Sandia National Laboratories in New Mexico are breaking down the entire concept of an operating system (OS) and rebuilding it.

Like von Neumann’s original computer architecture, the Config-OS framework they’re designing combines a family of core components, in this case to build special-purpose operating systems. Project coordinator Ron Brightwell and his team want to build an OS backbone sturdy enough to run a variety of applications on computers capable of 1 quadrillion calculations per second and beyond, while still delivering good performance and the features programmers need.

The project is part of the FAST-OS initiative sponsored by the Department of Energy’s Office of Advanced Scientific Computing Research. It brings together the massively parallel scientific computing experience of Brightwell and his team at Sandia, a DOE facility, the expertise of their longtime academic partner Barney Maccabe at the University of New Mexico, and the visionary work of Thomas Sterling at Louisiana State University.

Sterling is perhaps best known for his pioneering work on Beowulf, the first system to cluster inexpensive personal computers into a parallel processing machine. Now he’s developing platform designs for beyond-petascale computing. Brightwell says the partnership will ensure that Config-OS (as it’s called for now) will accommodate new computer architectures well into the next decade.


Those future architectures are likely to be based on the parallel programming model, which has been the dominant high-end computing scheme since the early 1990s. Parallel computing solves big problems much more quickly by breaking them into pieces so multiple processors can work on them simultaneously. Today, parallel computing systems have grown to thousands of processors, and the trend is likely to continue, producing computers with tens of thousands of processors over the next decade. A critical challenge now is developing operating systems that can effectively control these very large systems when they are used to solve our nation’s most complex science and engineering problems.
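To make the “break it into pieces” idea concrete, here is a minimal Python sketch (not Sandia code) that splits a sum-of-squares computation into nearly equal chunks, hands each chunk to a worker, and combines the partial results. The function names are hypothetical. Threads stand in for processors here; in CPython they will not speed up CPU-bound work because of the global interpreter lock, so treat this as an illustration of the decomposition pattern rather than of the speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, n_pieces):
    """Break `data` into n_pieces contiguous chunks whose sizes differ by at most one."""
    size, extra = divmod(len(data), n_pieces)
    chunks, start = [], 0
    for i in range(n_pieces):
        # The first `extra` chunks each take one leftover element.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def partial_sum_of_squares(chunk):
    """The work each worker (standing in for a processor) does on its own piece."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(data, workers=4):
    """Decompose the problem, run the pieces concurrently, then combine the results."""
    pieces = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(partial_sum_of_squares, pieces))
    return sum(partials)


if __name__ == "__main__":
    print(split(list(range(10)), 3))
    print(parallel_sum_of_squares(list(range(10)), workers=3))
```

The same split, compute, combine structure is what lets a problem spread across thousands of processors; only the scale and the communication layer change.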

When high-performance parallel computing was first developed, programmers created operating systems specific to each platform, Brightwell explains. Some programmers elected to build a simple, “lightweight” OS that didn’t compromise system speed. Others chose to use existing full-featured systems developed for time-shared environments, where each server simultaneously juggled several users and applications. The relatively inexpensive Beowulf clusters and the Linux open-source operating system enabled parallel programming with a larger set of OS services and features. However, Brightwell points out, many of those services deal with file systems and virtual memory, which are irrelevant to large parallel systems like Sandia’s Red Storm, the first computer in the Cray XT3 product line.

This multi-institutional research team wants the best of both worlds: an OS that doesn’t compromise system speed, but offers all the components a programmer might want for a custom computer simulation. Sandia researchers often run these kinds of large-scale simulations, such as models of metal stresses in a fire environment or turbulent reacting flow in internal combustion engines.

The team first wrestled with the trade-off of running a “full-featured” OS such as Linux versus a “lightweight” OS such as Catamount, which was built to run on the Cray XT3. The challenge is to maintain Catamount’s scalability (the capacity to run well on an ever-greater number of processors) and performance while allowing for additional features as needed. The goal is to build a framework for operating systems appropriate for a wider variety of parallel computing architectures and applications.

“The idea is to understand the performance issues when you take an operating system like Catamount, break it into components and then put those components back together,” Brightwell says.

The researchers first defined the crucial components (memory management, networking and scheduling), then built them into a flexible, generic framework that can be customized to the needs of a specific project. The system has been demonstrated on a small number of platforms: the Xen hardware virtualization environment, the PowerPC architecture, and the Cray XT3.

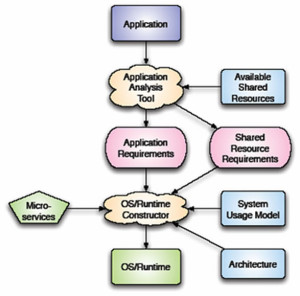

“We’ve got the framework now, and we are in the middle of determining what the performance implications of the framework will be,” Brightwell says. “In theory, we envision our framework to be sophisticated enough for a programmer to be able to look at a menu of services and say, ‘Here are the services that I want, build me an operating system that gives me those, and only those, services.’”
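Brightwell’s “menu of services” can be pictured as a dependency-resolution step. The Python sketch below is hypothetical: the catalog entries, their dependencies and the `build_os` function are illustrative assumptions, not Config-OS’s actual components or interface. It walks the requested services depth-first and returns them, together with whatever they depend on, in build order, leaving out everything that was not asked for.

```python
# Hypothetical component catalog: each service lists the services it depends on.
# Names and dependencies are illustrative only, not Config-OS's real components.
CATALOG = {
    "memory": [],
    "scheduler": ["memory"],
    "network": ["memory"],
    "virtual_memory": ["memory"],
    "filesystem": ["memory", "scheduler"],
}


def build_os(requested, catalog=CATALOG):
    """Return the requested services plus their dependencies, dependencies first."""
    ordered, visiting, done = [], set(), set()

    def visit(name):
        if name in done:
            return
        if name not in catalog:
            raise KeyError(f"unknown service: {name}")
        if name in visiting:
            raise ValueError(f"dependency cycle at: {name}")
        visiting.add(name)
        for dep in catalog[name]:
            visit(dep)  # include what this service needs before the service itself
        visiting.remove(name)
        done.add(name)
        ordered.append(name)

    for name in requested:
        visit(name)
    return ordered


if __name__ == "__main__":
    print(build_os(["network", "scheduler"]))
```

Here `build_os(["network", "scheduler"])` returns `["memory", "network", "scheduler"]`: the file system and virtual memory components never enter the build because nothing requested them, which is the “those, and only those, services” property in miniature.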

Long-term plans for Config-OS include building a library of components that will allow programmers to choose which services they need for a given simulation, and collaborating with application developers to test and debug the system.