Two new Department of Energy-sponsored telescopes, the Dark Energy Spectroscopic Instrument (DESI) and the Vera C. Rubin Observatory, will map cosmic structure in unprecedented detail. At Argonne and Lawrence Berkeley national laboratories, teams have developed a pair of the world’s most powerful cosmological simulation codes and are ready to translate the telescopes’ tsunami of observational data into new insights.

“Our job is to provide the theoretical backdrop to these observations,” says Zarija Lukić, a computational cosmologist at LBNL’s Cosmology Computing Center. “You cannot infer much from observations alone about the structure of the universe without also having a set of powerful simulations producing predictions for different physical parameters.”

Lukić is a lead developer of Nyx, named after the Greek goddess of night. For more than a decade the Nyx group has collaborated with the team behind the Hardware/Hybrid Accelerated Cosmology Code (HACC) at Argonne. That team is co-led by computational cosmologist Katrin Heitmann, who is also the principal investigator for an Office of Science SciDAC-5 project in High Energy Physics (HEP) that will continue to advance code development. (See sidebar, “About SciDAC.”)

With a computing time grant from the ASCR Leadership Computing Challenge (ALCC), the Nyx and HACC teams are fine-tuning their codes, readying them to tease out cosmic details from incoming DESI and Rubin Observatory data.

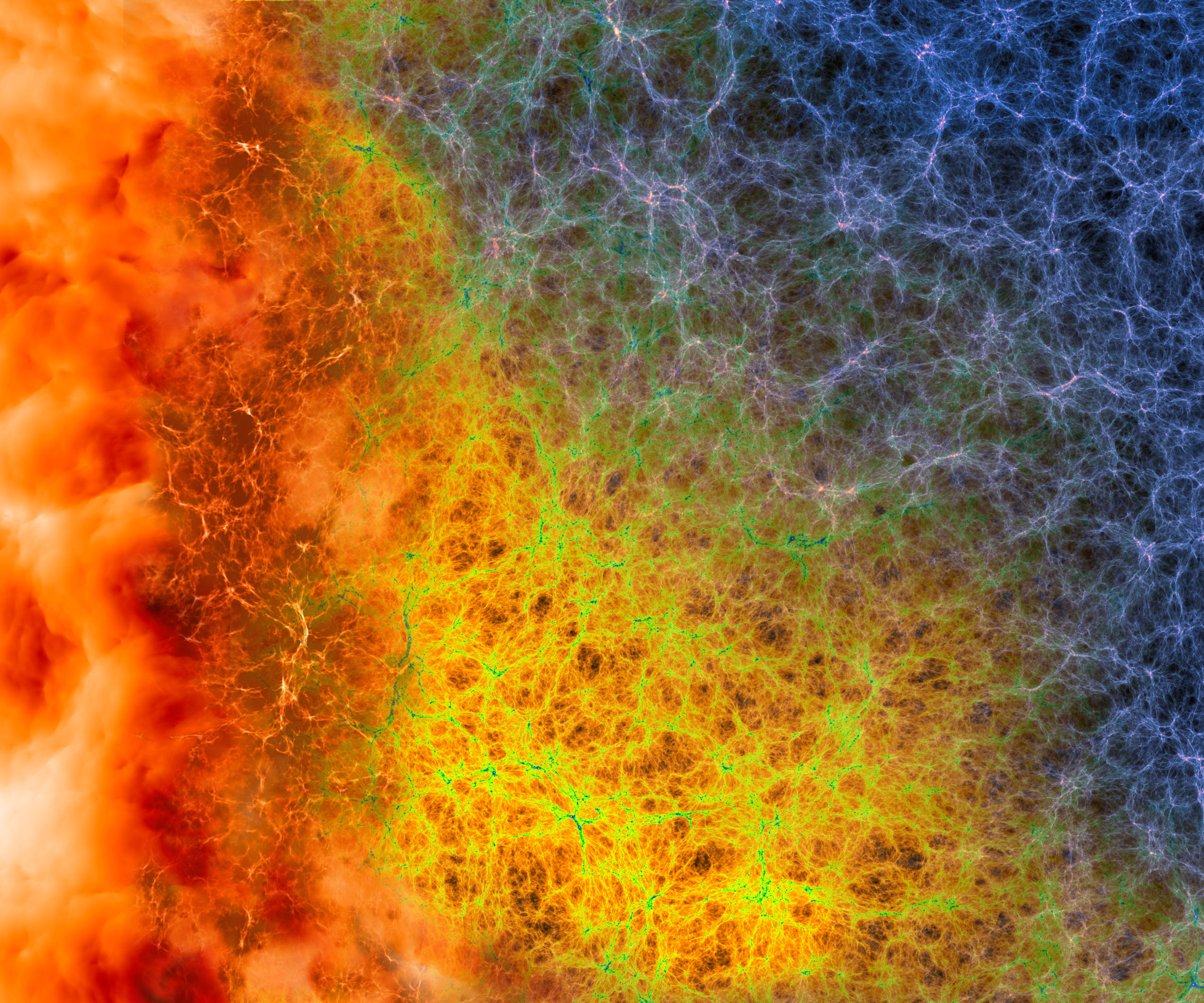

The codes and telescopes focus on understanding how dark matter and dark energy shape cosmic structure and dynamics. Matter in the universe is unevenly distributed. Astronomers see a cosmic web, a vast tendril-like network of dense regions of gas and galaxies interspersed with low-density voids.

The standard explanation for the cosmic web is the Lambda-CDM (cold dark matter) model. It posits that the cosmos’ total mass and energy is composed of 68% dark energy, 27% dark matter and only about 5% visible, or baryonic, matter – the stars, planets and us.

The exact nature of dark matter particles remains a mystery, as does another cosmic conundrum linked to dark energy: “What causes the accelerated expansion of the universe?” asks Heitmann, leader of Argonne’s Cosmological Physics and Advanced Computing group.

Heitmann’s HACC team collaborates closely with the Rubin Observatory’s Legacy Survey of Space and Time (LSST) observing campaign. Starting in early 2025, Rubin, in northern Chile, will record the entire visible southern sky every few days for a decade, tracking the movement of billions of galaxies in the low-redshift, or nearby, universe. This will produce unprecedented amounts of data: six million gigabytes per year.

The incoming deluge doesn’t deter Heitmann. The HACC team won an award at the SC19 high-performance computing conference for a record-breaking transfer of almost three petabytes of data to generate virtual universes on Oak Ridge National Laboratory’s Summit supercomputer. The simulations produce synthetic skies, virtual versions of what a telescope will see, that let astronomers plan and test observing strategies.

“Our simulations are geared toward supporting the large observational surveys that are coming online,” says Heitmann, who’s also a spokesperson for LSST’s Dark Energy Science Collaboration. “They are large cosmic volume with very high resolution and there are only a handful of such simulations currently available in the world.”

In 2019, the HACC team used the largest ALCC allocation ever – the entire Argonne Mira supercomputer for several months – to produce a synthetic sky for both the DESI and Vera Rubin teams to use when testing their observation strategies.

The Nyx team is working most closely with DESI, which began collecting data in 2022. Located at the Kitt Peak Observatory in Arizona, DESI’s four-year spectroscopic observing campaign will map the cosmos’ large-scale structure across time using its 5,000-eye fiber-optic robotic telescope. It will observe about 30 million pre-selected galaxies and quasars across a third of the night sky.

As part of this, DESI will observe about 840,000 distant, or high redshift, quasars, three times as many as previous surveys collected. Quasars are astoundingly bright objects – thousands of times as bright as an entire galaxy. As their light travels between the quasar and Earth, some wavelengths are absorbed by neutral hydrogen gas present in the intergalactic medium between distant galaxies. Thus, the quasar’s distinctive light fingerprint, known as the Lyman-alpha forest, maps intergalactic hydrogen distribution.
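The link between absorption wavelength and gas location can be made concrete with a small sketch. Hydrogen's Lyman-alpha transition has a rest wavelength of about 1215.67 angstroms, and cosmic expansion stretches it by a factor of (1 + z) for gas at redshift z, so each absorption line in a quasar spectrum marks gas at a different distance. The function names and the example redshifts below are illustrative assumptions, not part of any DESI or Nyx pipeline.

```python
# Rest-frame wavelength of the hydrogen Lyman-alpha transition, in angstroms.
LYMAN_ALPHA_REST_ANGSTROM = 1215.67


def observed_wavelength(z: float) -> float:
    """Wavelength at which gas at redshift z imprints Lyman-alpha absorption."""
    return LYMAN_ALPHA_REST_ANGSTROM * (1.0 + z)


def absorber_redshift(observed_angstrom: float) -> float:
    """Invert the relation: redshift of the gas behind an absorption line."""
    return observed_angstrom / LYMAN_ALPHA_REST_ANGSTROM - 1.0


if __name__ == "__main__":
    # Illustrative redshifts only; these are not values taken from DESI data.
    for z in (2.0, 2.5, 3.0):
        print(f"gas at z = {z:.1f} absorbs near {observed_wavelength(z):.1f} angstroms")
```

Reading a spectrum from short to long wavelengths therefore steps from nearer gas toward the quasar itself, which is why a single line of sight samples the hydrogen distribution along its whole path.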

Statistically analyzing and modeling hundreds of thousands of DESI Lyman-alpha forest spectra will provide unprecedented constraints on the mass and properties of candidate dark matter particles, says Lukić, whose LBNL post-doctoral colleague, Solène Chabanier, leads the working group focused on this problem.

Nyx has a strong track record of modeling these spectra to better understand cosmic structure. For example, for a 2017 Science paper, the group used Nyx simulations and Lyman-alpha forest observations to identify gas distribution smoothness in the intergalactic medium.

HACC and Nyx were born about 15 years ago with the common goal of creating state-of-the-art, highly scalable codes that run on any supercomputing platform, capitalizing on new generations of DOE supercomputers. Both codes are key components of the DOE Exascale Computing Project’s (ECP) ExaSky program, which is scheduled to conclude at the end of this year. HEP and ASCR, as part of SciDAC-5, will continue to develop the codes.

What makes Nyx and HACC a great tag team, Lukić says, is that they use very different mathematical approaches to hydrodynamics, that is, to how the codes model elements such as pressure, temperature and baryonic matter movement.

Nyx uses adaptive mesh refinement, dividing space into a grid that becomes finer where more resolution is needed and that describes the hydrodynamical properties – the fluidic forces – of baryons. The HACC code was originally developed for gravity-only simulations, but as part of the ECP, the HACC team added a particle-based hydrodynamics component, which is now a major focus of the HEP-SciDAC collaboration.
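The idea behind adaptive mesh refinement can be illustrated with a toy one-dimensional sketch: start from a coarse grid and keep splitting only those cells where the density is high. This is a simplified illustration under stated assumptions, not Nyx's actual scheme; the density profile, the threshold and all names here are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Cell = Tuple[float, float, int]  # (left edge, right edge, refinement level)


def refine(cells: List[Cell], density: Callable[[float], float],
           threshold: float, max_level: int) -> List[Cell]:
    """Split each cell whose midpoint density exceeds the threshold, up to max_level."""
    result: List[Cell] = []
    stack = list(reversed(cells))  # pop() then yields cells from left to right
    while stack:
        left, right, level = stack.pop()
        mid = 0.5 * (left + right)
        if level < max_level and density(mid) > threshold:
            # Push the right child first so the left child is handled next.
            stack.append((mid, right, level + 1))
            stack.append((left, mid, level + 1))
        else:
            result.append((left, right, level))
    return result


def toy_density(x: float) -> float:
    """Hypothetical profile: one dense clump at x = 0.5 on a uniform background."""
    return 1.0 + 50.0 * math.exp(-((x - 0.5) / 0.1) ** 2)


if __name__ == "__main__":
    coarse = [(i * 0.25, (i + 1) * 0.25, 0) for i in range(4)]
    for left, right, level in refine(coarse, toy_density, threshold=5.0, max_level=3):
        print(f"level {level}: [{left:.5f}, {right:.5f}]")
```

Cells far from the clump stay coarse while those near it shrink, so computing effort goes where the structure is. A particle-based method, by contrast, follows the matter itself rather than a fixed set of cells.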

“LSST will go to smaller and smaller scales with its measurements and at these scales you have to understand what baryonic physics is doing to interpret the measurements,” Heitmann says.

Equipping both codes with hydrodynamic models provides a unique ability to test observations, Lukić says. Part of the team’s ALCC allocation is to further test this congruence. “If two codes based on entirely different mathematical methodologies precisely agree on some observation, then you are much more confident that you’ve got the numerical component right.”
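A cross-check of this kind boils down to comparing the same summary statistic from two independent codes and asking whether the results agree within a tolerance. The sketch below shows one simple way to do that; the function names, the 1% tolerance and the sample numbers are assumptions for illustration and do not represent the teams' actual comparison procedure.

```python
from typing import Sequence


def max_fractional_difference(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest |a_i - b_i| relative to the mean magnitude of each pair."""
    if len(a) != len(b):
        raise ValueError("both codes must report the same number of values")
    worst = 0.0
    for x, y in zip(a, b):
        scale = 0.5 * (abs(x) + abs(y))
        if scale > 0.0:  # skip pairs where both values are exactly zero
            worst = max(worst, abs(x - y) / scale)
    return worst


def codes_agree(a: Sequence[float], b: Sequence[float],
                tolerance: float = 0.01) -> bool:
    """True if every paired value agrees within the fractional tolerance."""
    return max_fractional_difference(a, b) <= tolerance


if __name__ == "__main__":
    # Hypothetical outputs of one statistic from two codes (made-up numbers).
    grid_code = [100.0, 50.0, 25.0]
    particle_code = [100.5, 50.1, 24.9]
    print("agree within 1%:", codes_agree(grid_code, particle_code))
```

Agreement between methods built on different mathematics does not prove the physics is right, but it does suggest that numerical artifacts of either method are not driving the result.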