In the late 2000s, Lawrence Berkeley National Laboratory scientists Carl Steefel and David Trebotich had a computational dream. They wanted to apply their unique code for modeling fluid flow and geochemical reactions in microscopic sedimentary rock fractures and pores to a piece of simulated rock 10 times bigger than ever. The goal: modeling a piece of shale about the size and shape of a flat stick of chewing gum at the level of microns (millionths of a meter). The problem: They needed a bigger supercomputer.

“When most people think of rock fractures they think of gigantic features like the San Andreas Fault,” says Steefel, a Berkeley Lab computational geoscientist. Most fractures, however, are just 10 to 100 microns wide. And understanding the physics of how these cracks form and grow is critical if scientists are to simulate and predict how underground reservoirs behave, especially those under consideration for carbon sequestration. “Modeling this requires an enormous amount of computational power – it’s an exascale problem” that will tap the next generation of supercomputers.

Steefel’s and Trebotich’s cutting-edge computational geoscience ambitions are bearing fruit in Subsurface, an Exascale Subsurface Simulator of Coupled Flow, Transport, Reactions, and Mechanics. It’s one of about two dozen applications under development in the Department of Energy’s Exascale Computing Project. Subsurface will be among the first science applications to run on Frontier, DOE’s first exascale machine, when it comes online at the Oak Ridge Leadership Computing Facility this year.

The multiphysics simulations Subsurface produces will provide critical insights to guide subterranean carbon storage and make fossil fuel extraction more efficient and environmentally friendly, says Steefel, the project’s science lead and a pioneer in computational geoscience. (His 1992 Yale University thesis on numerical modeling of reactive transport in a geological setting helped launch the field.) The dynamics of micron-sized fractures are key to the long-term success of mitigating climate change by burying carbon dioxide (CO2) and of other applications such as hydrogen storage. These proposed subsurface reservoirs are often under shale caprocks and are in former oil and gas fields riddled with concrete-encased wellbores.

“When CO2 dissolves in water,” Steefel explains, “it forms an acidic fluid that attacks the concrete very vigorously, dissolves it, weakens it mechanically and potentially wormholes it, forming tiny fractures that can then expand, releasing the CO2. And it’s the same possible failure mechanisms for shale caprocks.”

Subsurface’s core goal, Steefel says, is to accurately model the complex geochemical and geomechanical stressors in rock and concrete to predict fractures and track their micron-level evolution – a key to a reservoir’s long-term stability.

For the past decade Steefel, Trebotich and LBNL staff scientist and computational geoscientist Sergi Molins have collaborated on Chombo-Crunch, the software at Subsurface’s heart. Chombo-Crunch combines two powerful codes. The first: a versatile computational fluid dynamics (CFD) program Trebotich developed using Chombo, an LBNL computational software package. The second: CrunchFlow, Steefel and Molins’s code – refined over two decades – for simulating how chemical reactions occur and change as fluids travel underground.

“Chombo-Crunch’s real strength starts with its ability to do direct numerical simulation from arbitrarily complex image data,” says Trebotich, a staff scientist in Berkeley Lab’s Applied Numerical Algorithms Group and the code development lead for Subsurface.


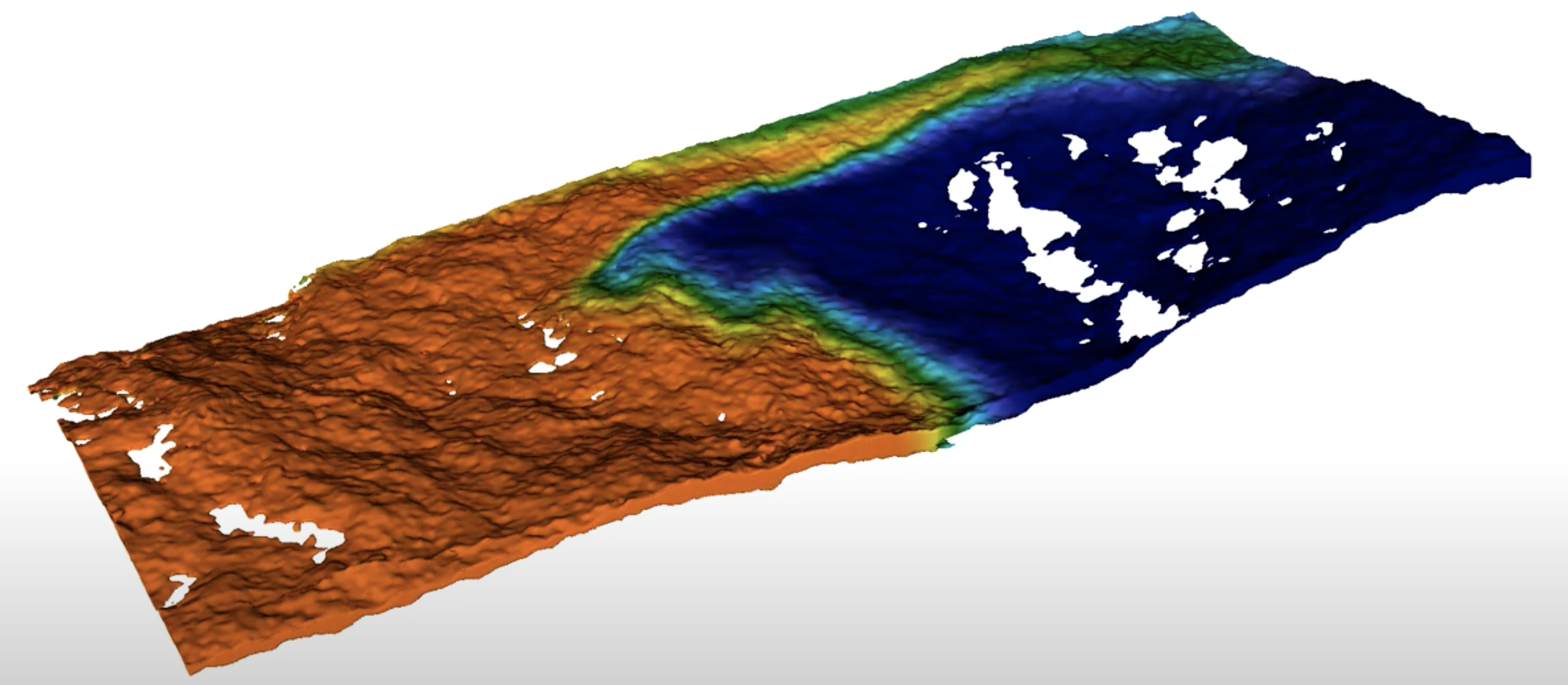

To begin, an advanced light source or scanning-electron microscope takes a series of micron-resolution image slices of a rock sample. These are mathematically mapped onto a grid, letting Chombo-Crunch explicitly resolve reactive surface area, a key feature of the combined code.
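To give a feel for what resolving reactive surface area on a grid can mean, here is a minimal Python sketch. It is not Chombo-Crunch’s method. It assumes, hypothetically, that each image slice is a grayscale array, that brighter voxels are mineral and darker ones pore space, and it estimates the mineral-fluid interface by counting voxel faces where solid meets pore. The function name `reactive_surface_area` and its `threshold` and `voxel_size` parameters are illustrative inventions.

```python
import numpy as np


def reactive_surface_area(slices, threshold, voxel_size):
    """Estimate mineral-fluid interface area from a stack of 2D image slices.

    Hypothetical assumptions: voxels brighter than `threshold` are solid
    mineral, the rest are pore space, and each face shared by a solid voxel
    and a pore voxel contributes voxel_size**2 of reactive surface. Faces on
    the outer edge of the imaged volume are not counted.
    """
    # Stack the slices into a 3D volume indexed (z, y, x) and segment it.
    solid = np.stack(slices, axis=0) > threshold
    faces = 0
    for axis in range(3):
        # Neighboring voxels that differ along this axis share an interface face.
        faces += np.count_nonzero(np.diff(solid.astype(np.int8), axis=axis))
    return faces * voxel_size ** 2


# Toy sample: a single solid voxel in the middle of a 3 x 3 x 3 pore volume.
slices = [np.zeros((3, 3)) for _ in range(3)]
slices[1][1, 1] = 255.0
print(reactive_surface_area(slices, threshold=128, voxel_size=2.0))  # 24.0
```

The lone solid voxel exposes six faces of area 4, hence 24. This staircase count tends to overestimate the area of smoothly curved grain surfaces, so it should be read only as an illustration of the idea.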

In 2015, Trebotich used the CFD solver to simulate flow in a roughly 100-micron cube of shale at 24-nanometer (billionths of a meter) resolution. The simulation required 700,000 cores on the Cori supercomputer at DOE’s National Energy Research Scientific Computing Center (NERSC) to model about 20 billion individual grid cells and 120 billion degrees of freedom.

“It was probably the largest complex geometry computation ever,” says Trebotich, who has been one of NERSC’s largest individual users over the past decade, consuming more than a billion hours of high performance computing (HPC) time.

“This 100-micron cube simulation for shale was great, but you really want to be running a centimeter cube” to get a representative sample of the material’s behavior – one that researchers can extend to reservoir scale, Steefel says. Enter Subsurface, which will achieve this exascale feat by modeling an estimated trillion grid cells with 16 trillion degrees of freedom, including hydraulic, mechanical and chemical factors.
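The figures quoted above can be cross-checked with simple arithmetic. The short Python sketch below computes degrees of freedom per grid cell for the 2015 Cori run and for the Subsurface target and, assuming a uniform cubic grid (an illustrative simplification; the actual mesh is not described here), the cell size a trillion cells would imply for a centimeter cube. The function names are hypothetical.

```python
def dof_per_cell(degrees_of_freedom, grid_cells):
    """Average number of unknowns carried by each grid cell."""
    return degrees_of_freedom / grid_cells


def uniform_spacing(edge_length, total_cells):
    """Cell size if `total_cells` fill a cube of side `edge_length` uniformly.

    Illustrative assumption: a uniform cubic grid with no adaptive refinement.
    The result is in the same units as `edge_length`.
    """
    cells_per_edge = round(total_cells ** (1 / 3))
    return edge_length / cells_per_edge


# 2015 Cori run: about 120 billion degrees of freedom on 20 billion cells.
print(dof_per_cell(120e9, 20e9))  # 6.0
# Subsurface target: 16 trillion degrees of freedom on a trillion cells.
print(dof_per_cell(16e12, 1e12))  # 16.0
# A trillion uniform cells in a 1 cm (10,000-micron) cube: cell size in microns.
print(uniform_spacing(10_000, 1e12))  # 1.0
```

On these assumptions the exascale target carries more than twice as many unknowns per cell as the flow-only 2015 run, consistent with the added hydraulic, mechanical and chemical factors, and a trillion cells spread evenly over a centimeter cube works out to micron-scale cells.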

Steefel says the 10-person Subsurface team sees the move to exascale as an opportunity to improve its simulations by adding geomechanics – movement of the rock fractures themselves. To that end Subsurface couples Chombo-Crunch with GEOSX, another DOE multiphysics simulator for subsurface carbon storage whose capabilities include geomechanics. A group led by scientist Randy Settgast of the Atmospheric, Earth, and Energy Division at Lawrence Livermore National Laboratory developed GEOSX.

“We teamed up with them and basically said let’s do a high-performance computing handshake between the two code bases,” Steefel says.

However, the two codes use fundamentally different mathematical techniques: Chombo-Crunch is a structured-grid finite-volume code, while GEOSX is an unstructured-grid finite-element code. Subsurface adapts by running the two codes in the equivalent of a tightly linked tango, sharing information while remaining separate.

“They’re mutually exclusive – we have a way of coupling the two without having to embed either in the other,” Trebotich says.
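The handshake itself isn’t described in detail here. One common way to couple two codes without embedding either in the other is sequential (staggered) coupling: each solver keeps its own grid and state, and a thin coupler maps fields between grids every step. The Python sketch below illustrates that general pattern under loudly hypothetical assumptions: toy `ChemistrySolver` and `MechanicsSolver` classes with made-up physics rules, and a hand-written `ELEMENT_TO_CELLS` map standing in for the structured-to-unstructured grid transfer. It is not how Subsurface, Chombo-Crunch or GEOSX are implemented.

```python
# Toy sequential coupling between two independent solvers. All physics rules,
# rates and the grid map are hypothetical, chosen only to show the pattern.

# Which structured-grid cells each unstructured finite element overlaps.
ELEMENT_TO_CELLS = [[0, 1], [2, 3]]


def restrict(cell_values):
    """Average structured-grid cell values onto each finite element."""
    return [sum(cell_values[c] for c in cells) / len(cells) for cells in ELEMENT_TO_CELLS]


def prolong(element_values, n_cells):
    """Copy each element's value back onto the structured cells it covers."""
    out = [0.0] * n_cells
    for value, cells in zip(element_values, ELEMENT_TO_CELLS):
        for c in cells:
            out[c] = value
    return out


class ChemistrySolver:
    """Stand-in for the finite-volume flow and reaction side (toy physics)."""

    def __init__(self, porosity, rate=0.1):
        self.porosity = list(porosity)
        self.rate = rate  # hypothetical porosity gain per unit aperture per step

    def step(self, aperture):
        # Wider fractures admit more acidic fluid, so more solid dissolves.
        self.porosity = [min(1.0, p + self.rate * a) for p, a in zip(self.porosity, aperture)]
        return self.porosity


class MechanicsSolver:
    """Stand-in for the finite-element geomechanics side (toy physics)."""

    def __init__(self, base_aperture, softening=2.0):
        self.base = list(base_aperture)
        self.softening = softening  # hypothetical aperture gain per unit porosity

    def step(self, porosity):
        # More porous, weaker rock lets each fracture open wider.
        return [b * (1.0 + self.softening * p) for b, p in zip(self.base, porosity)]


def couple(chem, mech, n_steps):
    """Alternate the two solvers, exchanging fields through the grid map each step."""
    n_cells = len(chem.porosity)
    porosity = chem.porosity
    aperture = prolong(mech.step(restrict(porosity)), n_cells)
    for _ in range(n_steps):
        porosity = chem.step(aperture)  # chemistry sees the latest mechanics
        aperture = prolong(mech.step(restrict(porosity)), n_cells)  # and vice versa
    return porosity, aperture


chem = ChemistrySolver([0.1, 0.1, 0.2, 0.2])
mech = MechanicsSolver([0.5, 0.5])
porosity, aperture = couple(chem, mech, n_steps=3)
print(porosity, aperture)
```

Because the solvers trade only plain arrays through `restrict` and `prolong`, either one could be replaced without touching the other. Whether Subsurface uses this staggered scheme or a more tightly iterated one is an open assumption in this sketch.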

As with all the Exascale Computing Project science initiatives, Trebotich and Steefel say a primary challenge has been optimizing Subsurface’s component codes to use graphics processing units (GPUs). Frontier and DOE’s other major HPC systems use a hybrid architecture of both central processing units (the chips driving most previous HPC systems) and GPUs, which excel at efficiently handling many small pieces of data simultaneously.

“It’s a new architecture, and so the algorithms have to be rewritten,” Steefel says. It also requires close collaboration with DOE groups that are recasting the foundational solver code libraries Subsurface uses.

An additional focus when working with GPUs is finding “the new sweet spot” for load balancing to optimize Subsurface’s memory footprint, Trebotich says. On Cori, Chombo-Crunch was optimized to run with a load-balancing sweet spot of one 32³ box of cells per core. Experimental work on exascale development machines led him to estimate that what required eight nodes on Cori will require just a single GPU node on Frontier. “So we can solve a bigger problem – and that’s exactly what we set out to do.”
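To put the one-box-per-core sweet spot in numbers, here is a small Python sketch. It computes the cells in a 32³ box, how many such boxes a trillion-cell grid would need, and a naive even split of boxes across workers. The equal-cost assumption and the helper names `boxes_needed` and `assign_boxes` are illustrative; real load balancers also weigh how much work each box carries.

```python
BOX_EDGE = 32  # box size cited above: 32 x 32 x 32 cells


def boxes_needed(total_cells, box_edge=BOX_EDGE):
    """Number of cubic boxes needed to hold `total_cells` cells (rounded up)."""
    cells_per_box = box_edge ** 3
    return (total_cells + cells_per_box - 1) // cells_per_box


def assign_boxes(n_boxes, n_workers):
    """Deal boxes out as evenly as possible; returns the box count per worker.

    Assumes every box costs the same amount of work (a simplification).
    """
    base, extra = divmod(n_boxes, n_workers)
    return [base + 1 if w < extra else base for w in range(n_workers)]


print(BOX_EDGE ** 3)          # 32768 cells per box
print(boxes_needed(10**12))   # 30517579 boxes for a trillion cells
print(assign_boxes(10, 4))    # [3, 3, 2, 2]
```

At one box per core, a trillion cells corresponds to roughly 30.5 million boxes, which illustrates why packing far more work onto each GPU node matters at this scale.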

Just how big a simulation Subsurface will ultimately achieve at exascale is an open question, Steefel says. He speaks with three decades of experience in scaling up geoscience numerical models, starting in the late 1980s with a 32-bit VAX computer that had less power than today’s average smartphone. In the back of his mind, he says, there’s the hope of scaling that stick-of-gum-sized shale simulation into a small reservoir-sized model. “It’s our stretch goal.”