Part of the Science at the Exascale series.

If clean, abundant energy from nuclear fusion – the process that generates the sun’s power – becomes a reality, powerful supercomputers will have helped get us there.

Detailed computational models are key to understanding and addressing the complex technical challenges associated with developing this carbon-free energy source that produces no significant toxic waste and burns hydrogen, the most abundant element in the universe.

Accurate, predictive simulations present computational challenges that must be overcome to harvest important information on the fundamental reactions powering ITER, the multibillion-dollar international effort to build a fusion experiment capable of producing significantly more energy than it consumes.

The models that will point the way to commercially viable fusion energy will depend on exascale computing and other new technologies developed along the way. Access to exascale computers has the potential to accelerate progress toward a clean-energy future, says William Tang, director of the Fusion Simulation Program at the Princeton Plasma Physics Laboratory at Princeton University.

“We have good ideas how to move forward,” Tang says, “but to enhance the physics fidelity of the simulation models and incorporate the added complexity that needs to be taken into account will demand more and more powerful computing platforms and more systematic experiments to validate models.”

Scientists now focus simulations on single aspects of plasma physics. A realistic model must integrate multiple physical simulations, and that will require the next generation of computing power. Next-generation computers, expected to come on line within the decade, will be capable of an exaflops – 1 million trillion (10^18) calculations per second – about 1,000 times more powerful than today’s fastest machines.
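To make the scale concrete, the arithmetic behind those figures can be sketched in a few lines of Python. The petaflops baseline and the size of the example job are assumptions chosen for illustration, not numbers from the researchers, and the runtime estimate ignores memory, input/output and communication costs.

```python
# Back-of-envelope arithmetic for the exaflops figure quoted above.
MILLION = 10**6
TRILLION = 10**12

EXAFLOPS = MILLION * TRILLION  # "1 million trillion" operations per second
PETAFLOPS = 10**15             # assumed scale of the fastest machines at the time


def runtime_seconds(total_operations: int, rate_flops: int) -> float:
    """Ideal runtime of a job at a sustained rate (no I/O or communication cost)."""
    return total_operations / rate_flops


if __name__ == "__main__":
    job = 10**21  # hypothetical workload, in floating-point operations
    print("speedup:", EXAFLOPS // PETAFLOPS)
    print("days at petaflops:", runtime_seconds(job, PETAFLOPS) / 86400)
    print("minutes at exaflops:", runtime_seconds(job, EXAFLOPS) / 60)
```

Under these assumptions, a job that needs a million seconds (roughly 11.6 days) at a sustained petaflops would finish in 1,000 seconds, under 17 minutes, at a sustained exaflops.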

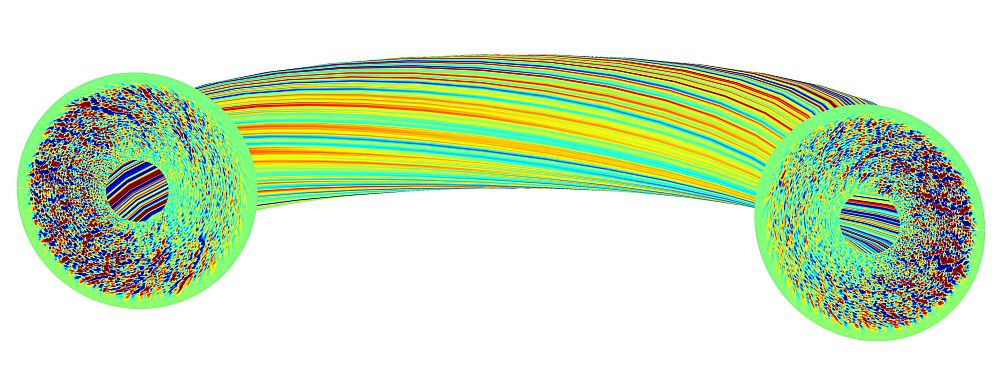

This simulation of the DIII-D fusion energy experiment, operated by General Atomics, illustrates disruptions that can terminate plasma discharges, loading the reactor walls with thermal and magnetic energy and possibly damaging them. Certain field lines, colored blue, open to strike the top and bottom divertor plates during the disruption. The divertor plates exhaust hot gases and impurities from the reactor. Image courtesy of Scott Kruger, Tech-X Corp.

“The fusion energy science or plasma physics area has been engaged in high-performance computing (HPC) for a long time,” says Tang, who co-chaired a major workshop on the subject sponsored by the Department of Energy (DOE) offices of Fusion Energy Sciences and Advanced Scientific Computing Research. “Some of our applications have computed at the bleeding edge throughout the history of high-performance computing, and that is still the case today.”

In the coming years, computer scientists and applied mathematicians will have to devise new algorithms to deal with ever-increasing concurrent operation in advanced HPC platforms, along with improved methods to manage, analyze and visualize the torrent of data that more powerful simulations and larger experiments, such as ITER, will generate.

They will also need improved formulations for translating the physics of fusion, and other complex natural and engineered systems, into mathematical representations. This includes advanced techniques to develop integrating frameworks, effective workflows and the necessary tools to make comprehensive simulation models run efficiently on exascale machines.

Time-hungry models

Computer simulation is and will continue to be the only game in town for examining some aspects of fusion energy technology, says Zhihong Lin, a professor of physics and astronomy at the University of California, Irvine. “You can do some things (with computers) you can’t do now in experiments, because right now we don’t have burning plasma experiments” like ITER.

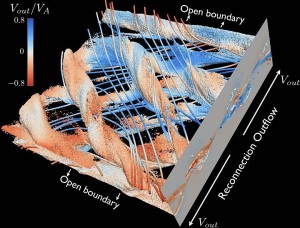

This three-dimensional kinetic simulation shows magnetic reconnection in a large-scale electron-positron plasma. Isosurfaces representing points of a constant density value are colored by the reconnection outflow velocity. Magnetic islands develop at resonant surfaces across the layer, leading to complex interactions of flux ropes over a range of different angles and spatial scales. Image courtesy of William Daughton, Los Alamos National Laboratory.

Lin leads a DOE Scientific Discovery through Advanced Computing (SciDAC) project to assess the effects of energetic particles on the performance of burning plasmas in ITER. The project has received grants of 20 million processor hours in 2010 and 35 million hours in 2011 from INCITE, DOE’s Innovative and Novel Computational Impact on Theory and Experiment program. The research uses Oak Ridge National Laboratory’s Jaguar, a Cray XT, rated one of the world’s fastest supercomputers.

“We’re always taking whatever computer time we can get our hands on” to make ever-larger simulations, Lin says, because even with millions of hours on supercomputers, models still must simplify the physics behind fusion.

Simulations also face the formidable challenge of encompassing the huge spans in space and time required to accurately represent fusion – from tiny hydrogen pellets to ITER’s 7-story-tall reactor and from near-instantaneous interactions between charged particles to the continuous behavior of a plasma cloud.

‘Fourth state of matter’

Much of Lin’s research focuses on the plasma, often referred to as the “fourth state of matter.” Plasma, which makes up more than 99 percent of the visible universe, is a gas of charged particles: electrons and ions. ITER’s plasma, heated until it is six times hotter than the sun’s core, will fuse particles and release energy. Lin and fellow scientists model plasma turbulence and the transport of the energetic alpha particles the fusion reaction produces.

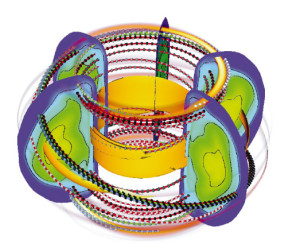

In ITER, magnetic fields will contain the plasma in a donut-shaped vacuum chamber called a tokamak. If the magnetic trap confines the hot plasma long enough at sufficiently high densities and temperatures, a controlled fusion reaction will occur, releasing huge amounts of energy.

Highly detailed models that represent all key physical processes would give researchers the confidence to perform virtual fusion experiments, Lin says, and help physicists design optimized actual experiments, each of which can cost millions of dollars.

Plasma simulations also must model the kinetic dynamics governing individual particles in complex three-dimensional systems. Kinetic simulations involve accurately representing the movement and interaction of billions or trillions of individual electrons and ions, including positively charged alpha particles ejected from atomic nuclei. The models must track the particles in multiple dimensions – their velocity, for instance, as well as their location in space.
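What tracking a particle in both position and velocity means can be sketched for a single charged particle in a magnetic field. The example below uses the Boris scheme, a standard particle-pushing method in plasma simulation; it is an illustrative choice, not a description of how the codes in this article work. The function name `boris_push`, the helper functions and the unit-free values (charge, mass and field strength all set to 1) are assumptions made for the sketch.

```python
import math


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def boris_push(x, v, E, B, q, m, dt):
    """Advance one particle's position x and velocity v by one time step dt."""
    half_kick = scale(E, q * dt / (2.0 * m))
    v_minus = add(v, half_kick)                    # first half of the electric kick
    t = scale(B, q * dt / (2.0 * m))               # rotation vector from the magnetic field
    v_prime = add(v_minus, cross(v_minus, t))
    s = scale(t, 2.0 / (1.0 + dot(t, t)))
    v_plus = add(v_minus, cross(v_prime, s))       # rotation that preserves the speed
    v_new = add(v_plus, half_kick)                 # second half of the electric kick
    x_new = add(x, scale(v_new, dt))               # move with the updated velocity
    return x_new, v_new


if __name__ == "__main__":
    x, v = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    E, B = (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)        # uniform field along z, no electric field
    for _ in range(200):
        x, v = boris_push(x, v, E, B, q=1.0, m=1.0, dt=0.05)
    print("position:", x)
    print("speed:", math.sqrt(dot(v, v)))
```

With no electric field, the particle circles the field line at constant speed, the basic gyrating motion that magnetic confinement relies on. A kinetic simulation repeats an update of this kind for every particle at every time step, which is why particle counts in the billions or trillions demand so much computing power.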

A singular window to fusion

Alpha particles generate heat that should make the fusion reaction self-sustaining, but they also excite electromagnetic instabilities that induce the particles to escape, possibly damaging the reactor walls. “If we lost large fractions of (the particles) we would not have enough self-heating to sustain a high-temperature plasma,” Lin says. Simulations must account for these electromagnetic waves and instabilities.

Models of turbulence and particle transport in the ITER reactor demand major computer power to encompass a huge span of scales in space and time. But they also must use mathematical formulas that describe both fluid-like collective behavior and the movement of individual particles that interact with each other and with electromagnetic fields.

“For most of the problems, like turbulence, we’re talking about how the alpha particles excite the turbulence and how the turbulence leads to the transport of the alpha particles,” Lin says. “They can’t be treated as a fluid element. You have to treat the movement of individual particles” – potentially billions or trillions of particles.

No fusion experiments now operating have established a self-sustained, large-scale fusion reaction. “They don’t produce large fractions of the alpha particles, so you cannot really study the alpha particle turbulence in the regime that would be relevant in ITER,” Lin adds. Virtual experiments via simulation can help address that need.

With SciDAC and INCITE collaborators Chandrika Kamath of Lawrence Livermore National Laboratory, Donal Spong of Oak Ridge National Laboratory and Ronald Waltz of General Atomics Corp., Lin is focusing on two computer programs: the gyrokinetic toroidal code (GTC) and GYRO.

GTC is a “particle-in-cell” code. It tracks individual particles and runs well on massively parallel computers composed of thousands of processors. GYRO is a continuum code that uses a fixed grid of computational points in particle position and velocity to track particles and heat transport.
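The name “particle-in-cell” comes from the step in which freely moving particles hand their charge to the cells of a grid, where the fields are then computed. A minimal one-dimensional sketch of that step follows, assuming a periodic domain and linear weighting between the two nearest grid points. The function `deposit_charge` and its parameters are hypothetical and are not taken from GTC.

```python
def deposit_charge(positions, charge, n_cells, length):
    """Spread each particle's charge onto its two nearest grid points.

    Returns the charge density at n_cells evenly spaced points of a
    periodic domain of the given length. Linear weighting is assumed.
    """
    dx = length / n_cells
    rho = [0.0] * n_cells
    for x in positions:
        s = (x % length) / dx          # position measured in cell widths
        cell = int(s)
        frac = s - cell                # fractional distance past the left grid point
        left = cell % n_cells          # guards against rounding up to n_cells
        right = (left + 1) % n_cells   # periodic wrap-around
        rho[left] += charge * (1.0 - frac) / dx
        rho[right] += charge * frac / dx
    return rho


if __name__ == "__main__":
    # One particle midway between grid points 1 and 2 of a four-cell domain.
    print(deposit_charge([0.375], charge=1.0, n_cells=4, length=1.0))
```

A particle sitting halfway between two grid points contributes equally to both, and the total charge on the grid always equals the total charge of the particles. A continuum code skips this step because it stores the particle distribution directly on a grid in position and velocity.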

Both codes solve the same equations but in different ways, Lin says, and the researchers want to compare them. “In principle you should get the same results but depending on different algorithms and different approximations, they don’t agree all the time.”

In the INCITE project, Lin and Waltz will compare outcomes from the two codes with each other and with data from existing fusion reactors to verify and validate the simulations.

Using two codes also will allow the scientists to study different plasma physics, Lin says.

“We’re not sure whether a single code would be reliable to do so. It’s better to come from different angles, even just to tackle the same physics problem.”

In general, Tang says, fusion energy sciences (FES) models with higher physics fidelity and access to exascale computers have the potential to accelerate progress toward a clean-energy future. This will require “more realistic predictive models that are properly validated against experimental measurements and will perform much more effectively on advanced HPC platforms.

“It’s an exciting prospect which I believe we can realize if enough sustained resources are dedicated to the effort and if we also manage to attract, train and assimilate the bright young talent needed in the field.”